I’m a JavaScript developer, and I like it when a Machine Learning model runs on the client side (in the browser). This post covers several topics that I believe you will find useful if you decide to run an ML model in the browser:

- How ML model was trained in Python

- How ML model was converted to be compatible with TensorFlow.js

- JavaScript Web Worker to run TensorFlow.js predict function

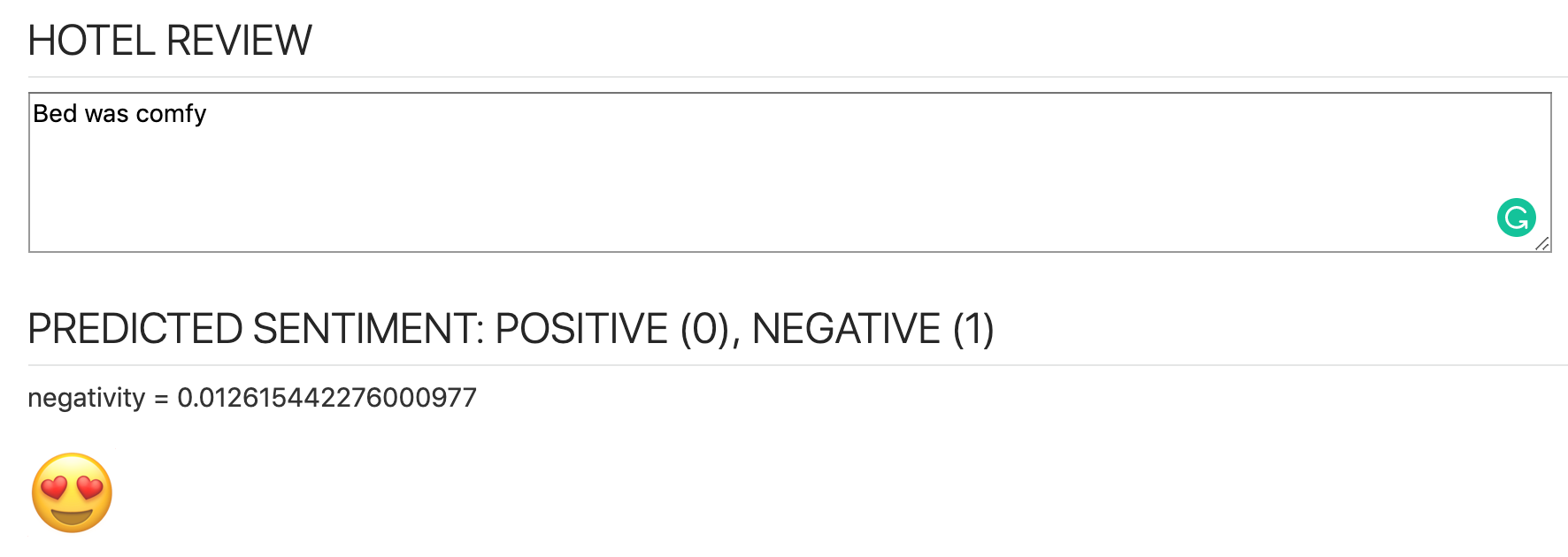

I’m using an ML model to predict the sentiment of a hotel review. I found an excellent dataset on Kaggle, 515K Hotel Reviews Data in Europe, which provides 515K hotel review records taken from Booking.com. Using this dataset, I trained a Keras model, converted it to be compatible with TensorFlow.js, and then included it in the client-side JS app. You can check how it works yourself; the app is deployed on Heroku at https://sentiment-tfjs-node.herokuapp.com/.

Try typing "Bed was comfy" and you will get a very low negativity score.

Then add "Very noisy outside", and the negativity score will increase into the neutral range.

How ML model was trained in Python

Text sentiment classification is implemented using the approach explained in Zaid Alyafeai’s post, Sentiment Classification from Keras to the Browser. I won’t go deep into how to build a text sentiment classifier here; you can read about it in Zaid’s post.

To train the model, I processed the hotel review dataset and, out of all its attributes, kept only the review text and the sentiment (0 = positive, 1 = negative). To reduce training time, I trained on a subset of the data, the first 100K reviews:

import pandas as pd

df = pd.read_csv('hotel-reviews.csv', nrows=100000)

df['REVIEW_TEXT'] = df['REVIEW_TEXT'].astype(str)

print('Number of rows ', df.shape[0])

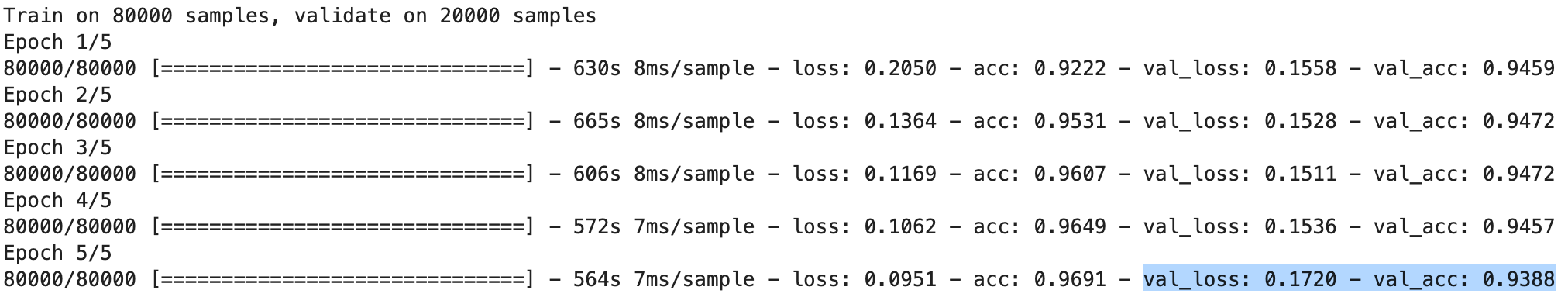

The model was trained for 5 epochs with a batch size of 128 (a larger batch size makes training faster). 20% of the training data was held out as the validation set:

model.fit(np.array(X_train_tokens), np.array(Y_train), validation_split=0.2, epochs=5, batch_size=128)
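To make those numbers concrete, here is a small sketch of the split arithmetic. It assumes all 100,000 loaded reviews end up in `X_train_tokens` (whether a separate test set was held out first isn't shown). Keras takes the validation data from the last samples passed to `fit`, before shuffling, and each epoch then runs one gradient step per batch. `splitSizes` is a hypothetical helper, not part of the app.

```javascript
// Sketch of how Keras' validation_split divides the data
// (assumption: all loaded rows are passed to model.fit).
function splitSizes(numSamples, validationSplit, batchSize) {
  // Keras keeps the first (1 - validationSplit) share for training
  // and holds out the remaining tail for validation.
  const trainSize = Math.floor(numSamples * (1 - validationSplit));
  const valSize = numSamples - trainSize;
  // One step per batch; the last batch may be smaller than batchSize.
  const stepsPerEpoch = Math.ceil(trainSize / batchSize);
  return { trainSize, valSize, stepsPerEpoch };
}

console.log(splitSizes(100000, 0.2, 128));
// { trainSize: 80000, valSize: 20000, stepsPerEpoch: 625 }
```

Under that assumption, 5 epochs amount to 3,125 weight updates over 80,000 reviews, with 20,000 reviews used only for measuring validation accuracy.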

Training succeeded, reaching a validation accuracy of 94%.

Once the model is trained, there are two more things to do. First, create a dictionary file; the client-side code uses it to encode user sentences before they are passed to the predict function. Second, save the model together with its optimizer, so it can be converted to a TensorFlow.js-friendly representation.
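The browser has to repeat the text preprocessing used during training, otherwise the model receives different token ids than it learned from. The sketch below assumes the dictionary file is the Keras `Tokenizer.word_index` saved as JSON (word to integer id) and that training used `pad_sequences` with its defaults (zero padding and truncation at the front of the sequence). `encodeSentence`, the toy dictionary, and the length of 6 are hypothetical, not taken from the sample app.

```javascript
// Hypothetical helper: turn raw user text into the fixed-length id sequence
// the model expects. Assumes a Keras Tokenizer.word_index-style dictionary.
// Same characters Keras' Tokenizer strips by default.
const FILTERS = /[!"#$%&()*+,\-.\/:;<=>?@[\\\]^_`{|}~\t\n]/g;

function encodeSentence(text, wordIndex, maxLen) {
  // Mimic Keras text_to_word_sequence: lowercase, strip punctuation, split on spaces.
  const words = text
    .toLowerCase()
    .replace(FILTERS, " ")
    .split(" ")
    .filter((w) => w.length > 0);

  // Words missing from the dictionary are skipped, as Keras does without an oov_token.
  const ids = words
    .filter((w) => Object.prototype.hasOwnProperty.call(wordIndex, w))
    .map((w) => wordIndex[w]);

  // Keep the last maxLen ids (truncating='pre') and left-pad with zeros (padding='pre').
  const kept = ids.slice(-maxLen);
  return new Array(maxLen - kept.length).fill(0).concat(kept);
}

const wordIndex = { bed: 1, was: 2, comfy: 3, very: 4, noisy: 5 }; // toy dictionary
console.log(encodeSentence("Bed was comfy!", wordIndex, 6)); // [ 0, 0, 0, 1, 2, 3 ]
```

If the tokenizer was created with `num_words` or an `oov_token`, the helper would need to mirror those settings as well.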

When saving the model, make sure training was run only once (if you ran training several times before saving, shut down the Python notebook and train again from scratch). Otherwise something gets overloaded in the saved model file, and TensorFlow.js can’t reuse the model.

How ML model was converted to be compatible with TensorFlow.js

Once the model is saved, convert it to the TensorFlow.js format. The converter creates two new files: a binary weights file and a JSON file (model.json) with the model topology and metadata (a larger model would get several weight shard files):

tensorflowjs_converter --input_format keras hotel-reviews-model.h5 tensorflowjs/

See Importing a Keras model into TensorFlow.js for more on converting an existing Keras model and loading it into TensorFlow.js.
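The two output files are linked: `model.json` lists the weight files it needs under `weightsManifest`, and `tf.loadLayersModel` fetches them relative to the URL of `model.json`, so they must be deployed side by side. This hypothetical helper lists the expected weight files, which can be handy when a deployment fails with a missing-file error; the sample object is made-up input, not the app's real file.

```javascript
// Hypothetical helper: list the binary weight shards a converted model.json refers to.
function listWeightFiles(modelJson) {
  const manifest = modelJson.weightsManifest || [];
  // Each manifest group names one or more shard files in `paths`.
  return manifest.flatMap((group) => group.paths);
}

// Illustrative input only; a real file also carries the full "modelTopology".
const sample = {
  modelTopology: {},
  weightsManifest: [{ paths: ["group1-shard1of1.bin"], weights: [] }],
};
console.log(listWeightFiles(sample)); // [ 'group1-shard1of1.bin' ]
```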

JavaScript Web Worker to run TensorFlow.js predict function

Why use a Web Worker for the TensorFlow.js predict call? The predict call is not asynchronous: it blocks the main JavaScript thread, and the screen stays frozen until the prediction completes. Sentiment prediction takes several seconds, and a frozen UI would annoy users. A Web Worker lets you delegate a long-running task to a separate JavaScript thread without blocking the main thread.
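On the main thread, the round trip to a worker can be wrapped in a Promise, so calling code simply awaits the score while the UI stays responsive. This is a sketch, not the app's code: `createPredictor` is a hypothetical name, and it relies only on the standard `postMessage`/`onmessage` members of a Web Worker, so a fake worker stands in for `new Worker("js/worker.js")` in the example. Each request carries an id so every answer resolves the request that produced it; this assumes the worker echoes `{ id, score }` back, whereas the worker.js shown in this post returns a bare score.

```javascript
// Hypothetical wrapper: one pending Promise per request, matched by id.
function createPredictor(worker) {
  let nextId = 0;
  const pending = new Map();

  // Assumes the worker replies with { id, score } for each { id, seq } it receives.
  worker.onmessage = (event) => {
    const { id, score } = event.data;
    const resolve = pending.get(id);
    if (resolve) {
      pending.delete(id);
      resolve(score);
    }
  };

  return function predict(seq) {
    const id = nextId++;
    return new Promise((resolve) => {
      pending.set(id, resolve);
      worker.postMessage({ id, seq });
    });
  };
}

// Fake worker for illustration: answers asynchronously, like a real Worker would.
const fakeWorker = {
  onmessage: null,
  postMessage(msg) {
    setTimeout(() => this.onmessage({ data: { id: msg.id, score: 0.1 } }), 0);
  },
};

const predict = createPredictor(fakeWorker);
predict([0, 0, 1, 2, 3]).then((score) => console.log("score:", score)); // score: 0.1
```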

The JavaScript application is based on the Oracle JET toolkit (a collection of open-source libraries, including knockout.js and require.js). To run the sample app on your computer, execute these commands:

- npm install -g @oracle/ojet-cli

- From the app root folder, run: ojet restore

- From the app root folder, run: ojet serve

A function in appController.js is called each time the user stops typing, to request a sentiment prediction. This function creates the Web Worker (if it doesn’t exist yet) and sends the user input to it (the input is sent already encoded and ready to be used for tensor creation):

if (!worker) {
  worker = new Worker("js/worker.js");
  // Register the reply handler once, when the worker is created
  worker.onmessage = function (event) {
    console.log('prediction: ' + event.data);
    self.negativityScore(event.data);
    self.sentiment(convertToSentiment(event.data));
  };
}
worker.postMessage(seq);

The event listener handles the returned message (the prediction) and pushes it to the UI through Knockout observables.
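One common way to detect that the user has stopped typing is a debounce: every keystroke restarts a timer, and the call only goes through after a quiet period. A minimal sketch; the 500 ms delay and the `requestPrediction` name are assumptions, not taken from appController.js.

```javascript
// Minimal debounce: fn runs only after `delayMs` passes without further calls.
function debounce(fn, delayMs) {
  let timer = null;
  return function (...args) {
    clearTimeout(timer);
    timer = setTimeout(() => fn.apply(this, args), delayMs);
  };
}

// Hypothetical usage: only the last text typed within the quiet period is sent.
const requestPrediction = debounce((text) => console.log("predict:", text), 500);
requestPrediction("Bed");
requestPrediction("Bed was");
requestPrediction("Bed was comfy"); // after 500 ms logs: predict: Bed was comfy
```

Since the app already uses Knockout, the same effect is available on an observable through the `rateLimit` extender with `method: "notifyWhenChangesStop"`.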

The Web Worker is implemented in the worker.js file. This file runs in its own context, so you must load the TensorFlow.js library here explicitly. Make sure to set the CPU backend: a worker has no DOM canvas, so the default WebGL backend may not be available there (depending on browser OffscreenCanvas support and the TensorFlow.js version):

importScripts('https://cdn.jsdelivr.net/npm/setimmediate@1.0.5/setImmediate.min.js');
importScripts('https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@1.2.7/dist/tf.min.js');
tf.setBackend('cpu');

This is the main code, the message listener in the Web Worker, where the TensorFlow.js predict function is called (with the user input converted to a tensor) and the result is posted back to the main thread:

let model; // loaded lazily on the first message

onmessage = async function (event) {
  console.log('executing worker');
  const seq = event.data;
  if (!model) {
    model = await createModel();
  }
  // tf.tidy disposes the input, expanded input and prediction tensors
  const score = tf.tidy(() => {
    const input = tf.tensor(seq).expandDims(0);
    return model.predict(input).dataSync()[0];
  });
  postMessage(score);
};

async function createModel() {
  const model = await tf.loadLayersModel('ml/model.json');
  return model;
}
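Back on the main thread, the score is turned into a label by `convertToSentiment`, whose body isn't part of this walkthrough. Assuming the model outputs a negativity score between 0 and 1 (1 meaning negative, matching the training labels), a plausible version buckets the score into three labels. The 0.3/0.7 thresholds and the label strings are hypothetical.

```javascript
// Hypothetical mapping from the model's negativity score (0..1) to a label.
// Thresholds are illustrative; tune them against real predictions.
function convertToSentiment(score, lower = 0.3, upper = 0.7) {
  if (score < lower) return "positive";
  if (score > upper) return "negative";
  return "neutral";
}

console.log(convertToSentiment(0.05)); // positive
console.log(convertToSentiment(0.5)); // neutral
console.log(convertToSentiment(0.92)); // negative
```

A middle band is what lets a mixed review like "Bed was comfy. Very noisy outside" land on neutral instead of flipping between positive and negative.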

Resources:

- Source code: GitHub

- Live sample app: https://sentiment-tfjs-node.herokuapp.com/
