Deploying Iris Classifications with FastAPI and Docker

TL;DR

We deploy a FastAPI application with Docker to classify irises based on their measurements. All of the code is found here.

Iris Dataset

The Iris dataset is a simple yet widely used example for statistical modeling and machine learning. The data contains 150 observations evenly distributed among 3 iris subclasses (50 each): setosa, versicolor, virginica. Each observation contains the corresponding subclass along with these measurements: sepal width, sepal length, petal width, petal length (all units are in cm).
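To make the shape of the data concrete, here is a small sketch that loads the copy of the dataset bundled with scikit-learn and counts the observations per subclass. It assumes scikit-learn is installed; note that scikit-learn orders the columns as sepal length, sepal width, petal length, petal width.

```python
from collections import Counter

from sklearn.datasets import load_iris

# Load the Iris data that ships with scikit-learn.
iris = load_iris()

# One row per observation, one column per measurement (in cm).
print(iris.data.shape)
print(iris.feature_names)

# Count how many observations belong to each subclass.
counts = Counter(str(iris.target_names[label]) for label in iris.target)
print(counts)
```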

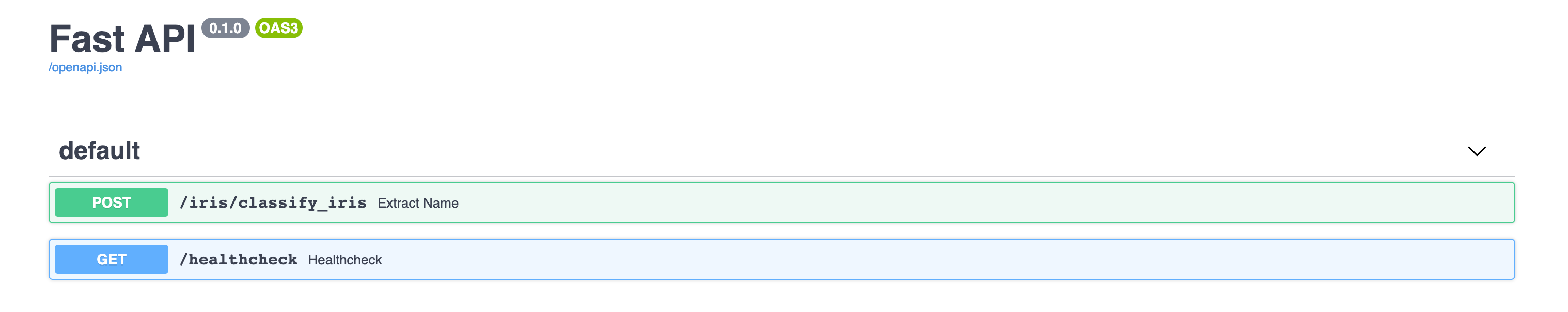

FastAPI

FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.6+ based on standard Python type hints.

The FastAPI website provides more detail on how FastAPI works and the advantages of using FastAPI. True to its name, FastAPI is fast. On top of that, the setup is very simple and the framework comes with a nifty UI (more about the UI part later).

Step 0: Prerequisites

- Install Docker

- Follow the instructions in the README to ensure that you have all dependencies installed (pip install -r requirements.txt)

We will use Docker and FastAPI to deploy our model as a REST API. An API (Application Programming Interface) is a set of functions and procedures that define how other applications interact with your code. REST APIs take advantage of HTTP methods such as GET, POST, PUT, DELETE to manage this interaction.

In this example, we will only be using two HTTP methods:

- GET: used to retrieve data from the application

- POST: used to send data to the application (for inference)

When we send an HTTP request, the server responds with a status code that indicates the outcome of the request. We can classify status codes based on the first digit:

- 1xx: informational response

- 2xx: successful request

- 3xx: further action is required to complete the request

- 4xx: request failed because of client error (e.g. bad syntax)

- 5xx: request failed because of server issues (e.g. feature not implemented)
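The first-digit rule above can be sketched in a few lines of Python. The function name status_class and the labels it returns are my own choices for illustration; only http.HTTPStatus comes from the standard library.

```python
from http import HTTPStatus


def status_class(code: int) -> str:
    """Return the class of an HTTP status code based on its first digit."""
    classes = {
        1: "informational",
        2: "success",
        3: "redirection",
        4: "client error",
        5: "server error",
    }
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    # Integer division by 100 isolates the first digit.
    return classes[code // 100]


for code in (200, 404, 500):
    # HTTPStatus supplies the standard reason phrase for each code.
    print(code, HTTPStatus(code).phrase, "->", status_class(code))
```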

With our application, we should only expect to see 2xx, 4xx and 5xx codes. The 200 OK status code is the one we want to see.

Create a new directory called iris. This directory will contain all the code used for building the application.

Step 1: Train a Simple Classifier

For simplicity, let’s use Logistic Regression as our algorithm. We can use sklearn to supply the Iris dataset and to do the modeling.

I structured the classifier as a class for readability. train_model is used to train the classifier and classify_iris is used to classify new observations following this format:

{'sepal_w': <sepal width>, 'sepal_l': <sepal length>, 'petal_w': <petal width>, 'petal_l': <petal length>}
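As a minimal sketch of what such a class could look like (not necessarily identical to the code in the repo): the class and method names and the input keys follow the description above, while the shape of the returned dictionary and the max_iter setting are assumptions made for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression


class IrisClassifier:
    def __init__(self):
        # scikit-learn column order: sepal length, sepal width,
        # petal length, petal width.
        self.X, self.y = load_iris(return_X_y=True)
        self.iris_type = {0: "setosa", 1: "versicolor", 2: "virginica"}
        self.clf = self.train_model()

    def train_model(self) -> LogisticRegression:
        # max_iter is raised (an assumption) so the solver converges cleanly.
        return LogisticRegression(max_iter=1000).fit(self.X, self.y)

    def classify_iris(self, features: dict) -> dict:
        # Reorder the named inputs to match the training column order.
        row = [
            features["sepal_l"],
            features["sepal_w"],
            features["petal_l"],
            features["petal_w"],
        ]
        probabilities = self.clf.predict_proba([row])[0]
        best = int(probabilities.argmax())
        # Assumed response shape: predicted subclass plus its probability,
        # converted to plain Python types so it serializes to JSON.
        return {
            "class": self.iris_type[best],
            "probability": round(float(probabilities[best]), 2),
        }


classifier = IrisClassifier()
result = classifier.classify_iris(
    {"sepal_l": 5.1, "sepal_w": 3.5, "petal_l": 1.4, "petal_w": 0.2}
)
print(result)
```

Training happens once in the constructor, so it makes sense to create a single instance and reuse it across requests rather than retraining on every call.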

While I didn’t do so here, we can also elect to add additional methods to provide insight into model performance or for interpreting the coefficients. This information can be retrieved from our endpoint via a GET request.

Step 2: Define the router

In the same directory as the classifier script we wrote in Step 1, create a folder called routers which will hold our routers. Routers are used to break up complex APIs into smaller pieces. Each router will have its own prefix to mark this separation. While this is not immediately necessary, I decided to use routers in case our API becomes more complex.

In the routers directory, create a script called iris_classifier_router.py. This routes /classify_iris to its corresponding function. The response is the same as the one generated by IrisClassifier.classify_iris().

Step 3: Define the app

In the same directory as the routers folder, create another script app.py. In this script, define the app and specify the router(s).

We also define a healthcheck function. A health check API returns the operational status of the application. Our health check returns the cheery phrase Iris classifier is all ready to go! when the application is healthy. Note that this returns a 200 status code.

Step 4: Include Dependencies, Dockerfile

By this step, the file structure should look like this:

├── iris
│   ├── iris_classifier.py
│   ├── app.py
│   └── routers
│       └── iris_classifier_router.py

Go one directory up to create a requirements.txt file to specify all of the dependencies required to build this app.

My requirements.txt looks like this:

fastapi==0.38.1
numpy==1.16.4
scikit-learn==0.20.1
uvicorn==0.9.0

We also need to create a Dockerfile which will contain the commands required to assemble the image. Once deployed, other applications will be able to call our iris classifier to make cool inferences about flowers.

The Dockerfile can also be found in the GitHub repo linked above.

The first line defines the Docker base image for our application. The python:3.7-slim-buster image is a popular choice: it’s lightweight and very quick to build. The second line specifies the maintainer. Maintainer information can be viewed by using docker inspect.

The next couple of lines are very generic. Let’s skip ahead to line 14, EXPOSE 5000. This documents that the container listens on port 5000, but it doesn’t actually publish the port. Publishing happens with the -p flag when we run the container.

Our Dockerfile concludes with a CMD, which is used to set the default command to uvicorn --host 0.0.0.0 --port 5000 iris.app:app. The default command is executed when we run the container. This particular command is what is needed to kick off our application.

Step 5: Run the Docker Container

Build the Docker image using docker build . -t iris. This builds an image with everything in the repository and tags it as iris. This step may take a while to finish.

After the image is built, start a container from it using docker run -i -d -p 8080:5000 iris. This makes the container’s port 5000 available on port 8080 of your localhost. Running the container also kicks off the default command we set earlier, which effectively starts up the app!

Testing

Get a healthcheck with

curl 'http://localhost:8080/healthcheck'

To get an inference, use this request. The JSON string after -d is the model input. Feel free to play around with it!

curl 'http://localhost:8080/iris/classify_iris' -X POST -H 'Content-Type: application/json' -d '{"sepal_l": 5, "sepal_w": 2, "petal_l": 3, "petal_w": 4}'
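If you would rather call the endpoint from Python than from curl, the same POST request can be sketched with the standard library alone. The helper names build_classify_request and classify are my own; the URL, header and payload mirror the curl command above, and the container is assumed to be running with the 8080:5000 port mapping.

```python
import json
import urllib.request

# Host port published by `docker run -p 8080:5000` (assumed to be running).
BASE_URL = "http://localhost:8080"


def build_classify_request(
    features: dict, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build the POST request without sending it."""
    body = json.dumps(features).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/iris/classify_iris",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def classify(features: dict, base_url: str = BASE_URL) -> dict:
    """Send the request and decode the JSON response."""
    request = build_classify_request(features, base_url)
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))


# With the container running, this sends the same input as the curl command:
# classify({"sepal_l": 5, "sepal_w": 2, "petal_l": 3, "petal_w": 4})
```

Splitting the request construction from the network call makes it easy to inspect what will be sent before the service is up.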

Remember the cool UI I mentioned earlier? Go to localhost:8080/docs to check it out! This makes testing requests so much easier.

Hope you enjoyed and stay tuned for next time!

If you are interested in reading more about model deployment, I have another article about deploying a Keras text classifier with TensorFlow Serving.
