Most serious data science projects should take place in a Docker container or a virtual environment. Whether for testing or dependency management, it’s just good practice, and isolating a project this way gives you greater power for debugging and understanding how things work together in a unified scope. This post is about creating a virtual environment in Python 3, and while the documentation is seemingly straightforward, there are a few major points of clarity I’d like to make, especially when using Jupyter notebooks and managing kernels and dependencies.

What is venv?

The Python 3 module ‘venv’ comes with Python 3’s standard library. This means it’s built in when you install Python 3, so you usually shouldn’t have to worry about installing it (some Linux distributions, such as Debian and Ubuntu, ship part of it separately in the python3-venv package). Creating an actual virtual environment is quite simple:

python3 -m venv choose_your_venv_name

The first word, ‘python3’, tells the command line to run the Python 3 interpreter. The ‘-m’ option then instructs Python 3 to look up a module by name, in this case ‘venv’, and run it as a script. The last argument is the name of your virtual environment, which should be relevant to the context of your intended project (e.g. ‘statsbox’ for a statistics-based virtual environment).
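Because venv is an ordinary standard-library module, the same step can also be scripted from Python. A minimal sketch, assuming you want the environment created relative to the current directory; `create_env` is a hypothetical helper wrapped around the real `venv.EnvBuilder` class, and `with_pip=True` mirrors the command line’s default of installing pip into the new environment.

```python
import os
import venv
from pathlib import Path


def create_env(name: str, with_pip: bool = True) -> Path:
    """Create a virtual environment, roughly like `python3 -m venv <name>`."""
    env_dir = Path(name).resolve()
    # EnvBuilder does the same work as the command-line tool: it writes
    # pyvenv.cfg and sets up the bin (Scripts on Windows), include and lib folders.
    # The command line uses symlinks by default everywhere except Windows.
    builder = venv.EnvBuilder(with_pip=with_pip, symlinks=(os.name != "nt"))
    builder.create(str(env_dir))
    return env_dir
```

Calling `create_env("tutorial_box")` should have roughly the same effect as running `python3 -m venv tutorial_box` in the current directory.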

Running this command will create a directory with your chosen name inside whatever directory you’re currently in, so be careful to cd your way to your intended directory before running it. After a few seconds, your virtual environment should be created. For example, I ran the following command inside my home directory:

python3 -m venv tutorial_box

After a few seconds, I ran ls to check my home directory, and sure enough, ‘tutorial_box’ is there. If we cd into any virtual environment’s directory immediately after creating it and run ls again, we find that it’s not empty:

bin include lib pyvenv.cfg

Great. These are your basic ‘blank slate’ files, which are necessary in order to get your virtual environment up and running.

But just because you created a virtual environment doesn’t mean you’re truly inside of it (activated) yet. This is an important conceptual distinction that I find can confuse intro learners. Even if you cd into the virtual environment directory (as above), your command-line commands, your Jupyter notebooks, and your packages will still be determined by your global environment’s PATH variable. That means any Jupyter notebook you open will still have access to any packages or modules you’ve installed in your global environment. If you’ve unwittingly produced a genius machine-learning model that depends on a package version which can’t be installed or built in a different environment, your code will break in that environment. This is common when, say, attempting to deploy a data science app with Heroku, Streamlit, Flask, Dash, etc.
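Of those blank-slate files, pyvenv.cfg is the one that marks the directory as a virtual environment: it records the `home` directory of the Python that created it and whether global site-packages are visible (`include-system-site-packages = false` unless you ask otherwise). A small sketch for peeking inside a fresh environment; both helpers are hypothetical, and the exact keys in pyvenv.cfg vary a little between Python versions.

```python
from pathlib import Path


def list_env_contents(env_dir: str) -> list:
    """Return the top-level entries of an environment directory, like `ls`."""
    return sorted(entry.name for entry in Path(env_dir).iterdir())


def read_pyvenv_cfg(env_dir: str) -> dict:
    """Parse the `key = value` lines of an environment's pyvenv.cfg file."""
    config = {}
    text = (Path(env_dir) / "pyvenv.cfg").read_text(encoding="utf-8")
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # skip lines without an '=' sign
            config[key.strip()] = value.strip()
    return config
```

For a freshly created tutorial_box, `read_pyvenv_cfg("tutorial_box")["include-system-site-packages"]` should be `"false"`, which is exactly why your global packages are not visible once the environment is active.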

A good metaphor is to think of a virtual environment (or Docker container) as a literal computer, a mini-computer inside your computer. In other words, just because you have the new mini-computer inside your computer doesn’t mean you’ve turned it on yet.

To do so, activate your virtual environment in the command line:

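source bin/activate

That’s it! (This path is relative, so it works because we’re still inside tutorial_box; from elsewhere, use source path/to/tutorial_box/bin/activate, or tutorial_box\Scripts\activate on Windows.) To know it’s working, you should see the name of your virtual environment in parentheses at the start of your command prompt, preceding your usual current directory path. In this case, it’s (tutorial_box).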
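Activation is less magical than it looks: the activate script mainly sets a `VIRTUAL_ENV` variable and puts the environment’s bin directory at the front of `PATH`, so `python` and `pip` resolve to the copies inside the environment first. A rough Python imitation of that effect, assuming the usual layout (bin on macOS/Linux, Scripts on Windows); `activated_env` and `which_python` are hypothetical helpers, and the real script also changes your prompt and defines a `deactivate` command.

```python
import os
import shutil
from pathlib import Path


def activated_env(env_dir: str) -> dict:
    """Return a copy of os.environ changed roughly the way `activate` changes it."""
    env_dir = str(Path(env_dir).resolve())
    bin_name = "Scripts" if os.name == "nt" else "bin"
    env = dict(os.environ)
    env["VIRTUAL_ENV"] = env_dir
    # Prepend the environment's executables so they shadow the global ones.
    env["PATH"] = os.path.join(env_dir, bin_name) + os.pathsep + env.get("PATH", "")
    env.pop("PYTHONHOME", None)  # the activate script unsets PYTHONHOME too
    return env


def which_python(env: dict):
    """Resolve `python` the way a shell would, using the given PATH."""
    return shutil.which("python", path=env["PATH"])
```

With tutorial_box created, `which_python(activated_env("tutorial_box"))` should point into tutorial_box’s bin folder rather than at your global Python.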

Finally, we’ve created and activated our virtual environment. If you run Python 3 manually in the terminal, you’ll be operating in a fresh environment, and you’ll have to pip install any modules and packages you need for a new project from scratch. But remember, this is a good thing! It will save you from dependency hell down the line.
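Two quick checks make the ‘fresh environment’ idea concrete. Inside a venv, `sys.prefix` differs from `sys.base_prefix`, and you can ask an environment’s own interpreter whether a package is importable. Calling that interpreter by its path works even without activating the environment. A sketch with hypothetical helper names, assuming the usual bin/python (macOS/Linux) or Scripts\python.exe (Windows) layout.

```python
import subprocess
import sys
from pathlib import Path


def in_virtualenv() -> bool:
    """True when the interpreter running this code belongs to a venv."""
    # Inside a venv, sys.prefix points at the environment directory, while
    # sys.base_prefix still points at the Python installation it came from.
    return sys.prefix != sys.base_prefix


def env_python(env_dir: str) -> str:
    """Path to an environment's own interpreter."""
    if sys.platform == "win32":
        return str(Path(env_dir) / "Scripts" / "python.exe")
    return str(Path(env_dir) / "bin" / "python")


def has_module(python_exe: str, module: str) -> bool:
    """Return True if `module` is importable by the given interpreter."""
    check = (
        "import importlib.util, sys; "
        f"sys.exit(0 if importlib.util.find_spec({module!r}) else 1)"
    )
    # Run the check in the target interpreter, not the one running this code.
    return subprocess.run([python_exe, "-c", check]).returncode == 0
```

Right after creation, `has_module(env_python("tutorial_box"), "ipykernel")` should return False, while standard-library modules such as json are always found.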

…but why are my Jupyter notebooks still using my global packages?

This is the fundamental point of clarity I’d like to make in this tutorial.

So, we’ve activated our virtual environment. Any Python 3 commands we run will be limited in scope to our fresh virtual environment. So what happens when we open a new Jupyter notebook?

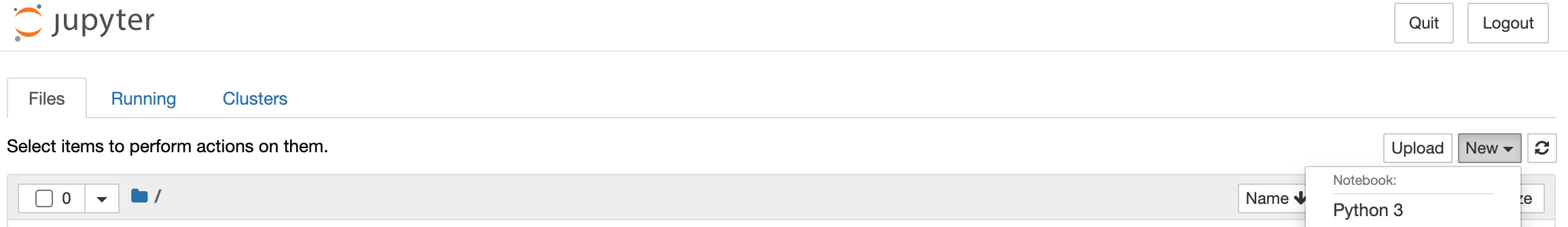

jupyter notebook

Well, Jupyter should still open in the browser, but if we attempt to create a new notebook, we should see the following kernel option:

Under the ‘New’ notebook tab, we see ‘Python 3’. This is quite confusing: we simply want to create a new Jupyter notebook, but we’re given a list of kernels to choose from. In a way (somewhat different from virtual environments), each kernel is its own Python runtime, and it will run independently of your virtual environment, even if that environment is activated!

The default Python 3 kernel which appears will actually be your global Python 3 environment all over again, which completely defeats the point of using a virtual environment, since we want to control exactly which packages and modules we’re using from a blank slate.
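Each kernel Jupyter offers is described by a kernel.json file in a kernels/&lt;name&gt;/ folder under one of Jupyter’s data directories (running jupyter kernelspec list shows which folders are in use on your machine). The first entry of its `argv` list is the interpreter the kernel launches, which is how the default ‘Python 3’ kernel can end up pointing at your global Python. A stdlib-only sketch that reports that interpreter for every kernel in a folder you pass in; `kernel_interpreters` is a hypothetical helper.

```python
import json
from pathlib import Path


def kernel_interpreters(kernels_dir: str) -> dict:
    """Map each kernel name in kernels_dir to (display name, interpreter path)."""
    root = Path(kernels_dir)
    if not root.is_dir():
        return {}
    result = {}
    for spec_file in sorted(root.glob("*/kernel.json")):
        spec = json.loads(spec_file.read_text(encoding="utf-8"))
        argv = spec.get("argv", [])
        # argv[0] is the executable Jupyter starts for this kernel.
        interpreter = argv[0] if argv else None
        result[spec_file.parent.name] = (spec.get("display_name", ""), interpreter)
    return result
```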

In order to add a new kernel to your Jupyter environment which matches the virtual environment you’ve just created with venv, you need to run the following command inside of an already activated virtual environment:

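pip install ipykernel

This installs ipykernel, the package that provides Jupyter’s Python kernel, into your virtual environment. Note that there is no --user flag here: inside an activated virtual environment, pip refuses --user installs, and you want the package to land in the environment anyway. You’ll need to run this in every newly-created virtual environment, since each one is a blank slate and will not have ipykernel installed. Then, in order to finally add the kernel to your Jupyter notebooks’ kernel option list, run the following: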

python -m ipykernel install --user --name=whatever_you_want

There are some important points here. First, the --user flag belongs in this command: it registers the kernel in your user-level Jupyter data directory rather than installing a package. Second, whatever_you_want can literally be whatever you want, and it doesn’t have to match the name of your virtual environment. What matters is that the kernel you create with this command runs the Python interpreter of your currently-activated virtual environment, so it sees all of the packages and modules installed there. It therefore makes sense to give it a name that relates to your virtual environment.

Keep in mind that a kernel is a pointer to the environment, not a snapshot of it: packages you install or update in the virtual environment later show up in that kernel too, under every name you registered it with. Eventually, something will break, or some installed or updated package will give you a slightly different machine learning model, and you’ll want to revert to a previous state. To make that possible, version the environment itself, for example by creating a new virtual environment for each significant change and registering each one as its own kernel with a version number in its name (tutorial_box_v1, tutorial_box_v2, and so on). That gives you a kind of localized version control for your notebooks.
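To see why a kernel is a pointer rather than a snapshot, here is a sketch of the kind of kernel.json that registration produces. The `argv` list names the virtual environment’s own interpreter, so the kernel always runs with whatever that environment currently contains. `write_kernel_spec` is a hypothetical helper, the example interpreter path in the usage note is made up, and the exact fields ipykernel writes can differ between versions (the real command also copies logo files and accepts a `--display-name` option).

```python
import json
import sys
from pathlib import Path


def write_kernel_spec(kernels_dir: str, name: str, python_exe: str = sys.executable) -> Path:
    """Write kernels_dir/<name>/kernel.json pointing at python_exe."""
    spec = {
        # Jupyter runs this command and fills in {connection_file} itself.
        "argv": [python_exe, "-m", "ipykernel_launcher", "-f", "{connection_file}"],
        "display_name": name,
        "language": "python",
    }
    spec_dir = Path(kernels_dir) / name
    spec_dir.mkdir(parents=True, exist_ok=True)
    spec_path = spec_dir / "kernel.json"
    spec_path.write_text(json.dumps(spec, indent=1), encoding="utf-8")
    return spec_path
```

For example, `write_kernel_spec("/tmp/kernels", "tutorial_box_v1", "/home/me/tutorial_box/bin/python")` would write a spec whose only tie to your packages is that interpreter path.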

Alternatively, you can always create a requirements.txt file with the command:

pip freeze > requirements.txt

The packages pinned in that .txt file can then be installed elsewhere, such as in a different virtual environment or Docker container, with pip install -r requirements.txt.
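pip freeze writes one `name==version` pin per installed distribution. A rough stdlib imitation using `importlib.metadata` (Python 3.8+), meant only to illustrate the file format: it ignores details the real command handles, such as editable installs, direct URL references, and leaving out pip itself. `freeze_lines` and `write_requirements` are hypothetical helpers.

```python
from importlib import metadata


def freeze_lines() -> list:
    """Return sorted `name==version` pins for distributions this interpreter can see."""
    pins = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        # Keep the first copy found on sys.path, which is the one imports use.
        if name and name.lower() not in pins:
            pins[name.lower()] = f"{name}=={dist.version}"
    return [pins[key] for key in sorted(pins)]


def write_requirements(path: str = "requirements.txt") -> None:
    """Write the pins to a file, roughly like `pip freeze > requirements.txt`."""
    with open(path, "w", encoding="utf-8") as fh:
        fh.writelines(line + "\n" for line in freeze_lines())
```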

Better still, I would recommend using pip-compile, which is part of the pip-tools package. It automatically generates a requirements.txt file from a short, manually-typed requirements.in file: running pip-compile requirements.in resolves every dependency of the packages listed in requirements.in and pins them all in requirements.txt.
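The useful part of pip-compile is that it follows dependencies of dependencies. Its real resolver also chooses versions that satisfy every constraint and pins them, which this toy ignores; the sketch below only shows the ‘follow the graph’ step, over a small hypothetical dependency map whose package names are made up for illustration.

```python
from collections import deque


def transitive_closure(top_level: list, depends_on: dict) -> set:
    """Collect every package reachable from the top-level requirements."""
    seen = set()
    queue = deque(top_level)
    while queue:
        pkg = queue.popleft()
        if pkg in seen:
            continue
        seen.add(pkg)
        # Enqueue this package's own requirements, if we know any.
        queue.extend(depends_on.get(pkg, []))
    return seen


# Hypothetical dependency graph; these package names are illustrative only.
DEPENDS_ON = {
    "plotlib": ["arraycore", "colorkit"],
    "statskit": ["arraycore"],
    "colorkit": [],
    "arraycore": [],
}

# A requirements.in would list only what you asked for ...
requirements_in = ["plotlib", "statskit"]
# ... while the compiled requirements.txt also needs their dependencies.
print(sorted(transitive_closure(requirements_in, DEPENDS_ON)))
# ['arraycore', 'colorkit', 'plotlib', 'statskit']
```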

That covers it all. venv, ipykernel, and pip-compile are all you really need to create fresh virtual environments, and hopefully this post has clarified how they are tied together with Jupyter notebooks, kernels, and dependency management.

Thanks for reading!
