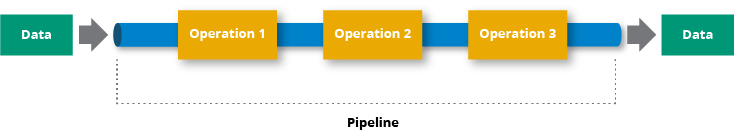

What is a data pipeline?

A data pipeline is a sequence of steps for processing data. If the data has not yet been loaded into the data platform, it is ingested at the beginning of the pipeline. In each subsequent step, a process produces an output that serves as the input for the next step. This continues until the pipeline is complete. In some cases, independent steps can run in parallel.

Components of a data pipeline:-

A data pipeline has three main components: a source, one or more processing steps, and a destination.
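To make the three components concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the sample records, the function names (`source`, `parse_amount`, `add_flag`, `run_pipeline`), and the use of an in-memory list as the destination. A real pipeline would typically read from a file, queue, or API and write to a database or data warehouse.

```python
from typing import Callable, Iterable, Iterator, List

Record = dict
Stage = Callable[[Iterable[Record]], Iterator[Record]]


def source() -> Iterator[Record]:
    """Hypothetical source: yields raw records (stands in for a file, queue, or API)."""
    yield from [
        {"user": "alice", "amount": "10.5"},
        {"user": "bob", "amount": "n/a"},  # malformed value, dropped by the first stage
        {"user": "carol", "amount": "4"},
    ]


def parse_amount(records: Iterable[Record]) -> Iterator[Record]:
    """Processing step 1: convert 'amount' to float and skip records that fail to parse."""
    for record in records:
        try:
            yield {**record, "amount": float(record["amount"])}
        except ValueError:
            continue


def add_flag(records: Iterable[Record]) -> Iterator[Record]:
    """Processing step 2: mark records whose amount is 10 or more."""
    for record in records:
        yield {**record, "large": record["amount"] >= 10}


def run_pipeline(src: Iterable[Record], stages: List[Stage], destination: List[Record]) -> List[Record]:
    """Chain the stages so each one's output becomes the next one's input."""
    data: Iterable[Record] = src
    for stage in stages:
        data = stage(data)
    # Destination: here just an in-memory list (an assumption for this sketch).
    destination.extend(data)
    return destination


result = run_pipeline(source(), [parse_amount, add_flag], [])
print(result)
```

Because each stage is a generator, records flow through one at a time rather than being fully materialized between steps, which is one common way to keep memory use flat as data volume grows.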

Data Pipeline Considerations:-

Many factors must be weighed when designing a data pipeline architecture. For instance:

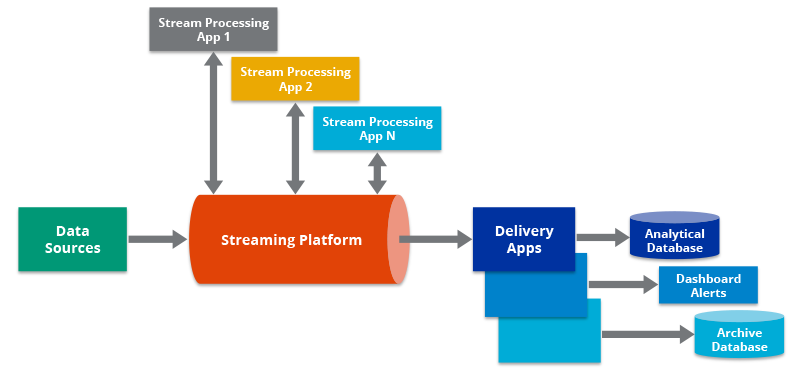

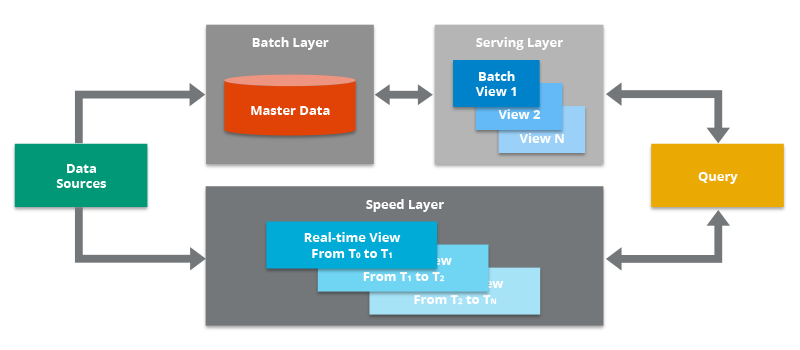

- Does your pipeline need the ability to handle streaming data?

- What kind of data volume do you anticipate?

- How much and what sort of processing does the data pipeline require?

- Is the data generated in the cloud or on-premises, and where does it need to go?

- Are you planning to construct the pipeline using microservices?

- Is there a particular technology that your team is already proficient in developing and maintaining?

Source/Reference: Towards Data Science article by Satish Chandra Gupta, hazelcast dot com

Scalable and efficient data pipelines are as important for the success of analytics, data science, and machine learning as reliable supply lines are for winning a war.
