In this article I share real MCQs, as well as my 3 top tips to get ready for the exam.

A note for my readers: This post includes affiliate links for which I may earn a small commission at no extra cost to you, should you make a purchase.

A Lack Of Resources To Practice?

If you are in the process of studying for the Databricks Associate Developer for Apache Spark 3.0 certification you are probably facing the same problem I faced a few weeks ago: a lack of mock tests to assess your readiness.

By now, you should know that the exam consists of 60 MCQs and that you will be given 120 mins to answer correctly to at least 42 of them (70%).

Another particular I suppose you have noticed is that the exam will cost you $240 (including VAT) but you will be allowed a single attempt, such that if you fail, you will have to pay again to retake it. With these premises, I guess you really wish to nail the exam at the first shot.

But you might be wondering: “If I can’t find any examples of questions that are representative of the level of difficulty of the exam, how can I actually understand if I am ready or not?”.

This is the same dilemma I run into just before sitting the exam: I was not sure to be ready enough to get beyond the 70% threshold and probably I wasn’t, as I found real questions being more challenging than expected.

I found real questions being more challenging than expected.

Despite some struggles (also technical as my exam was paused by the proctor for 30 mins), I managed to clear the certification with a good mark after preparing for around 2 months.

There are at least a dozen of other articles on Medium on this topic (I read them all as part of my preparation) and I found 3–4 of them really insightful (links at the end of the article), but none of them included any mock multiple choice questions that helped me to test my knowledge.

In this article I share with you 10 MCQs for the PySpark version of the certification, that you can expect to find in the real exam.

For this reason, in this article, I share with you 10 MCQs for the PySpark version of the certification, that you can expect to find in the real exam. Please note that I am not allowed to disclose the exact questions, so I have rephrased them, by keeping the level of difficulty intact, so you can trust them being a valuable study resource.

Before jumping on the actual questions, let me give you 3 tips that you won’t probably find in other articles, but that I reckon could make a huge difference in terms of the final mark you could get.

My Honest Tips To Pass The Exam

No, I won’t suggest you peruse Spark - The Definitive Guide or the 2d Edition of Learning Spark as…you already know about them…right? What I am going to give you are 3 tips that will disproportionally increase your chance to succeed - so read carefully!

#1 Learn to navigate the PySpark documentation by heart

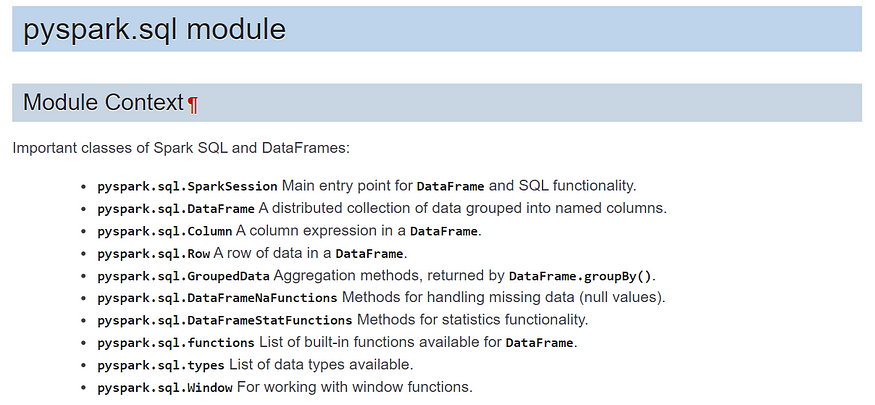

This is probably the best suggestion I can give you to excel. In effect, during the exam, you will be allowed to refer to the pyspark.sql documentation on your right screen, but you won’t be able to use CTRL+F to search for keywords.

This means that unless you know where to find specific methods or specific functions, you could waste a lot of time scrolling back and forth and - trust me- this will make you nervous. Instead, I suggest you to focus on the content of the following three classes:

- pyspark.sql.DataFrame: for instance, you should be able to locate the

coalesce()andjoin()functions. - pyspark.sq.Column: for instance, you should know that

when(),between()andotherwiseare applied to columns of a DataFrame and not directly to the DataFrame. - pyspark.sql.functions: for instance, you should know that functions used to manipulate time fields like

date_add(),date_sun()andfrom_unixtime()(yes I got a question on this function! Nowhere to be found in the books…) are described here.

I approached and studied the structure of the documentation only 2 days before the test, but I wish I did it much earlier as this knowledge helped me to answer correctly to at least 7–8 questions.

Hence, what I am trying to convey here is not to overestimate your knowledge on the PySpark syntax as your memory could betray you during the exam. Take full advantage of the documentation instead.

#2 Check this course on Udemy: Databricks Certified Developer for Spark 3.0 Practice Exams

It turns out that actually 2 full mock tests for Python/Pyspark are available on Udemy and include 120 practice exam quiz for the Apache Spark 3.0 certification exam!

I purchased access to the tests 2 months before the exam, as I wanted to study the material based on real questions and review the topics for which I got wrong answers.

Like in the real exam, you have 2 hours to complete the test and the weight of each topic is also respected, that means:

- Spark DataFrame API Applications (~72%)

- Spark Architecture: Conceptual understanding (~17%)

- Spark Architecture: Applied understanding (~11%)

In my case, at least 12–15 questions in the actual exam were very similar to questions I practiced in these tests (both in terms of phrasing and solutions), so I reckon it is an excellent investment while you are studying for the certification.

#3 Don’t only run the code examples in the official guides. Go one step further.

With hindsight, one thing I could have done better while preparing, was to experiment running many more functions and methods belonging to the Spark DataFrame API and carefully review their syntax in detail, instead of just focusing on the code snippets on the books.

Think about that: you will find at least 40–43 questions around the Spark DataFrame API, therefore it is fair to expect that a large variety of concepts will be tested (even concepts that you won’t find mentioned in the books — life sucks!).

Also, bear in mind that a good 30% of these 40–43 questions are going to be particularly tricky, with at least two very similar options, so that you will need to be extremely sure about the syntax. But remember: worst-case scenario you can always consult the documentation (that brings us back to point #1).

Now it’s time for some quizzes!

Question # 1

Given a dataframe df, select the code that returns its number of rows:A. df.take('all')

B. df.collect()

C. df.show()

D. df.count() --> CORRECT

E. df.numRows()

The correct answer is D as df.count() actually returns the number of rows in a DataFrame as you can see in the documentation. This was a warm-up questions, but don’t forget about it as you could find something similar.

Question # 2

Given a DataFrame df that includes a number of columns among which a column named quantity and a column named price, complete the code below such that it will create a DataFrame including all the original columns and a new column revenue defined as quantity*price:df._1_(_2_ , _3_)A. withColumnRenamed, "revenue", expr("quantity*price")

B. withColumn, revenue, expr("quantity*price")

C. withColumn, "revenue", expr("quantity*price") --> CORRECT

D. withColumn, expr("quantity*price"), "revenue"

E. withColumnRenamed, "revenue", col("quantity")*col("price")

The correct answer is C as the code should be:

df.withColumn("revenue", expr("quantity*price"))

You will be asked at least 2–3 questions that involve adding a new column to a DF or renaming an existing one, so learn the syntax of withColumn() and withColumnRenamed() very well.

Question # 3

# Given a DataFrame df that has some null values in the column created_date, complete the code below such that it will sort rows in ascending order based on the column creted_date with null values appearing last.df._1_(_2_)A. orderBy, asc_nulls_last("created_date")

B. sort, asc_nulls_last("created_date")

C. orderBy, col("created_date").asc_nulls_last() --> CORRECT

D. orderBy, col("created_date"), ascending=True)

E. orderBy, col("created_date").asc()

The correct answer is C as the code should be:

df.orderBy(col("created_date").asc_null_last())

but also df.orderBy(df.created_date.asc_null_last()) would work.

In effect, like for asc() in answer E, asc_null_last() does not take any argument, but is applied to column to return a sort expression based on ascending order of the column, and null values appear after non-null values.

Question # 4

Which one of the following commands does NOT trigger an eager evaluation?A. df.collect()

B. df.take()

C. df.show()

D. df.saveAsTable()

E. df.join() --> CORRECT

The correct answer is E as in Apache Spark all transformations are evaluated lazily and all the actions are evaluated eagerly. In this case, the only command that will be evaluated lazily is df.join() .

Below you find some additional transformations and actions that often appear in similar questions:

Transformations Actions

orderBy() show()

groupBy() take()

filter() count()

select() collect()

join() save()

limit() foreach()

map(), flatMap() first()

sort() count(), countByValue()

printSchema() reduce()

cache()Question # 5

Which of the following statements are NOT true for broadcast variables ?A. Broadcast variables are shared, immutable variables that are cached on every machine in the cluster instead of being serialized with every single task.B. A custom broadcast class can be defined by extending org.apache.spark.utilbroadcastV2 in Java or Scala or pyspark.Accumulatorparams in Python. --> CORRECTC. It is a way of updating a value inside a variety of transformations and propagating that value to the driver node in an efficient and fault-tolerant way.--> CORRECTD. It provides a mutable variable that Spark cluster can safely update on a per-row basis. --> CORRECTE. The canonical use case is to pass around a small table that does fit in memory on executors.

The correct options are B, C and D as these are characteristics of accumulators (the alternative type of distributed shared variable).

For the exam remember that broadcast variables are immutable and lazily replicated across all nodes in the cluster when an action is triggered. Broadcast variables are efficient at scale, as they avoid the cost of serializing data for every task. They can be used in the context of RDDs or Structured APIs.

Question # 6

The code below should return a new DataFrame with 50 percent of random records from DataFrame df without replacement. Choose the response that correctly fills in the numbered blanks within the code block to complete this task.df._1_(_2_,_3_,_4_)A. sample, False, 0.5, 5 --> CORRECT

B. random, False, 0.5, 5

C. sample, False, 5, 25

D. sample, False, 50, 5

E. sample, withoutReplacement, 0.5, 5

The correct answer is A as the code block should bedf.sample(False, 0.5, 5) in fact the correct syntax for sample() is:

df.sample(withReplacement, fraction, seed)

In this case the seed is a random number and not really relevant for the answer. You should just remember that it is the last in the order.

Question # 7

Which of the following DataFrame commands will NOT generate a shuffle of data from each executor across the cluster?A. df.map() --> CORRECT

B. df.collect()

C. df.orderBy()

D. df.repartition()

E. df.distinct()

F. df.join()

The correct answer is A as map() is the only narrow transformation in the list.

In particular, transformations can be classified as having either narrow dependencies or wide dependencies. Any transformation where a single output partition can be computed from a single input partition is a narrow transformation. For example, filter(), contains() and map() represent narrow transformations because they can operate on a single partition and produce the resulting output partition without any exchange of data.

Below you find a list including a number of narrow and wide transformations, useful to revise just before the exam:

WIDE TRANSORM NARROW TRANSFORM

orderBy() filter()

repartition() contains()

distinct() map()

collect() flatMap()

cartesian() MapPartition()

intersection() sample()

reduceByKey() union()

groupByKey() coalesce() --> when numPartitions is reduced

groupBy() drop()

join() cache()Question # 8

When Spark runs in Cluster Mode, which of the following statements about nodes is correct ?A. There is one single worker node that contains the Spark driver and all the executors.B. The Spark Driver runs in a worker node inside the cluster. --> CORRECTC. There is always more than one worker node.D. There are less executors than total number of worker nodes.E. Each executor is a running JVM inside of a cluster manager node.

The correct answer is B as in Cluster Mode, the cluster manager launches the driver process on a worker node inside the cluster, in addition to the executor processes. This means that the cluster manager is responsible for maintaining all Spark worker nodes. Therefore, the cluster manager places the driver on a worker node and the executors on separate worker nodes.

Question # 9

The DataFrame df includes a time string column named timestamp_1. Which is the correct syntax that creates a new DataFrame df1 that is just made by the time string field converted to a unix timestamp?A. df1 = df.select(unix_timestamp(col("timestamp_1"),"MM-dd-yyyy HH:mm:ss").as("timestamp_1"))B. df1 = df.select(unix_timestamp(col("timestamp_1"),"MM-dd-yyyy HH:mm:ss", "America/Los Angeles").alias("timestamp_1"))C. df1 = df.select(unix_timestamp(col("timestamp_1"),"America/Los Angeles").alias("timestamp_1"))D. df1 = df.select(unixTimestamp(col("timestamp_1"),"America/Los Angeles").alias("timestamp_1"))E. df1 = df.select(unix_timestamp(col("timestamp_1"),"MM-dd-yyyy HH:mm:ss").alias("timestamp_1"))

The correct answer is E as the appropriate syntax for unix_timestamp() is:

unix_timestamp(timestamp, format)

This functions does not include a timezone argument as it is meant to use the default one. Also, in PySpark, the correct method in order to rename a column inside a function is alias() .

Question # 10

If you wanted to:1. Cache a df as SERIALIZED Java objects in the JVM and;

2. If the df does not fit in memory, store the partitions that don’t fit on disk, and read them from there when they’re needed;

3. Replicate each partition on two cluster nodes.which command would you choose ?A. df.persist(StorageLevel.MEMORY_ONLY)

B. df.persist(StorageLevel.MEMORY_AND_DISK_SER)

C. df.cache(StorageLevel.MEMORY_AND_DISK_2_SER)

D. df.cache(StorageLevel.MEMORY_AND_DISK_2_SER)

E. df.persist(StorageLevel.MEMORY_AND_DISK_2_SER) --> CORRECT

The correct answer is E as the right command should be:

df.persist(StorageLevel.MEMORY_AND_DISK_2_SER)

In fact for Spark DataFrames, the cache() command always places data in memory and disk by default ( MEMORY_AND_DISK). On the contrary, the persist() method can take a StorageLevel object to specify exactly where to cache data.

Bear in mind that data is always serialized when stored on disk, whereas you need to specify if you wish to serialize data in memory (example MEMORY_AND_DISK_2_SER).

Conclusion

In this article I shared 10 MCQs (multiple choice questions) you should use to prepare for the Databricks Apache Spark 3.0 Developer Certification. These questions are extremely similar to the ones you are going to bump into in the real exam, hence I hope this will be a valuable study resource for you.

If you found this material useful, feel free to let me know in the comments as I would be happy to write a PART 2 including 10 more quizzes.

For the time being, I will leave you with some other articles on Medium that touch base on more general topics related to the certification.

No comments:

Post a Comment