The full stack Data Scientist is the much coveted poster-child hire for all data roles. This person (essentially a unicorn) has strong business acumen, statistical background, modelling capabilities and data engineering know-hows.

On one hand, the full stack Data Scientist is working with engineering teams to deploy models to production and on the other, distilling complex machine learning ideas to business stakeholders.

I’m still trying to navigate what it means to be this type of mythical Data Scientist. Through this journey, my abilities as a developer and independent thinker grew in parallel to my holistic understanding of the data workflow.

I hope to convey my learnings through this series, which I anticipate will span across 5 instalments. Each part will intersperse my own musings followed by a technical portion relevant to one aspect of the data workflow.

In this article, I will introduce the beginnings of the holistic data workflow and walk through the first step of the data journey: setting up and connecting to a database.

Estimated time for setting up the database: < 30 minutes

The Holistic Workflow Part 1

My first experience in the world of data introduced me to a small sliver of the entire process. Another person had extracted the data and passed that to me in a nicely structured format. The problem was well defined (someone else had done that already) and I was responsible for cleaning the data and fitting a model to quantify that relationship.

In a world with fast automation where Google’s AutoML is quickly surpassing the capabilities of individuals, that type of Data Science role is becoming obsolete. Today’s top dollar Data Scientists are being paid for their critical thinking and not for these typesetter tasks.

Every data product begins with a business problem.

This was one of the most valuable lessons I learned so far. As “scientists”, we have a natural curiosity to do what’s cool and intellectually stimulating. Whenever I read a new paper or learn about a new technique, my first intuition is always to dream up an application where I can realize it in code.

But that’s not the right order of operations. The solution should follow the problem, and not the other way around. At the end of the day, our impact is measured by the lift we drive to the company’s KPIs and not by the novelty of our approach.

Thus, every data product should begin with the identification of a business problem. The business scoping involves a problem quantification (what is the expected impact of my work?) and collaboration with domain experts and non-technical stakeholders to understand the value drivers.

Following the problem identification, we need to retrieve the data.

Sometimes, we are lucky and the data is already nicely captured. Other times, we may need to construct new workflows to obtain this data. While not ideal, manual annotation is not out of the question either.

This step involves familiarity with the datawarehouse/data lake/data marts and the ETL workflows which keeps this data fresh.

The data storage unit is more than a collection of our training data. It also becomes the foundation of our feedback loop (the ideal workflow will write new data points with their ground truths into the same table(s) for the next training process to make the model even smarter) and can also be used to log model performance and help with model version control.

This is also where our practical example will kick in — working and connecting to an actual database. The next article will dive into ETL workflows, orchestrators and the such.

Set up a Postgres Database using Heroku

Heroku is a platform as a service (PaaS) that enables developers to build, run, and operate applications entirely in the cloud.

Heroku is a high level service built on top of AWS with a value proposition based on convenience. Heroku services, including Heroku Postgres, are ridiculously easy to set up. We’ll be using different Heroku services frequently for the rest of this series, so it’s definitely worth creating an account for!

- Create a Heroku account

- (Optional, but recommended) Install the Heroku Command Line Interface

- Go to the dashboard and click

Newto create a new app

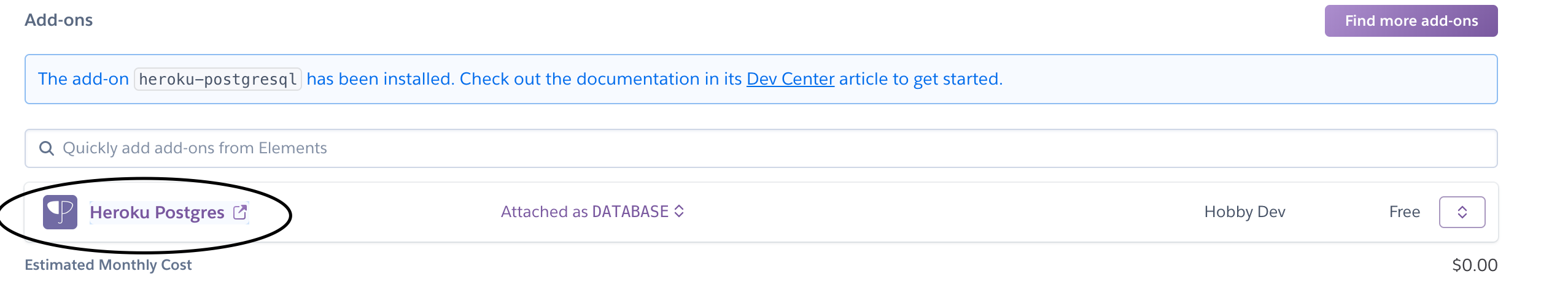

4. Under the

resources tab, search for Heroku Postgresas an add on

I selected

Hobby dev (the free plan) for my database. The free plan limits the database to 10,000 rows, which should be enough for most hobby projects.

Amazing — now we have our Postgres database!

Connecting to the Database using Python

We can connect to the database using Python or from a SQL GUI (e.g. pgAdmin). The SQL GUI is easier to use for playing around with the database and testing queries, but does not actually fit within our workflow. Possibly in a later article, I will go through setting up the SQL editor.

Python Dependencies

- sqlalchemy

- pandas

sqlalchemy is used to create an engine which connects to the database. The SQLAlchemy website proudly mentions that the tool is used by esteemed organizations such as Dropbox, Yelp and reddit.pandas is a staple in every Data Scientist’s toolkit — it supports reading SQL outputs into dataframes as well as writing dataframes into SQL tables.Database URI

The URI (Unique Resource Identifier) uniquely identifies the database and includes all of the credentials required for accessing the DBAPI (database API).

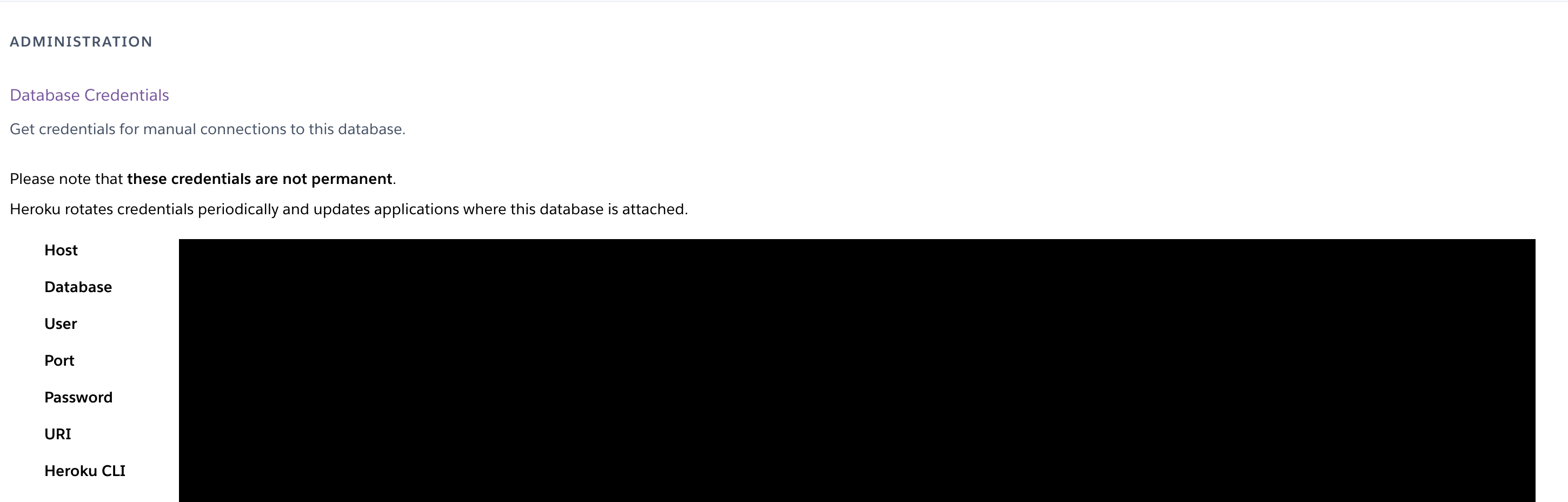

On Heroku, the URI can be found by clicking into the database add-on on the app page.

On the add-on page, click the

settings tab.

The

Database Credentials section should be the first thing on the page (there is a view credential button on the right to show the actual credentials). Like any good administrator, I’ve kept my credentials hidden.

You’ll notice that one of the credentials is the URI itself. This is the string that you need to connect to the database. The string is constructed using the host, database, user, port, password.

The format of the URI string is:

postgresql://{user}:{password}@{host}:{port}/{schema}

The first term corresponds to the SQL dialect — since our database is a Postgres database, we specify

postgresql.

Most SQL GUIs will require typing in each credential separately and not as a part of the URI.

Bringing in the Python

In a few lines of Python, we can use

sqlalchemy to create the engine and establish the connection. We’ll use pandas to work with tables in the connection.import pandas as pd from sqlalchemy import create_engineURI = REDACTEDengine = create_engine(URI)# Execute a SQL query using pandas df = pd.read_sql_query('select 1', con=engine)# Write a pandas dataframe into the database df.to_sql('table_name', con=engine)

That’s 1 of 10,000 rows used!

Note that Heroku limits the number of connections to 20 and that this simple exercise up one. After closing the Python session, the connection to the database gets freed up.

We can modify the code to close the connection after we are done to be mindful about resources.

engine = create_engine(URI)conn = engine.connect()# Execute a SQL query using pandas df = pd.read_sql_query('select 1', con=conn)# Write a pandas dataframe into the database df.to_sql('table_name2', con=conn)conn.close()engine.dispose()

That’s all for today! In this article, we set up a Postgres database and connected to it via Python. This is essentially the foundation of every data warehouse — a myriad of connections to each service’s DBAPI.

Stay tuned for next time :-)

2 comments:

That was a great post. very useful.

also, check Full-stack training in Nagpur

Great post, keep sharing Mern Stack Course in Pune

Post a Comment