as previously published on my blog.

Recently, I started studying reinforcement learning and was fascinated by its potential. In this post, we will briefly discuss two terms, Q-Learning and the OpenAI gym library. Finally, we will implement a simple Q-Learning application on one of the gym environments.

Let us start with Q-Learning.

What is Q-Learning?

Q-Learning is an algorithm designed to find the best action to take in a given state. Imagine a child placed at a random spot with a bar of chocolate lying somewhere nearby. The child will try to stand and crawl, fail many times, and ultimately get the chocolate. A q-learning algorithm does something similar. An agent is placed in surroundings completely unknown to it. It takes some random actions, receives a reward or punishment for them, and learns from that to make better decisions.

You may be wondering whether this is the same thing as supervised learning. The answer is no. Reinforcement learning is evaluative, whereas supervised learning is instructive. In supervised learning, weights are updated using pre-defined labels so that the model stops predicting the wrong class. In reinforcement learning, no labels are given: the agent tries out actions, may keep choosing a poor action for as long as it appears to yield a higher reward, and switches to a better one once it discovers it.

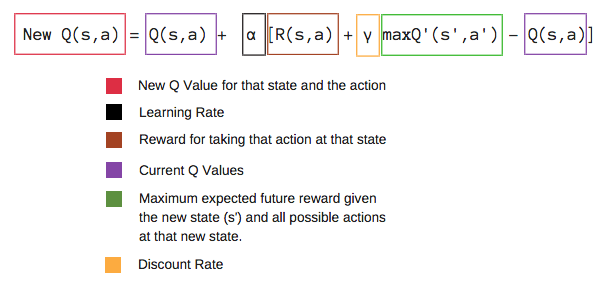

Mathematically, it can be defined as follows:
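Written out with the symbols explained in this post (a reconstruction that agrees with the update line in the code further down), the update rule is:

```latex
Q(s, a) \leftarrow (1 - \alpha)\, Q(s, a) + \alpha \left( R(s, a) + \gamma \max_{a'} Q'(s', a') \right)
```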

The above equation tells us how we update a q-table, so we first need to understand what a q-table is. A q-table can be thought of as a matrix holding one value for each {state, action} pair. When in a particular state, the agent takes the action with the maximum value. How to initialise the q-table is a heuristic choice, just as it is for neural-network weights. We then update the values of the q-table (the q-values) using the equation given above.
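To make the idea of a q-table concrete, here is a minimal sketch with made-up numbers. The state names, the values and the helper `best_action` are illustrative assumptions and are not part of the MountainCar code later in this post.

```python
# A toy q-table: one row per state, one value per action.
# All numbers here are invented purely for illustration.
q_table = {
    "s0": [-1.5, -0.2, -0.9],  # q-values for actions 0, 1, 2 in state s0
    "s1": [-0.4, -1.1, -0.7],  # q-values for actions 0, 1, 2 in state s1
}

def best_action(table, state):
    """Return the index of the action with the highest q-value in `state`."""
    values = table[state]
    return max(range(len(values)), key=lambda a: values[a])

print(best_action(q_table, "s0"))  # 1
print(best_action(q_table, "s1"))  # 0
```

The code later in this post stores the same kind of table as a NumPy array and picks the action with `np.argmax`.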

Alpha is the learning rate, with a value between 0 and 1. The discount factor gamma is also a value between 0 and 1, but it is typically kept high, as it determines how strongly the q-values of future decisions affect the current q-value. R(s, a) is the reward the agent gets for taking action a while in state s. maxQ’(s’, a’) is the maximum q-value over all possible actions a’ in the new state s’, that is, the best future return the agent currently expects.
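As a worked example of these quantities, the sketch below applies a single update by hand. The function name `q_update` and the input numbers are assumptions chosen for illustration; the default alpha and gamma match the values used in the code later in this post.

```python
def q_update(current_q, reward, max_future_q, alpha=0.2, gamma=0.9):
    """One Q-learning update: blend the old value with the new target."""
    target = reward + gamma * max_future_q  # reward now plus discounted best future value
    return (1 - alpha) * current_q + alpha * target

# Old q-value -1.0, step reward -1.0, best q-value in the next state -0.5.
new_q = q_update(current_q=-1.0, reward=-1.0, max_future_q=-0.5)
print(round(new_q, 3))  # -1.09
```

With alpha set to 0 the old value would be kept unchanged, and with alpha set to 1 it would be replaced by the target entirely.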

Using Gym for Q-Learning

Now, let us talk about gym. It is a library provided by OpenAI that simulates various environments and renders them, which makes it easy to visualise our q-learning algorithm.

It can be installed by running the following command:

$ pip install gym

You must ensure that matplotlib is also installed in your system. If not, you can do it by running the command given below:

$ pip install matplotlib

We will be using the MountainCar-v0 environment in our code, as it is pretty simple to understand and has only 3 possible actions:

0 - the car accelerates to the left

1 - the car does not accelerate

2 - the car accelerates to the right

Our goal is to make the car learn to reach the flag. In this problem, the car receives a reward of -1 on every step until it reaches the flag, and the code below sets the q-value of the final, goal-reaching step to 0.

Before writing the code, let us understand some gym commands.

1. gym.make –> used for creating an environment

2. environment.observation_space –> describes the observations the agent can receive, including their lower and upper bounds (for MountainCar-v0, the car's position and velocity)

3. environment.action_space –> all the possible actions for the agent

4. environment.step –> takes an action as input and returns the new state, the reward, the boolean done and an info dictionary

5. environment.render –> draws the current state of the environment

Note: Environments like this one have an upper limit on the number of steps per episode, so even if the agent never reaches its goal, the episode ends once that limit is hit and done becomes True. In MountainCar-v0, the maximum number of steps is 200.
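The reset/step loop and the step limit can be sketched without installing gym. `ToyEnv` below is a hypothetical stand-in, not a gym class: it only mimics the shape of the classic `reset()` and `step()` calls described above and ends every episode after 200 steps.

```python
class ToyEnv:
    """Hypothetical stand-in that mimics the classic reset()/step() shape."""
    max_steps = 200  # same cap as MountainCar-v0

    def reset(self):
        self.steps = 0
        return 0  # initial observation

    def step(self, action):
        self.steps += 1
        observation = self.steps              # dummy observation
        reward = -1.0                         # -1 per step
        done = self.steps >= self.max_steps   # step limit reached
        return observation, reward, done, {}

env = ToyEnv()
state = env.reset()
done = False
total_reward = 0.0
while not done:
    state, reward, done, info = env.step(0)  # always take action 0
    total_reward += reward

print(env.steps, total_reward)  # 200 -200.0
```

An agent that never reaches its goal therefore collects a total reward of -200 per episode, which is the baseline the training has to improve on.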

We will train our model for a number of episodes, that is, the number of trials the agent makes, and update the q-table after each step it takes.

The last thing to note is that the observation space given by the environment is continuous, so we need to discretize it into a finite number of bins before we can index and update our q-table.
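As a rough, standalone illustration of the discretization step, the sketch below bins a (position, velocity) pair into a 30x30 grid. The bounds are hard-coded as an assumption (they are MountainCar-v0's position and velocity limits) so that it runs without gym, and the name `discretize` is made up for this example.

```python
# Bounds of the MountainCar-v0 observation: (position, velocity).
# Hard-coded here as an assumption so the sketch runs without gym.
LOW = (-1.2, -0.07)
HIGH = (0.6, 0.07)
BINS = (30, 30)

def discretize(state):
    """Map a continuous (position, velocity) pair to a pair of bin indices."""
    indices = []
    for value, low, high, bins in zip(state, LOW, HIGH, BINS):
        width = (high - low) / bins                   # size of one bin
        index = int((value - low) / width)            # which bin the value falls in
        indices.append(min(max(index, 0), bins - 1))  # keep the index inside the table
    return tuple(indices)

print(discretize((-1.2, -0.07)))   # (0, 0)
print(discretize((0.6, 0.07)))     # (29, 29)
print(discretize((-0.27, 0.002)))  # (15, 15)
```

The clamping in the last line of the loop matters at the upper bound, where the plain division would give index 30, one past the end of a 30-bin axis.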

Let us dive into the coding part now:

    import numpy as np
    import gym

    # Written against the classic gym API (gym < 0.26), where reset() returns
    # only the observation and step() returns four values.
    env = gym.make("MountainCar-v0")
    num_actions = env.action_space.n

    # Number of bins per observation dimension (position, velocity).
    # You can also use a rectangular grid; here it is [30, 30].
    dis_obs_space_size = [30] * len(env.observation_space.high)
    dis_obs_space_winsize = (env.observation_space.high - env.observation_space.low) / dis_obs_space_size

    learning_rate = 0.2
    episodes = 5000
    gamma = 0.9

    def cont2Discrete(state):
        # Convert a continuous observation into a tuple of bin indices.
        dstate = (state - env.observation_space.low) / dis_obs_space_winsize
        # np.int was removed in NumPy 1.24, so use the built-in int. Clipping keeps
        # an observation that sits exactly on the upper bound inside the table.
        dstate = np.clip(dstate.astype(int), 0, np.array(dis_obs_space_size) - 1)
        return tuple(dstate)

    # A 3-D array: 30 x 30 states x 3 actions, initialised with random values.
    q_table = np.random.uniform(low=-2, high=0, size=(dis_obs_space_size + [num_actions]))

    show_every = 1000

    for eps in range(episodes):
        done = False
        show = (eps % show_every == 0)  # render every show_every-th episode
        dstate = cont2Discrete(env.reset())

        while not done:
            # Greedy action: the one with the highest q-value in this state.
            action = np.argmax(q_table[dstate])
            new_state, reward, done, _ = env.step(action)
            new_dstate = cont2Discrete(new_state)

            if not done:
                current_qval = q_table[dstate + (action,)]
                max_future_qval = np.max(q_table[new_dstate])
                new_qval = (1 - learning_rate) * current_qval + learning_rate * (reward + gamma * max_future_qval)
                q_table[dstate + (action,)] = new_qval
            elif new_state[0] >= env.goal_position:
                q_table[dstate + (action,)] = 0  # 0 is the reward

            dstate = new_dstate

            if show:
                env.render()

    env.close()

I will go through the code quickly:

Firstly, we created a gym environment. Then we discretized the observation space into a 30x30 grid. Each episode starts from a random state obtained from env.reset. After every step, we check whether the episode has ended: if it has not, we update the q-value according to the equation given above, and if the car has reached the goal position, we set the q-value to zero. Every 1000 episodes, we render the car's movement.

I trained the model for 5000 episodes. The agent learned to reach the target somewhere between episodes 1000 and 2000. The quicker it reaches the flag, the better the model has been trained.

originally published on my blog.

In my previous blog post, I went through training an agent for the mountain car environment provided by the gym library. But what if we need to train on an environment that is not in gym? Sometimes we will need to create our own environments. This post is all about creating a custom environment from scratch.

The environment which we will be creating here will be a grid containing two policemen, one thief and one bag of gold. The goal of the thief is to get the bag without being caught by the policemen.

All the parameters for training the model will be similar to those of the earlier post, except for the code specific to the custom environment.

Now, let us write a Python class for the objects in our environment, which we will call Grid.

    import numpy as np
    from PIL import Image
    import cv2
    import pickle
    import time

    SIZE = 10

    class Grid:
        def __init__(self, size=SIZE):
            # Each object starts at a random cell of the size x size grid.
            self.size = size
            self.x = np.random.randint(0, size)
            self.y = np.random.randint(0, size)

        def subtract(self, other):
            # Relative position of this object with respect to another one.
            return (self.x - other.x, self.y - other.y)

        def isequal(self, other):
            return self.x == other.x and self.y == other.y

        def action(self, choice):
            '''
            Gives us 8 total movement options. (0,1,2,3,4,5,6,7)
            '''
            if choice == 0:
                self.move(x=1, y=1)
            elif choice == 1:
                self.move(x=-1, y=-1)
            elif choice == 2:
                self.move(x=-1, y=1)
            elif choice == 3:
                self.move(x=1, y=-1)
            elif choice == 4:
                self.move(x=1, y=0)
            elif choice == 5:
                self.move(x=0, y=1)
            elif choice == 6:
                self.move(x=-1, y=0)
            elif choice == 7:
                self.move(x=0, y=-1)

        def move(self, x=None, y=None):
            # A missing value means "move randomly along this axis". Testing for
            # None (rather than falsiness) lets an explicit 0 mean "stay put",
            # which actions 4 to 7 rely on.
            if x is None:
                self.x += np.random.randint(-1, 2)
            else:
                self.x += x
            if y is None:
                self.y += np.random.randint(-1, 2)
            else:
                self.y += y

            # Keep the object inside the grid.
            self.x = min(max(self.x, 0), self.size - 1)
            self.y = min(max(self.y, 0), self.size - 1)

Now that we have defined our Grid class, we will create one Grid object for each of the policemen, the thief and the bag of gold. One more thing to note is that we will give the thief a penalty when it touches the police and a positive reward when it touches the gold bag. In this case, our observation will be the difference between the coordinates of the thief and those of each policeman and the gold bag. We will also assign colours to the police, thief and gold bag.
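Before the full training code, here is a small standalone sketch of the observation and reward just described. It uses plain tuples instead of Grid objects, and the positions and the helper names `offset` and `reward_for` are assumptions made for illustration; the reward values are the ones used in the training code.

```python
# Hypothetical positions on a 10x10 grid, given as (x, y).
thief = (2, 3)
police1 = (5, 3)
police2 = (0, 9)
gold = (2, 4)

def offset(a, b):
    """Coordinate difference a - b, as used for the observation."""
    return (a[0] - b[0], a[1] - b[1])

def reward_for(thief, policemen, gold,
               move_penalty=-1, police_penalty=-100, gold_reward=50):
    """Penalty for being caught, reward for the gold, small cost otherwise."""
    if thief in policemen:
        return police_penalty
    if thief == gold:
        return gold_reward
    return move_penalty

# The state is the tuple of offsets of both policemen and the gold from the thief.
state = (offset(police1, thief), offset(police2, thief), offset(gold, thief))
print(state)                                        # ((3, 0), (-2, 6), (0, 1))
print(reward_for(thief, [police1, police2], gold))  # -1
```

Using offsets rather than absolute positions means the same q-table entry is shared by every situation in which the policemen and the gold sit in the same places relative to the thief.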

Let us write the code for training our game.

    episodes = 10000
    move_penalty = -1
    police_penalty = -100
    gold_penalty = 50  # positive, so this is really the reward for reaching the gold
    show_every = 1000
    learning_rate = 0.2
    gamma = 0.9

    # for coloring
    thief_key = 1
    police_key = 2
    gold_key = 3

    # Colour per object. cv2.imshow interprets the channels as BGR,
    # so (255, 0, 0) is displayed as blue. The dictionary is named colors
    # because the loops below use d as a loop variable.
    colors = {1: (255, 0, 0), 2: (0, 255, 0), 3: (0, 0, 255)}

    # One entry per combination of (police1, police2, gold) offsets from the thief,
    # each holding a random initial q-value for the 8 actions.
    q_table = {}
    for a in range(-SIZE+1, SIZE):
        for b in range(-SIZE+1, SIZE):
            for c in range(-SIZE+1, SIZE):
                for d in range(-SIZE+1, SIZE):
                    for e in range(-SIZE+1, SIZE):
                        for f in range(-SIZE+1, SIZE):
                            q_table[((a, b), (c, d), (e, f))] = [np.random.uniform(-8, 0) for i in range(8)]

    for eps in range(episodes):
        police1 = Grid()
        police2 = Grid()
        gold = Grid()
        thief = Grid()
        show = (eps % show_every == 0)  # display every show_every-th episode

        for i in range(200):
            dstate = (police1.subtract(thief), police2.subtract(thief), gold.subtract(thief))
            # Actions are chosen uniformly at random in this version.
            action = np.random.randint(0, 8)
            thief.action(action)

            if(thief.x==police1.x and thief.y==police1.y):
                reward = police_penalty
            elif(thief.x==police2.x and thief.y==police2.y):
                reward = police_penalty
            elif(thief.x==gold.x and thief.y==gold.y):
                reward = gold_penalty
            else:
                reward = move_penalty

            new_dstate = (police1.subtract(thief), police2.subtract(thief), gold.subtract(thief))
            max_future_qval = np.max(q_table[new_dstate])
            current_qval = q_table[dstate][action]

            if reward == gold_penalty:
                new_qval = gold_penalty
            else:
                new_qval = (1 - learning_rate) * current_qval + learning_rate * (reward + gamma * max_future_qval)
            q_table[dstate][action] = new_qval

            if(show):
                env = np.zeros((SIZE, SIZE, 3), dtype=np.uint8)  # 3 is the number of colour channels
                env[gold.x][gold.y] = colors[gold_key]
                env[thief.x][thief.y] = colors[thief_key]
                env[police1.x][police1.y] = colors[police_key]
                env[police2.x][police2.y] = colors[police_key]
                image = Image.fromarray(env, 'RGB')
                image = image.resize((300, 300))
                cv2.imshow("ENV", np.array(image))
                if reward == gold_penalty or reward == police_penalty:
                    # Pause for 500 ms on the final frame of the episode.
                    if cv2.waitKey(500) & 0xFF == ord('q'):
                        break
                else:
                    if cv2.waitKey(1) & 0xFF == ord('q'):
                        break

            # The episode ends when the thief is caught or reaches the gold.
            if reward == gold_penalty or reward == police_penalty:
                break

I will quickly go through the code once again. Initially, I defined the different penalties, the number of episodes, the learning rate and the discount factor. Then I created a q-table in the form of a dictionary, with random values for all 8 possible actions in each state. Then I wrote the training code, in which the thief takes a maximum of 200 steps per episode and stops earlier if it reaches the gold bag or is caught by a policeman. Every 1000 episodes, I show the path of the thief using cv2 and the PIL image library. One important thing to note is what happens when the thief reaches one of the policemen or the gold bag: the display pauses briefly on that frame, and we then break out of the loop because the episode is over.

Note: The environment we just created requires a q-table with one entry for every combination of offsets, which will be huge in memory, and creating the q-table itself may take a long time. The recommended solution is to reduce the number of policemen or the grid size so that you can run and visualise it on an ordinary computer. If you already have a q-table, it is recommended to save it as a binary pickle file and load it when training the model.
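To get a feel for the sizes involved and for the pickle round trip, here is a minimal sketch. The helper `q_table_entries`, the tiny example table and the file name `q_table.pickle` are assumptions made for illustration.

```python
import os
import pickle
import tempfile

def q_table_entries(size, n_others):
    """Number of states when each of `n_others` offsets ranges over
    -(size-1)..(size-1) in both x and y."""
    offsets_per_axis = 2 * size - 1
    return offsets_per_axis ** (2 * n_others)

print(q_table_entries(10, 3))  # 47045881 states: 2 policemen + gold on a 10x10 grid
print(q_table_entries(5, 2))   # 6561 states: 1 policeman + gold on a 5x5 grid

# Saving and reloading a (tiny, made-up) q-table with pickle.
q_table = {((1, 0), (0, -1)): [0.0] * 8}
path = os.path.join(tempfile.mkdtemp(), "q_table.pickle")
with open(path, "wb") as f:
    pickle.dump(q_table, f)
with open(path, "rb") as f:
    restored = pickle.load(f)

print(restored == q_table)  # True
```

Each state also stores 8 q-values, so the full 10x10 setup with two policemen holds several hundred million numbers, which is why the smaller configuration is far easier to work with.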

I have trained the model with 1 policeman on a 5x5 grid, and the results, shown every 100 episodes, are in the video below:

So in this video, the blue colour box is the thief, green is the gold and red is the police. Whenever you see a combination of red and blue but no green, this means the thief has reached the gold bag.

Hope the second blog in the series was fun too. Keep Learning, Keep Sharing 😊

