Posted by Laurence Moroney, Developer Advocate

Thanks to everyone who joined our virtual TensorFlow Dev Summit 2020 livestream! While we couldn’t meet in person, we hope we were able to make the event more accessible than ever.

We’re recapping all the new updates we shared in this blog post. You can find the recording of the keynote and talks on the TensorFlow YouTube channel.

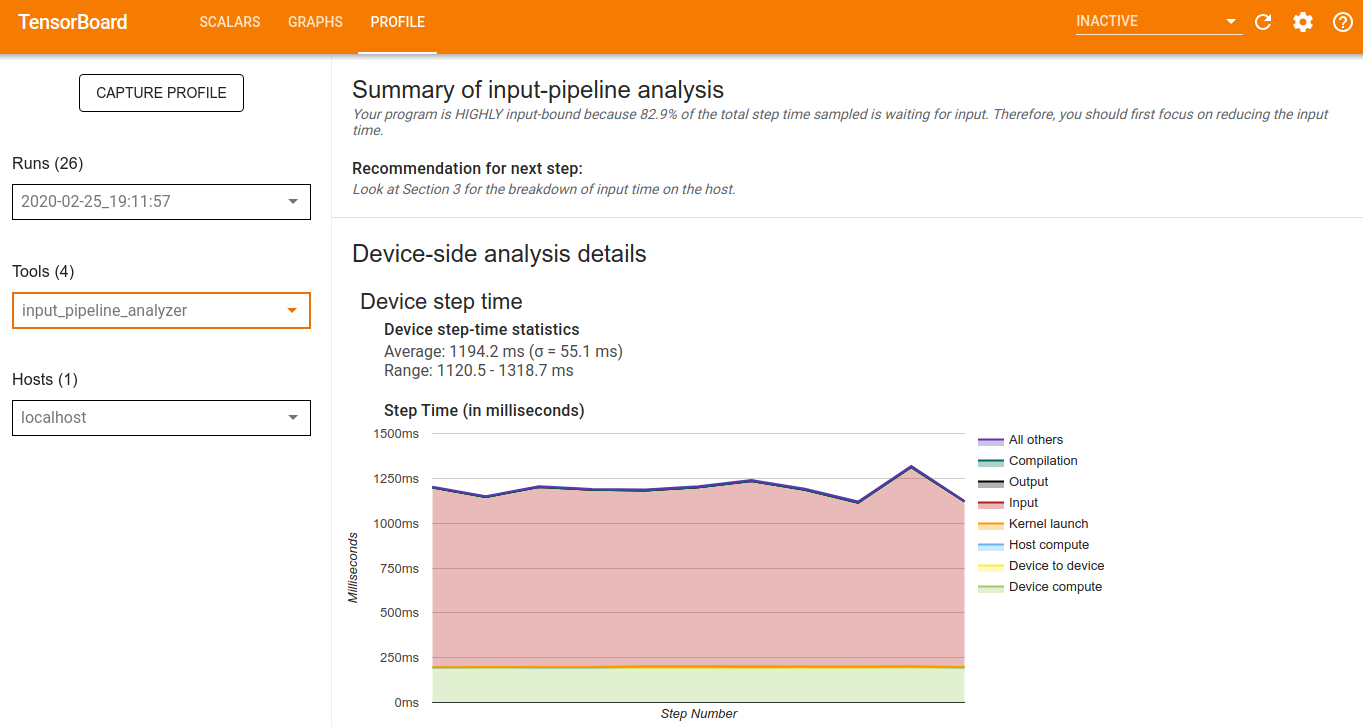

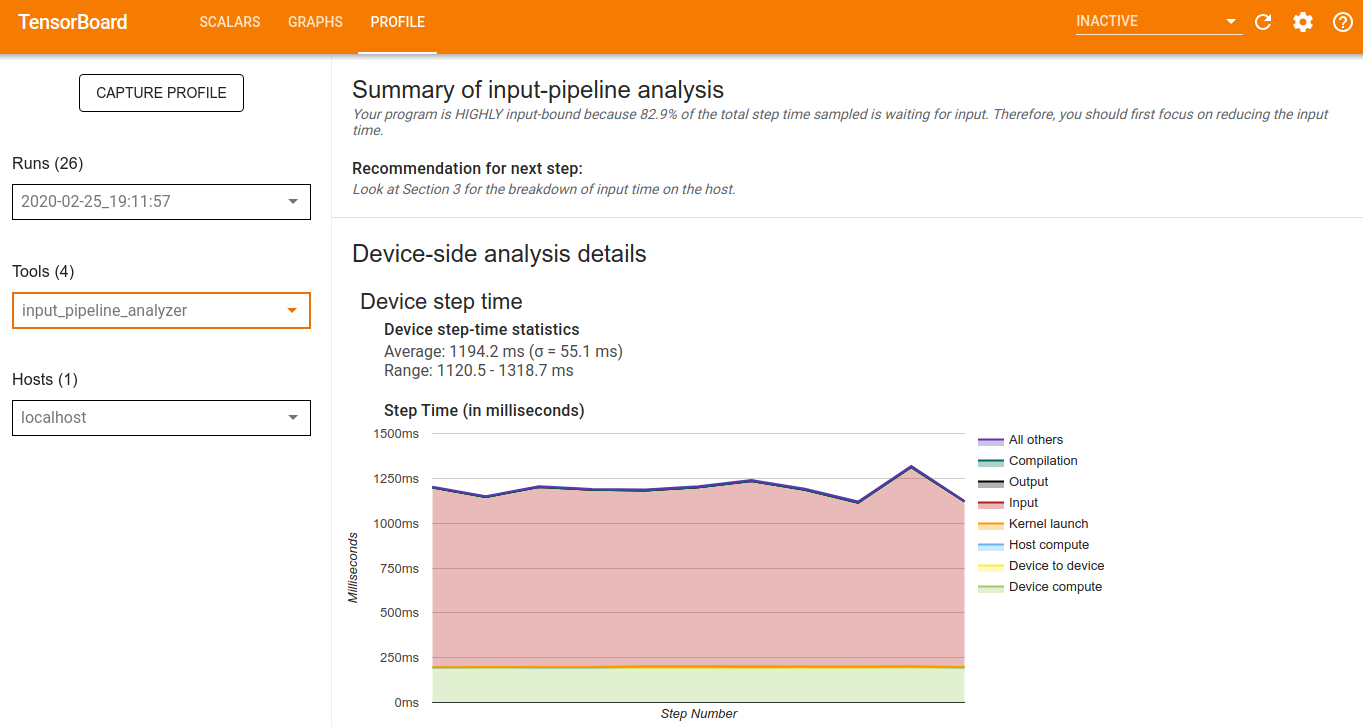

TensorFlow 2.2

After the release of TensorFlow 2.0 at last year’s Developer Summit, we have been working hard with our users and community to bring more enhancements and features, and this week posted a release candidate of TensorFlow 2.2.

In 2.2, we’re building on the momentum of last year. We've been focusing on performance: improving the execution core and making performance easier to measure consistently. We’re also introducing new tools to help measure performance, like the new Performance Profiler. We've also increased the compatibility of the TensorFlow ecosystem, including key libraries like TensorFlow Extended. Lastly, we are keeping the core API the same, only making additive changes, so you can be confident when porting your code from 1.x. See the release notes for more.

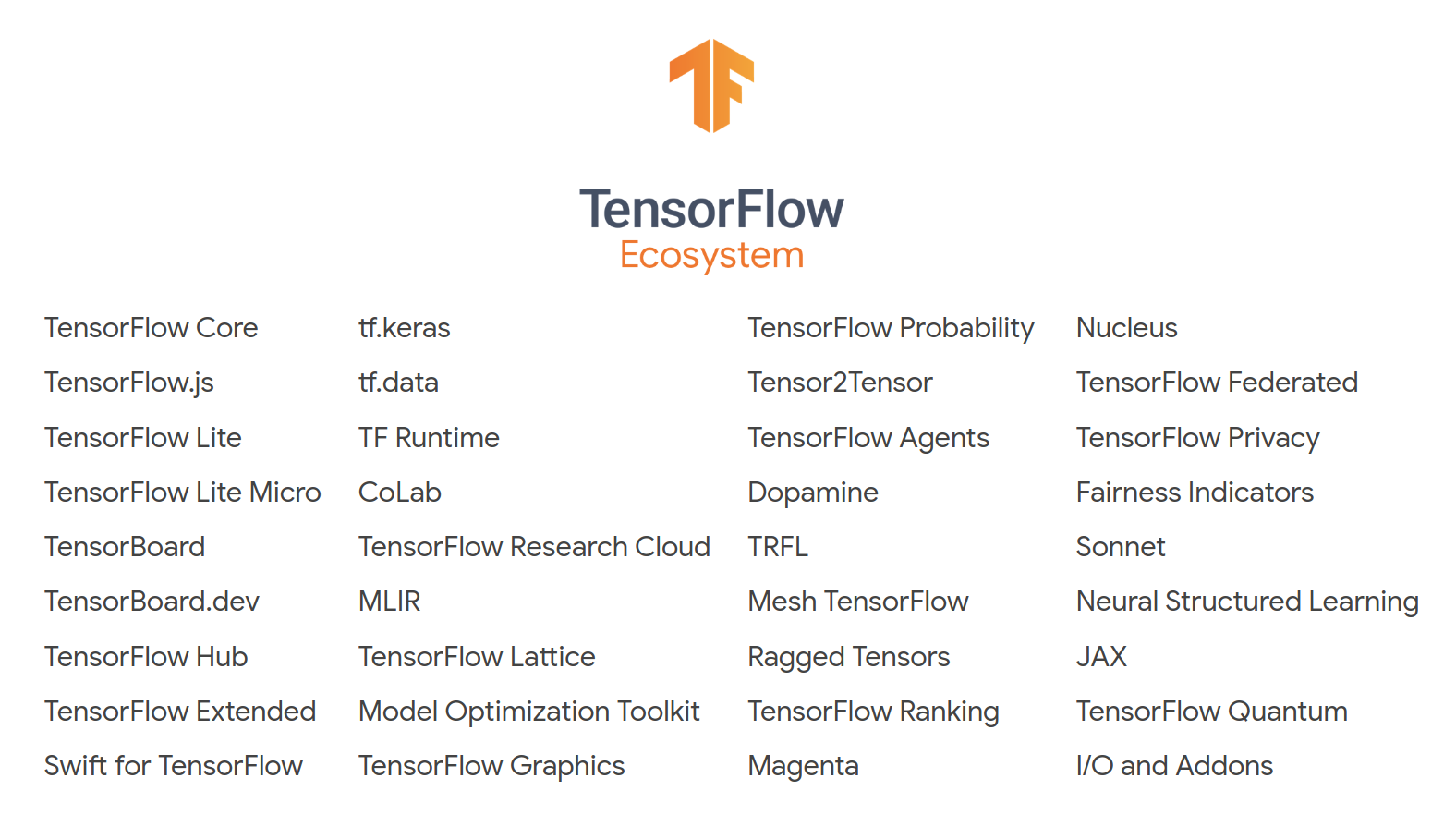

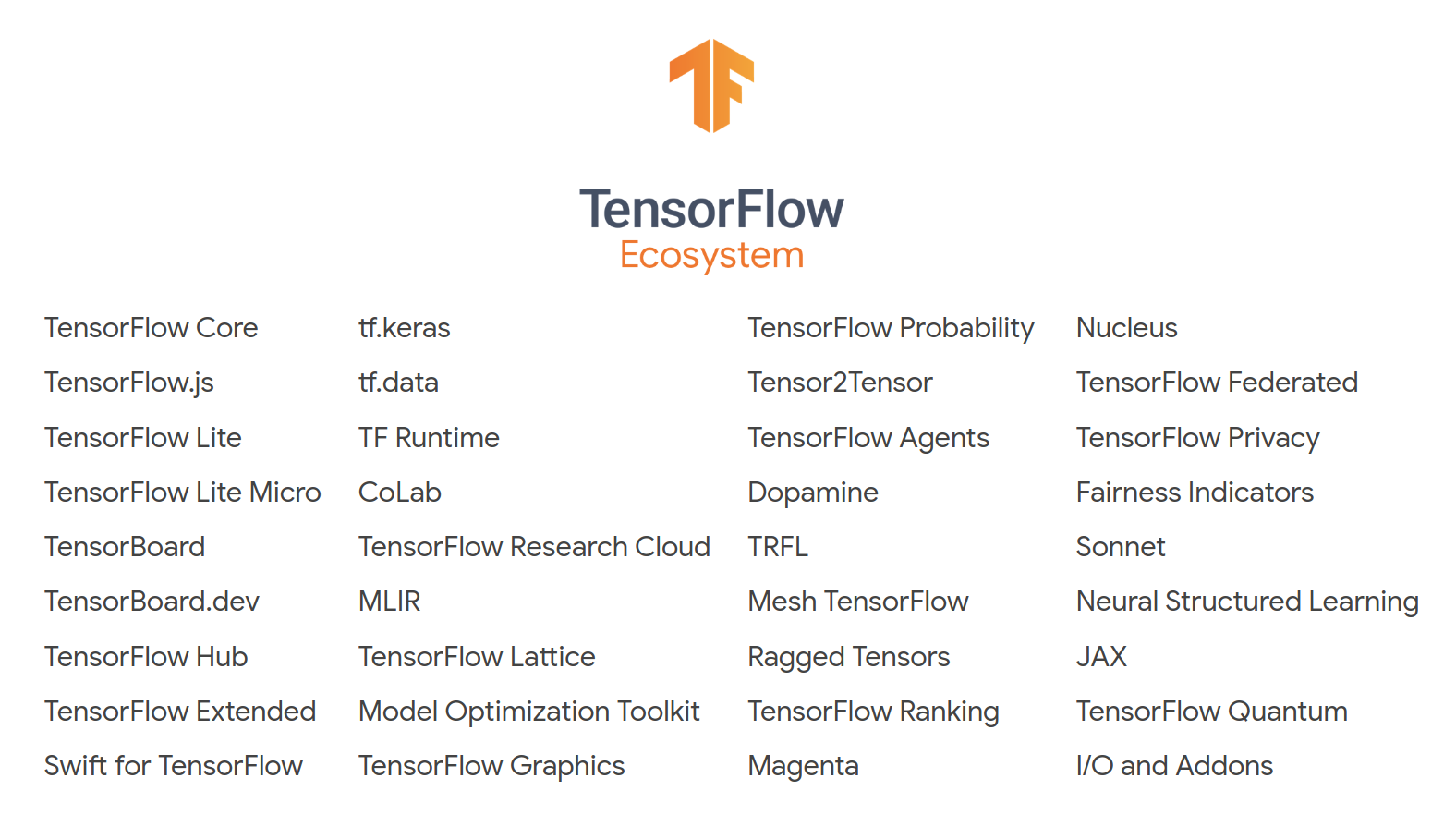

The TensorFlow Ecosystem

TensorFlow 2.2 is just one part of a much bigger and growing ecosystem of libraries and extensions that help you accomplish your machine learning goals. There’s a tool for all kinds of developers!

For researchers, there are libraries for pushing the state of the art of ML. For applied ML engineers or data scientists, you get tools that help your models have real-world impact. Finally, there are libraries in the ecosystem that can help create better AI experiences for your users, from fairness to optimization, from massive scale to tiny hardware.

All of this is underscored by what the TensorFlow community contributes to the ecosystem and our common goals of building AI responsibly.

TensorFlow for Research

TensorFlow is being used to push the state of the art of ML in many different subfields. For example, consider the recently released T5 paper, where we wanted to explore the limits of transfer learning with a unified text-to-text transformer. Here, the T5 model, pretrained on the C4 dataset (available in TensorFlow Datasets), was able to achieve state-of-the-art results on many common NLP benchmarks while also being flexible enough to be fine-tuned to a variety of downstream tasks such as translation, grammar checking, and summarization.

Figure: The T5 model uses the latest in transfer learning to convert every language problem into a text-to-text format.

In addition, we saw hundreds of papers and posters at NeurIPS last year that used TensorFlow.

We’re always looking to improve the experience for researchers, so we’re highlighting some important features.

First, we’ve gotten positive feedback from researchers on TensorBoard.dev, a tool we launched last year that lets you upload and share experiment results by URL. The URL allows for quickly visualizing hyperparameter sweeps. Second, we introduced a new Performance Profiler tool via TensorBoard that provides consistent monitoring of model performance.

Researchers also told us they want to make changes to models quickly and scale their training to accelerated hardware, particularly when managing the extraction, transformation, and loading of data. TensorFlow Datasets, built on the tf.data API, was created with this in mind to give researchers quick access to state-of-the-art datasets. Whether you use these or your own datasets, the underlying TFRecord-based architecture lets you parallelize with tf.distribute and build an input pipeline that makes efficient use of GPU- or TPU-based training infrastructure.
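To make the input-pipeline idea concrete, here is a minimal sketch of a tf.data pipeline feeding a model built under tf.distribute. It is an illustration under stated assumptions, not code from the talks: the synthetic data, the `make_dataset` helper, the tiny model, and the batch sizes are all hypothetical, and a real pipeline would more likely read TFRecord files or a TensorFlow Datasets dataset.

```python
import tensorflow as tf

# Alias that works across TF 2.x releases.
AUTOTUNE = tf.data.experimental.AUTOTUNE


def make_dataset(num_examples=64, batch_size=16):
    """Build a small in-memory tf.data pipeline (synthetic data, hypothetical helper)."""
    features = tf.random.uniform((num_examples, 8))
    labels = tf.random.uniform((num_examples,), maxval=2, dtype=tf.int32)
    ds = tf.data.Dataset.from_tensor_slices((features, labels))
    # Preprocess elements in parallel; AUTOTUNE lets tf.data choose the parallelism.
    ds = ds.map(lambda x, y: (x * 2.0 - 1.0, y), num_parallel_calls=AUTOTUNE)
    ds = ds.shuffle(num_examples).batch(batch_size)
    # Prefetch so input preparation overlaps with training steps.
    return ds.prefetch(AUTOTUNE)


# MirroredStrategy replicates the model across the local GPUs it finds
# and falls back to a single replica when none are available.
strategy = tf.distribute.MirroredStrategy()
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(8,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(2),
    ])
    model.compile(
        optimizer="adam",
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    )

dataset = make_dataset()
history = model.fit(dataset, epochs=1, verbose=0)
```

The same `make_dataset` shape carries over when the source changes: swapping `from_tensor_slices` for a file-based source leaves the `map`, `batch`, and `prefetch` stages untouched.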

Finally, the TensorFlow ecosystem contains add-ons and extensions that can enhance your experimentation and work with your favorite open-source libraries. Extensions like TF Probability and TF Agents work with the latest version of TensorFlow. Experimental libraries like JAX from Google Research are composable with TensorFlow, such as using TensorFlow data pipelines to input data into JAX. For those of you who are really heading towards the cutting edge of computing, check out TensorFlow Quantum, a library for rapid prototyping of hybrid quantum-classical ML models.

Applied ML

We’ve been humbled by the number of companies, all over the globe, of various sizes, that have trusted TensorFlow with their machine learning workloads.

We have heard from users about hyperparameter tuning: Google open-sourced Keras Tuner early last year, and we encourage you to take a look. We're also working on a set of new, lighter-weight preprocessing layers, now in experimental.

In the talk TensorFlow Hub: Models for everyone, Sandeep spoke about how we're continuing to expand TensorFlow Hub. Today we have more than 1,000 models available with documentation and code snippets. You can download, use, retrain, and fine-tune these models, sometimes as easily as just adding them as Keras layers. Some of the publishers on Hub have created custom components to highlight their amazing work, where you can try out a model on your own image or audio clip directly in the browser, all with nothing to install.

To help you build your models, Google Colaboratory is a hosted Python notebook environment that gives you access to GPU and TPU resources that can be used for training TensorFlow models. In addition to the free tier, we recently launched the new Colab Pro service, giving access to faster GPUs, longer runtimes, and more memory for a monthly subscription cost. You can check out some of the benefits in a Colab notebook here. At the Dev Summit, Tim Novikoff gave a fun talk on Making the Most of Colab: Tips and Tricks for TensorFlow users.

We also know that going from a trained model to a business-critical process goes far beyond just creating a model architecture, training it, and testing it. Building for production at scale involves complex interconnected management processes to keep the model running, relevant, and updated. With that in mind, we created TensorFlow Extended (TFX). An example high-level architecture of a production system built using TFX is shown below:

To make it even easier to use TensorFlow in production, we’ve launched Google Cloud AI Platform Pipelines. These are designed to make it easy for you to build end-to-end production pipelines like the one we show above, built on top of Kubeflow, TFX, and Google Cloud. You can use AI Platform Pipelines on GCP with TensorFlow Enterprise, a custom build of TensorFlow with many optimizations and enterprise-grade long-term support. We also have a tutorial to help you use Cloud AI Platform Pipelines with TFX.

Deployment

TensorFlow keeps focusing on making models and other computations run efficiently on every kind of hardware.

For TF Lite, we have a host of new features. We’re adding Android Studio integration, available in the Canary Channel very soon, that will enable simple drag and drop into Android Studio and then automatically generate the Java classes for the TF Lite model with just a few clicks. We've also made it so model authors can provide a metadata spec when creating and converting models, making it easier for users of the model to understand how it works and use it in production. We also have our new Model Maker, which lets developers fine-tune pre-existing models without doing complicated ML. We’re announcing Core ML Delegation through Apple Neural Engine, to accelerate floating point models in the latest iPhones and iPads using TensorFlow Lite. Finally, we continue to focus on performance improvements to provide the fastest execution speeds for your models on mobile phone CPUs, GPUs, DSPs, and NPUs.

With TensorFlow.js, we continue to make it easier than ever for JavaScript developers and web developers to build and use machine learning. We announced several new models for face and hand tracking and NLP that are ready to use in your web or Node.js application. We also launched performance improvements, a new backend, and more seamless integration with TensorFlow 2.0 model training.

Finally, we previewed the new TensorFlow Runtime (TFRT) that speeds up core loops, and will be available in open source soon. We also discussed MLIR, our shared compiler infrastructure that unifies the infrastructure that runs machine learning computations. As a concrete change, in February we released the TF → TF Lite Converter, which should provide better error messages, support for control flow, and a unified quantization workflow. These two features together, TFRT and MLIR, allow us to support a robust selection of hardware and low-level libraries, and make it easy to support more.
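As a rough illustration of the TF → TF Lite conversion path, the sketch below converts a small Keras model with the `tf.lite.TFLiteConverter` API and runs it once with the TF Lite interpreter. The model and the all-zeros input are hypothetical stand-ins, and the sketch does not try to show anything specific to the converter release described above, only the general workflow.

```python
import tensorflow as tf

# A tiny stand-in model (hypothetical; any Keras model could take its place).
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# Convert the Keras model to the TF Lite flatbuffer format.
converter = tf.lite.TFLiteConverter.from_keras_model(model)
# Optional: request the default post-training optimizations.
converter.optimizations = [tf.lite.Optimize.DEFAULT]
tflite_model = converter.convert()

# Load the converted model and run a single inference.
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

interpreter.set_tensor(input_details[0]["index"], tf.zeros((1, 4)).numpy())
interpreter.invoke()
probs = interpreter.get_tensor(output_details[0]["index"])
```

In practice the `tflite_model` bytes would be written to a `.tflite` file and shipped with the mobile or embedded application.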

Community

Of course, community is at the heart of everything we do at TensorFlow. There are so many ways that you can get involved! For example:

- TensorFlow User Groups are a rapidly growing community! In just 7 months since we launched, there are 73 User groups globally, the biggest in Korea with 46,000+ members. After the TensorFlow Roadshows in 2019, we launched the first 2 TFUGs in LATAM, in Brazil and Argentina. We’re always looking to encourage more, so, if you want to form one in your area, please reach out at tfug-help@tensorflow.org!

- We also have a number of Special Interest Groups dedicated to a variety of topics. Our latest addition, on Graphics, will be available at the end of March. To learn more about SIGs and how they work, check out the playbook.

- Finally, if you are a thought leader in machine learning and TensorFlow, look into becoming a Google Developer Expert, who actively support developers, companies, and tech communities by speaking at events, publishing content

Education

If you are a university or other education organization, we have a program to help you create educational content to teach machine learning with TensorFlow. We’ll support you with a variety of resources to help you succeed. We have also published a request for proposals for financial sponsorship to help widen access for communities traditionally underrepresented in AI. Details can be found on the TensorFlow Blog.

If you want to learn TensorFlow, there are a number of options available to you:

- We created a TensorFlow: In Practice specialization at Coursera with deeplearning.ai, giving you an introduction to how to build models for Computer Vision, NLP, Sequence Modelling and more.

- We followed this up with TensorFlow: Data and Deployment, where you can learn how to use TensorFlow.js, TensorFlow Lite, TensorFlow Serving, and more.

- We’ve updated the popular Machine Learning Crash Course for TensorFlow 2.0.

- We've published an Intro to Deep Learning Course at Udacity and also an Introduction to TensorFlow Lite course.

TensorFlow Developer Certificate

We’re excited to launch an assessment of your TensorFlow coding and model creation skills that will lead to an official certificate of your prowess as a TensorFlow Developer! In an effort to widen access to people of diverse backgrounds, experiences, and perspectives, we're offering a limited number of stipends for the educational material and/or the exam cost. Details are now available at tensorflow.org/certificate. You’ll be tested on your ability to create models all the way from simple linear regression through more advanced scenarios such as Computer Vision, NLP and Sequence modelling. The syllabus from the deeplearning.ai TensorFlow: In Practice specialization at Coursera will help you prepare for the assessment. Once you've earned the certificate, you can share it on LinkedIn, GitHub and the new TensorFlow Certificate Network!

Wrap up

As you can see, it’s been a busy year, and a great time to be a TensorFlow Developer! To learn more about TensorFlow, check out tensorflow.org, read the blog, follow us on social, and don’t forget to subscribe to our YouTube Channel! For all the sessions at TensorFlow Dev Summit 2020, check out our playlist on YouTube. For your convenience, here are direct links to each of the sessions:

- Keynote

- Learning to read with TensorFlow and Keras

- TensorFlow Hub: Making Model Discovery Easy

- Collaborative ML with TensorBoard.dev

- TF2.x on Kaggle

- Performance Profiling in TensorFlow 2

- Research with TensorFlow

- Differentiable convex optimization layers

- Scaling TensorFlow data processing with tf.data

- Scaling TensorFlow 2 models to multi-worker GPUs

- Making the most of Colab

- MLIR: Accelerating TF with compilers

- TFRT: A new TensorFlow Runtime

- TFX: Production ML with TensorFlow in 2020

- TensorFlow Enterprise: Productionizing TensorFlow with Google Cloud

- TensorFlow.js: ML for the Web and beyond

- TensorFlow Lite: ML for mobile and IoT devices

- TensorFlow and ML from the trenches: The innovation experience center at JPL

- Getting involved in the TF community

- Responsible AI with TensorFlow

- TensorFlow Quantum: A software platform for hybrid quantum-classical ML

Next post

Introducing the TensorFlow Developer Certificate!

Posted by Alina Shinkarsky, on behalf of the TensorFlow Team

In the AI world today, more and more companies are looking to hire machine learning talent, and simultaneously, an increasing number of students and developers are looking for ways to gain and showcase their ML knowledge with formal recognition. In addition to the courses and learning resources available online, we want to help developers showcase their ML proficiency and help companies hire ML developers to solve challenging problems.

This is why we are excited to launch the TensorFlow Developer Certificate, which provides developers around the world the opportunity to showcase their skills in ML in an increasingly AI-driven global job market. This certificate in TensorFlow development is intended as a foundational certificate for students, developers, and data scientists who want to demonstrate practical machine learning skills through building and training of basic models using TensorFlow. This level one certificate exam tests a developer’s foundational knowledge of integrating machine learning into tools and applications. The certificate program requires an understanding of building basic TensorFlow models using Computer Vision, Sequence modeling, and Natural Language Processing.

Once you pass the exam, you will receive an official TensorFlow Developer Certificate and badge to showcase your skill set and share on your CV and social networks such as LinkedIn. You will also be invited to join our credential network for recruiters seeking entry-level TensorFlow developers. This is only the beginning; as this program scales, we are eager to add certificate programs for more advanced and specialized TensorFlow practitioners.

Learn more about the TensorFlow Developer Certificate on our website, including information on exam criteria, exam cost, and a stipend to ensure taking this certificate exam is accessible.

We look forward to growing this community of TensorFlow certificate recipients!
