Computers. They’re tricky things: some days you can’t get enough of them, other days you have more than you need. You might think you’re just installing a new open source project, but four hours later you’re still wrangling the installation manager. You’ve got a great model, but no framework for building it into an application. Now, let’s reimagine that experience using a tool built specifically for you. Welcome to Amazon SageMaker.

Amazon SageMaker is a fully managed machine learning service from AWS. It decouples your environments for developing, training, and deploying, letting you scale each one separately and optimize your spend and time. Tens of thousands of developers across the world are adopting SageMaker in various ways: some for the end-to-end flow, some to scale up training jobs, and others for the dead simple RESTful API integration. Here I’ll walk you through the fundamental elements of SageMaker, or SageMaker classic, as I call it.

SageMaker Provides a Managed Development Environment

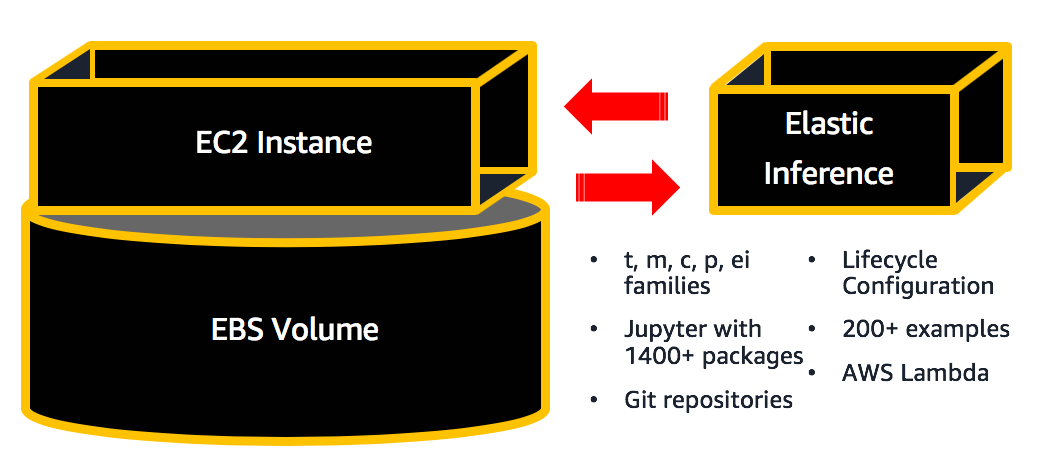

SageMaker starts with a notebook instance: an EC2 instance dedicated to running Jupyter, your environments, and any extra code you need for feature engineering. Notebook instances come preconfigured with your AWS credentials and with tools such as boto3 and the AWS CLI, so you can easily connect to your data in S3, Redshift, RDS, DynamoDB, Aurora, or any other location with just a few lines of code. To extend access from your notebook instance to other AWS resources, just make sure to update the execution role assigned to your notebook instance.
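To give a feel for "a few lines of code," here is a minimal sketch of reading a text file from S3 with boto3. The helper names, bucket, and key are made up for illustration; the only AWS call used is `get_object`. The client is passed in so you can try the helper with a stub before pointing it at real data.

```python
def read_s3_text(s3_client, bucket, key, encoding="utf-8"):
    """Download one S3 object and return its contents as text."""
    response = s3_client.get_object(Bucket=bucket, Key=key)
    return response["Body"].read().decode(encoding)


def default_s3_client():
    """Create an S3 client; on a notebook instance boto3 uses the execution role."""
    import boto3  # imported lazily so read_s3_text can be tested without boto3
    return boto3.client("s3")


# Hypothetical usage on a notebook instance (bucket and key are placeholders):
# text = read_s3_text(default_s3_client(), "my-example-bucket", "data/train.csv")
```

If the call fails with an access error, the execution role most likely lacks read permission on that bucket.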

Notebook Instances on Amazon SageMaker

SageMaker provides fully-managed EC2 instances running Jupyter, with 10+ environments, 1400+ packages, and hundreds of examples.

It’s best to start your notebook on a smaller EC2 instance; the ml.t2.medium is generally a good choice. At the time of writing, it has the lowest hourly price you can get for a notebook instance.

But once you’ve started diving into your feature engineering and realize you actually need a bigger instance or more disk space, you can easily resize the EC2 instance hosting your notebook. You’ll need to turn it off, update the settings, then turn it back on again. The round trip takes about 7 minutes, but it is well worth the payoff.
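The stop, update, start sequence can also be scripted. Below is a rough sketch using the boto3 SageMaker client and its built-in waiters. The function name and arguments are my own, and I am assuming the instance reports "Updating" as soon as the update call returns, so that waiting for "Stopped" a second time is enough before restarting.

```python
def resize_notebook_instance(sm_client, name, instance_type=None, volume_size_gb=None):
    """Stop a notebook instance, apply new settings, and start it again."""
    stopped = sm_client.get_waiter("notebook_instance_stopped")

    # 1. Turn the instance off and wait until it is fully stopped.
    sm_client.stop_notebook_instance(NotebookInstanceName=name)
    stopped.wait(NotebookInstanceName=name)

    # 2. Update only the settings that were requested.
    update = {"NotebookInstanceName": name}
    if instance_type is not None:
        update["InstanceType"] = instance_type
    if volume_size_gb is not None:
        update["VolumeSizeInGB"] = volume_size_gb
    sm_client.update_notebook_instance(**update)
    # Assumption: status goes to "Updating" and then back to "Stopped".
    stopped.wait(NotebookInstanceName=name)

    # 3. Turn it back on and wait until Jupyter is reachable again.
    sm_client.start_notebook_instance(NotebookInstanceName=name)
    sm_client.get_waiter("notebook_instance_in_service").wait(NotebookInstanceName=name)


# Hypothetical usage (instance name is a placeholder):
# import boto3
# resize_notebook_instance(boto3.client("sagemaker"), "my-notebook",
#                          instance_type="ml.m5.xlarge", volume_size_gb=50)
```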

Don’t forget to turn your notebook instance off, as you pay for every hour it is running, whether or not you are using it. Usually it’s best to implement a Lambda function to turn instances off automatically, based either on the time of day or on notebook utilization.
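Here is one way the time-of-day variant might look: a small Lambda handler that stops every notebook instance currently in service. This is a sketch, not a hardened implementation. The function names are mine, the schedule (for example, an evening cron rule that triggers the Lambda) is assumed to be configured separately, and the utilization-based variant is not covered.

```python
def stop_running_notebooks(sm_client):
    """Stop every notebook instance that is currently InService."""
    stopped = []
    paginator = sm_client.get_paginator("list_notebook_instances")
    for page in paginator.paginate(StatusEquals="InService"):
        for notebook in page["NotebookInstances"]:
            name = notebook["NotebookInstanceName"]
            sm_client.stop_notebook_instance(NotebookInstanceName=name)
            stopped.append(name)
    return stopped


def lambda_handler(event, context):
    """Lambda entry point; the role needs list and stop permissions on notebooks."""
    import boto3  # available in the Lambda Python runtime
    return {"stopped": stop_running_notebooks(boto3.client("sagemaker"))}
```

You may want to skip instances carrying a particular tag so that long-running work is not interrupted.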

SageMaker Dedicates Clusters for Each of Your Models

Now here’s the fun part — you can get dedicated EC2 instances for each of your models while they train. These are called training jobs, and you can configure them whether you’re using one of the 17 built-in algorithms, bringing your own model in a Docker container, or using AWS-managed containers under script mode.

Training Jobs on Amazon SageMaker

All of the details about your training job are sent to CloudWatch, and your model artifact is stored in S3 on completion.

Each training job is configured with an estimator on SageMaker, and you are not required to use one of the 17 built-in algorithms. They may save you some time, because you write less code by using them, but if you prefer to bring your own model with TensorFlow, MXNet, PyTorch, scikit-learn, or any other framework, SageMaker offers examples showing how that works.
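Under the hood, an estimator boils down to a training job request sent to the SageMaker API. To show which pieces you end up specifying, here is a sketch that builds the request for boto3's `create_training_job` directly rather than going through the SageMaker Python SDK. The function name, defaults, and every value in the usage comment are placeholders I chose for illustration.

```python
def build_training_job_request(job_name, image_uri, role_arn, train_s3_uri,
                               output_s3_uri, instance_type="ml.m5.xlarge",
                               instance_count=1, hyperparameters=None,
                               max_runtime_seconds=3600):
    """Assemble keyword arguments for the SageMaker create_training_job call."""
    return {
        "TrainingJobName": job_name,
        # The container that runs training: built-in, managed, or your own.
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        # Where the training data lives.
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        # Where the model artifact is written on completion.
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        # The dedicated instances for this job.
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
            "VolumeSizeInGB": 30,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_seconds},
        # The API expects hyperparameter values as strings.
        "HyperParameters": {k: str(v) for k, v in (hyperparameters or {}).items()},
    }


# Hypothetical usage (all names and URIs are placeholders):
# import boto3
# request = build_training_job_request("demo-job", "<training-image-uri>",
#                                      "<execution-role-arn>",
#                                      "s3://my-example-bucket/train/",
#                                      "s3://my-example-bucket/output/",
#                                      hyperparameters={"epochs": 10})
# boto3.client("sagemaker").create_training_job(**request)
```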

Every training job is logged in the AWS console, so you can easily see which dataset you used, where it lives, where the model is, and the objective result, even 6+ months after you finished.

SageMaker Automatically Creates a RESTful API Around Your Model

Trained your model somewhere else? No worries! You can actually bring any pre-trained model and host it on SageMaker — as long as you can get it in a Docker container, you can run it on SageMaker.

Use SageMaker to Create a RESTful API around any model

If you’re bringing a model in a framework supported by the script-mode managed containers, AWS implements the API for you.

It’ll take 7+ minutes to initialize all the resources for your endpoint, so don’t fret if you see the endpoint showing status “Creating.” If you are putting this into production to serve regular traffic, it’s best to set a minimum of two EC2 instances for a highly available API. These instances live behind an AWS-managed load balancer and behind the endpoint itself, which a Lambda function receiving your application traffic can call.
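The Lambda side of that setup can be quite small. The sketch below assumes the model container accepts and returns JSON, that the endpoint name is supplied through an `ENDPOINT_NAME` environment variable, and that the incoming event carries a JSON string under `body`. All three are my assumptions, not something SageMaker dictates.

```python
import json
import os


def predict(runtime_client, endpoint_name, payload):
    """Send a JSON payload to a SageMaker endpoint and decode the JSON reply."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    return json.loads(response["Body"].read())


def lambda_handler(event, context):
    """Lambda entry point that forwards the request body to the endpoint."""
    import boto3  # available in the Lambda Python runtime
    runtime = boto3.client("sagemaker-runtime")
    result = predict(runtime, os.environ["ENDPOINT_NAME"], json.loads(event["body"]))
    return {"statusCode": 200, "body": json.dumps(result)}
```

If your container expects CSV or another format, change the `ContentType` and the serialization to match.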

Endpoints left on can also get pricey, so make sure to implement a Lambda function to turn them off regularly if they’re not in a production environment. Feel free to experiment here and pick a smaller EC2 instance that is still robust enough for your needs. Endpoints support autoscaling out of the box; you just need to configure and load-test it.
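Configuring autoscaling goes through the Application Auto Scaling service rather than SageMaker itself. Here is a hedged sketch of a target-tracking setup. The variant name `AllTraffic`, the instance bounds, and the target of 100 invocations per instance are illustrative guesses that you should replace with numbers from your own load tests.

```python
def configure_endpoint_autoscaling(aas_client, endpoint_name, variant_name="AllTraffic",
                                   min_instances=2, max_instances=4,
                                   invocations_per_instance=100.0):
    """Register an endpoint variant for autoscaling and attach a tracking policy."""
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"

    # Tell Application Auto Scaling which resource to manage and within what bounds.
    aas_client.register_scalable_target(
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        MinCapacity=min_instances,
        MaxCapacity=max_instances,
    )
    # Scale to keep invocations per instance near the target value.
    aas_client.put_scaling_policy(
        PolicyName=f"{endpoint_name}-invocations-target",
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance",
            },
        },
    )
    return resource_id


# Hypothetical usage (endpoint name is a placeholder):
# import boto3
# configure_endpoint_autoscaling(boto3.client("application-autoscaling"), "my-endpoint")
```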

SageMaker Logs All of Your Resources

For all of your training jobs, endpoints, and hyperparameter tuning jobs, SageMaker will log these for you in the console by default. Each job emits metrics to CloudWatch, so you can view these in near real-time to monitor how your models are training. Additionally, with the advancements provided by SageMaker Studio, you can establish Experiments and monitor progress on these.
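If you would rather pull a job's results into code than click through the console, the training job description includes the final metric values. A minimal sketch, with a function name of my own choosing:

```python
def final_metrics(sm_client, training_job_name):
    """Return the final metrics of a training job as a {name: value} dict."""
    description = sm_client.describe_training_job(TrainingJobName=training_job_name)
    # FinalMetricDataList may be absent if the job emitted no metrics.
    return {
        metric["MetricName"]: metric["Value"]
        for metric in description.get("FinalMetricDataList", [])
    }


# Hypothetical usage (job name is a placeholder):
# import boto3
# print(final_metrics(boto3.client("sagemaker"), "demo-job"))
```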

SageMaker Studio provides experiment management to easily view and track progress against projects.

After you create an experiment in SageMaker, all jobs associated with it show up in Studio with a click of a button.

SageMaker Studio was announced at re:Invent 2019, and it is still in public preview. That means AWS is still fine-tuning the solution, and there may be some changes as they develop it. Because the preview is public, anyone with an AWS account can open up Studio in us-east-2 (Ohio) and get started.

Resources

AWS is overflowing with resources around machine learning. There are 250+ example notebooks for SageMaker hosted on GitHub right here. Hundreds of training videos are available for free across different roles and levels of experience here. If you’d like a deep dive on any of the content in this post, I’ve personally gone through the trouble of outlining all of these features in an 11-video series, hosted here. Check it out!

SageMaker is available by default in every AWS account. You can also create your own AWS account and keep your experimenting inside the Free Tier.

Happy hacking! And feel free to connect on LinkedIn — I love hearing success stories of how you’re implementing SageMaker on your very own machine learning adventures, so keep them coming!
