With the rise of Large Language Models (LLMs) like ChatGPT and GPT-4, many are asking if it’s possible to train a private ChatGPT with their corporate data. But is this feasible? Can such language models offer these capabilities?

In this article, I will discuss the architecture and data requirements needed to create “your private ChatGPT” that leverages your own data. We will explore the advantages of this technology and how you can overcome its current limitations.

Disclaimer: this article provides an overview of architectural concepts that are not specific to Azure but are illustrated using Azure services since I am a Solution Architect at Microsoft.

1. Disadvantages of fine-tuning an LLM with your own data

People often refer to fine-tuning (training) as a solution for adding their own data on top of a pretrained language model. However, this approach has drawbacks, such as the risk of hallucinations, as mentioned during the recent GPT-4 announcement. In addition, GPT-4 has only been trained on data up to September 2021.

Common drawbacks when you fine-tune an LLM:

- Factual correctness and traceability: where does the answer come from?

- Access control: it is impossible to limit certain documents to specific users or groups

- Costs: new documents require retraining the model, and the fine-tuned model has to be hosted

These drawbacks make it extremely hard, close to impossible, to use fine-tuning for Question Answering (QA). How can we overcome these limitations and still benefit from LLMs?

2. Separate your knowledge from your language model

To ensure that users receive accurate answers, we need to separate our language model from our knowledge base. This allows us to leverage the semantic understanding of our language model while also providing our users with the most relevant information. All of this happens in real-time, and no model training is required.

It might seem like a good idea to feed all documents to the model at run-time, but this isn't feasible because of the limit on how much text (measured in tokens) a model can process at once. For example, GPT-3 supports up to 4K tokens, and GPT-4 up to 8K or 32K tokens. Since pricing is per 1,000 tokens, using fewer tokens also helps to save costs.
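To make the limit concrete, here is a rough back-of-the-envelope sketch. It assumes the common rule of thumb that one token is about four characters of English text; for exact counts you would use the model's own tokenizer (for example OpenAI's tiktoken library). The function names, the 4,096-token limit and the corpus size are illustrative assumptions.

```python
import math

# Rule of thumb (assumption): roughly 4 characters of English text per token.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; use the model's real tokenizer for exact counts."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], context_limit: int, reserved_for_answer: int = 500) -> bool:
    """Check whether all documents, plus room for the answer, fit in the context window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_for_answer <= context_limit


# Hypothetical corpus: 200 documents of 2,000 characters each (~500 tokens apiece).
corpus = ["x" * 2000 for _ in range(200)]
print(sum(estimate_tokens(d) for d in corpus))  # 100000
print(fits_in_context(corpus, context_limit=4096))  # False
print(fits_in_context(corpus[:5], context_limit=4096))  # True
```

Even a modest document collection quickly exceeds a 4K window, which is why only the most relevant text should be sent.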

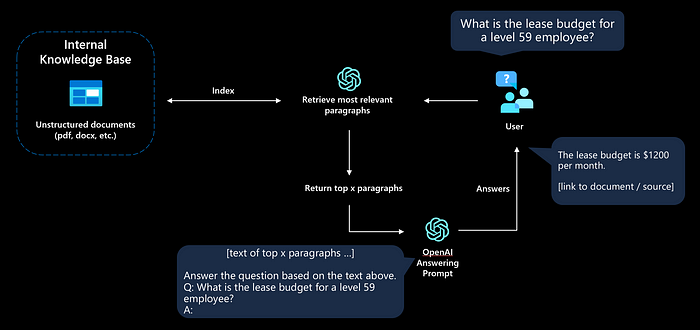

The approach for this would be as follows:

- User asks a question

- Application finds the most relevant text that (most likely) contains the answer

- A concise prompt with relevant document text is sent to the LLM

- The user receives an answer or a ‘No answer found’ response
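The four steps above can be sketched as one function. This is a minimal illustration rather than a production implementation: `retrieve` and `complete` are hypothetical placeholders for your search index and your LLM call, and the stub functions in the usage example stand in for both.

```python
from typing import Callable

NO_ANSWER = "No answer found"


def answer_question(
    question: str,
    retrieve: Callable[[str], list[str]],
    complete: Callable[[str], str],
) -> str:
    """Retrieval Augmented Generation in a few lines (hypothetical helper names)."""
    # Step 2: find the text that most likely contains the answer.
    sources = retrieve(question)
    if not sources:
        # Nothing relevant was found: return the fixed response instead of letting the model guess.
        return NO_ANSWER
    # Step 3: send a concise prompt with only the relevant text to the LLM.
    prompt = (
        "Answer the question using only the sources below. "
        f"If the sources do not contain the answer, reply '{NO_ANSWER}'.\n\n"
        "Sources:\n" + "\n".join(sources) + f"\n\nQuestion: {question}\nAnswer:"
    )
    # Step 4: return the model's answer (or its 'No answer found' reply).
    return complete(prompt)


# Stubs standing in for a real search index and a real LLM.
def fake_retrieve(q: str) -> list[str]:
    return ["handbook.pdf: Employees get 25 vacation days."] if "vacation" in q else []


def fake_complete(prompt: str) -> str:
    return "25 days [handbook.pdf]" if "vacation days" in prompt else NO_ANSWER


print(answer_question("How many vacation days do I get?", fake_retrieve, fake_complete))  # 25 days [handbook.pdf]
print(answer_question("What is the parking policy?", fake_retrieve, fake_complete))  # No answer found
```

Returning the fixed response when retrieval comes back empty means the model is never asked to answer without context.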

This approach is often referred to as grounding the model or Retrieval Augmented Generation (RAG). The application provides additional context to the language model so that it can answer the question based on relevant resources.

Now that you understand the high-level architecture required to build such a scenario, it is time to dive into the technical details.

3. Retrieve the most relevant data

Context is key. To ensure the language model has the right information to work with, we need to build a knowledge base that can be used to find the most relevant documents through semantic search. This will enable us to provide the language model with the right context, allowing it to generate the right answer.

3.1 Chunk and split your data

Since the answering prompt has a token limit, we need to cut our documents into smaller chunks. Depending on the size of your chunks, you could also include multiple relevant sections and generate an answer over multiple documents.
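Including multiple relevant sections means deciding how many chunks fit in the prompt. One simple approach, sketched below, adds chunks greedily until the token budget is used up. It assumes the chunks already arrive ranked by relevance and uses whitespace-separated words as a crude stand-in for tokens; `pack_chunks` is a hypothetical helper name.

```python
def count_tokens(text: str) -> int:
    """Crude proxy (assumption): one token per whitespace-separated word."""
    return len(text.split())


def pack_chunks(ranked_chunks: list[str], token_budget: int) -> list[str]:
    """Greedily keep the highest-ranked chunks that still fit in the token budget."""
    selected: list[str] = []
    used = 0
    for chunk in ranked_chunks:
        cost = count_tokens(chunk)
        if used + cost > token_budget:
            continue  # skip a chunk that would overflow; a smaller one further down may still fit
        selected.append(chunk)
        used += cost
    return selected


ranked = ["one two three four", "five six seven eight nine ten", "eleven twelve"]
print(pack_chunks(ranked, token_budget=7))  # ['one two three four', 'eleven twelve']
```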

We can start by simply splitting each document per page, or by using a text splitter that splits on a fixed token length. Once our documents are in a more accessible format, it is time to create a search index that can be queried with a user question.
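A splitter that cuts on a fixed length can be very small. In this sketch, words stand in for tokens as a simplifying assumption; a real splitter would count tokens with the model's tokenizer. `split_by_length` is a hypothetical name.

```python
def split_by_length(text: str, max_tokens: int) -> list[str]:
    """Split text into consecutive chunks of at most max_tokens words (words approximate tokens)."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    words = text.split()
    return [" ".join(words[i : i + max_tokens]) for i in range(0, len(words), max_tokens)]


print(split_by_length("a b c d e f g", max_tokens=3))  # ['a b c', 'd e f', 'g']
```

If your text extraction already returns one string per page, splitting per page simply means keeping that list as your chunks.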

In addition to these chunks, you should add metadata to your index. Store the original source and page number so you can link the answer to the original document. Also store metadata that can be used for access control and filtering.
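A chunk record carrying this metadata could look like the dataclass below. The field names (`source`, `page`, `allowed_groups`) are illustrative assumptions, not a schema required by any search product. The filter shows how group metadata lets you restrict results to what a given user may see, which is not possible with a fine-tuned model.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    source: str  # original document, used for citations
    page: int  # page number, so the answer can link back to the source
    allowed_groups: set[str] = field(default_factory=set)  # hypothetical access-control field


def visible_chunks(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep only chunks that share at least one group with the user."""
    return [c for c in chunks if c.allowed_groups & user_groups]


index = [
    Chunk("Salary bands for 2023.", "hr-confidential.pdf", 3, {"hr"}),
    Chunk("Office opening hours.", "handbook.pdf", 12, {"all-employees"}),
]
for chunk in visible_chunks(index, user_groups={"all-employees"}):
    print(f"{chunk.source} (p. {chunk.page}): {chunk.text}")  # handbook.pdf (p. 12): Office opening hours.
```

In a managed search service you would typically express the same idea as a filter on an indexed field rather than in application code.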

option 1: use a search product

The easiest way to build a semantic search index is to leverage an existing Search as a Service platform. On Azure, for example, you can use Cognitive Search, which offers a managed document ingestion pipeline and semantic ranking that leverages the language models behind Bing.

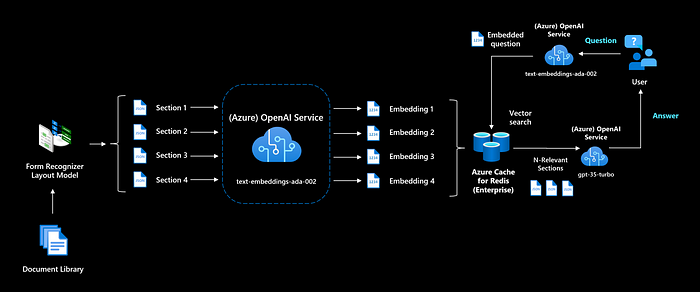

option 2: use embeddings to build your own semantic search

An embedding is a vector (list) of floating point numbers. The distance between two vectors measures their relatedness. Small distances suggest high relatedness and large distances suggest low relatedness. [1]
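A common way to measure this relatedness is cosine similarity, with cosine distance defined as one minus the similarity. The sketch uses plain Python lists; the three-dimensional vectors are made up for illustration, since real embedding models return vectors with many more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors: 1.0 = same direction, 0.0 = orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Small distance suggests high relatedness, large distance suggests low relatedness."""
    return 1.0 - cosine_similarity(a, b)


# Toy 3-dimensional vectors (real embeddings have far more dimensions).
question = [0.9, 0.1, 0.0]
doc_same_topic = [0.8, 0.2, 0.0]
doc_other_topic = [0.0, 0.1, 0.9]
print(round(cosine_distance(question, doc_same_topic), 3))
print(round(cosine_distance(question, doc_other_topic), 3))
```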

If you want to leverage the latest semantic models and have more control over your search index, you could use the text embedding models from OpenAI. You will need to precompute embeddings for all your sections and store them.
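The precompute-then-search flow can be sketched with an in-memory list. To keep the example self-contained and runnable, `toy_embed` is a hypothetical stand-in that hashes words into a small vector (a bag-of-words trick, not a semantic model); in practice you would call an OpenAI text embedding model at that point and store the vectors in a vector database.

```python
import hashlib
import math
import re

DIMENSIONS = 64  # toy size; real embedding models return far larger vectors


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: a hashed bag of words, L2-normalized."""
    vector = [0.0] * DIMENSIONS
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = hashlib.md5(word.encode("utf-8")).digest()[0] % DIMENSIONS
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0  # avoid dividing by zero for empty text
    return [v / norm for v in vector]


def build_index(sections: list[str]) -> list[tuple[str, list[float]]]:
    """Ingestion time: precompute and store one embedding per section."""
    return [(section, toy_embed(section)) for section in sections]


def search(index: list[tuple[str, list[float]]], question: str, top_k: int = 3) -> list[str]:
    """Run time: embed the question and return the top_k most similar sections."""
    q = toy_embed(question)
    # Both vectors are normalized, so the dot product equals cosine similarity.
    ranked = sorted(index, key=lambda item: sum(a * b for a, b in zip(q, item[1])), reverse=True)
    return [section for section, _ in ranked[:top_k]]


sections = [
    "In-network deductibles are $500 for employee and $1000 for family.",
    "Overlake is in-network for the employee plan.",
    "The cafeteria opens at 8am.",
]
index = build_index(sections)
print(search(index, "employee plan deductibles", top_k=2))
```

The toy hash only matches identical words; a real embedding model would also match paraphrases and synonyms, which is the point of semantic search.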

On Azure, you can store these embeddings in a managed vector database such as Cognitive Search with Vector Search (preview) or Azure Cache for Redis (RediSearch), or in a dedicated vector database such as Weaviate (open source) or Pinecone (a managed service). At run-time, the application first turns the user question into an embedding, so that it can compare the cosine similarity of the question embedding with the document embeddings generated earlier. Advanced search products like Cognitive Search can perform a hybrid search that combines the best of keyword search and vector search.
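A hybrid search has to merge a keyword ranking and a vector ranking into a single list. One widely used method is Reciprocal Rank Fusion (RRF), sketched below: each document scores 1/(k + rank) in every list it appears in, and the scores are summed. The constant k=60 is the value commonly used in the RRF literature, and the document IDs are made up. Treat this as an illustration of the idea rather than a description of how any particular product scores results.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of document IDs; documents ranked high in several lists rise to the top."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for a stable order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_results = ["info3.pdf", "info1.txt", "info2.pdf"]  # e.g. exact matches on "Overlake"
vector_results = ["info1.txt", "info2.pdf", "info4.pdf"]  # semantically similar passages
print(reciprocal_rank_fusion([keyword_results, vector_results]))
# ['info1.txt', 'info2.pdf', 'info3.pdf', 'info4.pdf']
```

Because RRF only uses ranks, it does not need keyword scores and vector similarities to be on the same scale.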

(a deep dive on embeddings can be found on Towards Data Science)

3.2 Improve relevancy with different chunking strategies

To find the most relevant information, it is important that you understand your data and potential user queries. What kind of data do you need to answer the question? The answer determines how your data is best split.

Common patterns that might improve relevancy are:

- Use a sliding window: chunking per page or per token can have the unwanted effect of losing context. Use a sliding window so that consecutive chunks overlap, which increases the chance that the most relevant information ends up together in one chunk.

- Provide more context: a very structured document with sections that nest multiple levels deep (e.g. section 1.3.3.7) could benefit from extra context such as the chapter and section title. You could parse these sections and add this context to every chunk.

- Summarization: create chunks that contain a summary of a larger document section. This allows you to capture the most essential text and bring it all together in one chunk.
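The first two patterns can be combined in a short sketch: a sliding window with overlap, where each chunk is prefixed with its section title. Words again approximate tokens, and the `sliding_window_chunks` name and the `window`/`overlap` values are illustrative assumptions. Summarization is left out because it requires an LLM call.

```python
def sliding_window_chunks(text: str, window: int, overlap: int, context: str = "") -> list[str]:
    """Split text into word windows that overlap by `overlap` words, optionally prefixing context."""
    if not 0 <= overlap < window:
        raise ValueError("overlap must be >= 0 and smaller than window")
    words = text.split()
    step = window - overlap
    chunks = []
    for start in range(0, len(words), step):
        piece = " ".join(words[start : start + window])
        # Prepend the chapter/section title so each chunk carries its own context.
        chunks.append(f"{context}\n{piece}" if context else piece)
        if start + window >= len(words):
            break  # this window already reaches the end of the text
    return chunks


section_text = "w1 w2 w3 w4 w5 w6 w7"
for chunk in sliding_window_chunks(section_text, window=4, overlap=2, context="1.3.3.7 Parental leave"):
    print(repr(chunk))
```

A larger overlap lowers the chance of cutting an answer in half, at the cost of more chunks to embed and store.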

4. Write a concise prompt to avoid hallucination

Designing your prompt is how you “program” the model, usually by providing some instructions or a few examples. [2]

Your prompt is an essential part of your ChatGPT implementation to prevent unwanted responses. Nowadays, people call prompt engineering a new skill and more and more samples are shared every week.

In your prompt, make clear that the model should be concise and only use data from the provided context. When it cannot answer the question, it should return a predefined ‘no answer’ response. The output should include footnotes (citations) to the original documents, so the user can verify the answer’s factual accuracy by checking the source.

An example of such a prompt:

```python
# Prompt template; {q} and {retrieved} are filled in at runtime.
template = (
    "You are an intelligent assistant helping Contoso Inc employees with their healthcare plan questions and employee handbook questions. " +
    "Use 'you' to refer to the individual asking the questions even if they ask with 'I'. " +
    "Answer the following question using only the data provided in the sources below. " +
    "For tabular information return it as an html table. Do not return markdown format. " +
    "Each source has a name followed by colon and the actual information, always include the source name for each fact you use in the response. " +
    "If you cannot answer using the sources below, say you don't know. " +
    """

###
Question: 'What is the deductible for the employee plan for a visit to Overlake in Bellevue?'

Sources:
info1.txt: deductibles depend on whether you are in-network or out-of-network. In-network deductibles are $500 for employee and $1000 for family. Out-of-network deductibles are $1000 for employee and $2000 for family.
info2.pdf: Overlake is in-network for the employee plan.
info3.pdf: Overlake is the name of the area that includes a park and ride near Bellevue.
info4.pdf: In-network institutions include Overlake, Swedish and others in the region

Answer:
In-network deductibles are $500 for employee and $1000 for family [info1.txt] and Overlake is in-network for the employee plan [info2.pdf][info4.pdf].

###
Question: '{q}'?

Sources:
{retrieved}

Answer:
"""
)
```

Source: prompt used in azure-search-openai-demo (MIT license)

The prompt uses one-shot learning to improve the response: it provides one example of how a user question should be handled, including sources with a unique identifier and an example answer composed of text from multiple sources. At runtime, {q} is populated with the user question and {retrieved} with the relevant sections from your knowledge base to form the final prompt.
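Populating `{q}` and `{retrieved}` comes down to rendering each retrieved section as "name: text", one per line, as in the example sources, and substituting both values with `str.format`. The shortened `TEMPLATE` and the `build_prompt` helper below are illustrative assumptions, not code from the demo.

```python
TEMPLATE = (
    "Answer the following question using only the data provided in the sources below. "
    "If you cannot answer using the sources below, say you don't know.\n\n"
    "Question: '{q}'?\n\nSources:\n{retrieved}\n\nAnswer:\n"
)


def build_prompt(question: str, sections: list[tuple[str, str]]) -> str:
    """Fill the template; each section is a (source name, text) pair rendered as 'name: text'."""
    retrieved = "\n".join(f"{name}: {text}" for name, text in sections)
    # The template already appends '?', so strip a trailing question mark from the user input.
    return TEMPLATE.format(q=question.rstrip("?"), retrieved=retrieved)


prompt = build_prompt(
    "Is Overlake in-network?",
    [("info2.pdf", "Overlake is in-network for the employee plan.")],
)
print(prompt)
```

`str.format` does not re-interpret braces inside the substituted values, so a user question containing `{` or `}` is inserted as-is.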

Set a low temperature in your request parameters if you want more consistent, deterministic responses. Increasing the temperature results in more unexpected or creative responses.

Finally, this prompt is used to generate a response via the (Azure) OpenAI API. If you use the gpt-35-turbo model (ChatGPT), you can pass the conversation history with every turn so that users can ask clarifying questions, or so that you can perform other reasoning tasks (e.g. summarization). A great resource to learn more about prompt engineering is dair-ai/Prompt-Engineering-Guide on GitHub.
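With gpt-35-turbo, each request carries the conversation so far as a list of role/content messages, and the temperature is sent alongside it. The sketch below only builds the request body and makes no network call. The system/user/assistant roles follow the Chat Completions message format, while the `build_chat_request` helper, the model placeholder and the default temperature are assumptions to adapt to your own setup and SDK.

```python
def build_chat_request(
    system_prompt: str,
    history: list[tuple[str, str]],
    user_question: str,
    temperature: float = 0.0,
) -> dict:
    """Build a Chat Completions-style request body; history holds (user, assistant) turns."""
    messages = [{"role": "system", "content": system_prompt}]
    # Replay earlier turns so the model can resolve follow-up questions.
    for user_turn, assistant_turn in history:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    messages.append({"role": "user", "content": user_question})
    return {
        "model": "gpt-35-turbo",  # placeholder: on Azure OpenAI you pass your deployment name instead
        "messages": messages,
        "temperature": temperature,  # low values give more consistent answers
    }


request = build_chat_request(
    "Answer using only the provided sources.",
    history=[("Is Overlake in-network?", "Yes, Overlake is in-network [info2.pdf].")],
    user_question="And what is the deductible there?",
)
print([m["role"] for m in request["messages"]])  # ['system', 'user', 'assistant', 'user']
```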

5. Next steps

In this article, I discussed the architecture and design patterns needed to build such an implementation, without delving into the specifics of the code. These patterns are commonly used today, and the following projects and notebooks can serve as inspiration to help you get started.

- Azure OpenAI Service On Your Data, a new feature that allows you to combine OpenAI models, such as ChatGPT and GPT-4, with your own data in a fully managed way. No complex infrastructure or code required.

- ChatGPT Retrieval Plugin, which lets ChatGPT access up-to-date information. For now, this only supports the public ChatGPT, but hopefully the capability to add plugins will be added to the ChatGPT API (OpenAI + Azure) in the future.

- LangChain, a popular library to combine LLMs with other sources of computation or knowledge

- Azure Cognitive Search + OpenAI accelerator, a ChatGPT-like experience over your own data, ready to deploy

- OpenAI Cookbook, an example of how to leverage OpenAI embeddings for Q&A in a Jupyter notebook (no infrastructure required)

- Semantic Kernel, a new library to mix conventional programming languages with LLMs (prompt templating, chaining, and planning capabilities)

From here, you can extend ‘your own ChatGPT’ by linking it to more systems and capabilities via tools like LangChain or Semantic Kernel. The possibilities are endless.

Conclusion

In conclusion, relying solely on a language model to generate factual text is a mistake. Fine-tuning a model won’t help either, as it won’t give the model any new knowledge and doesn’t provide you with a way to verify its response. To build a Q&A engine on top of an LLM, separate your knowledge base from the large language model, and only generate answers based on the provided context.

If you enjoyed this article, feel free to connect with me on LinkedIn, GitHub or Twitter.

References

[1] OpenAI. “Embeddings”, OpenAI API documentation (March 2023), https://platform.openai.com/docs/guides/embeddings

[2] OpenAI. “Introduction”, OpenAI API documentation (March 2023), https://platform.openai.com/docs/introduction/prompts

[3] Bubeck, S., Chandrasekaran, V., Eldan, R., Gehrke, J., Horvitz, E., Kamar, E., Lee, P., Lee, Y. T., Li, Y., Lundberg, S., Nori, H., Palangi, H., Ribeiro, M. T., Zhang, Y. “Sparks of Artificial General Intelligence: Early experiments with GPT-4” (2023), arXiv:2303.12712

[4] Schick, T., Dwivedi-Yu, J., Dessì, R., Raileanu, R., Lomeli, M., Zettlemoyer, L., Cancedda, N., Scialom, T. “Toolformer: Language Models Can Teach Themselves to Use Tools” (2023), arXiv:2302.04761

[5] Mialon, G., Dessì, R., Lomeli, M., Nalmpantis, C., Pasunuru, R., Raileanu, R., Rozière, B., Schick, T., Dwivedi-Yu, J., Celikyilmaz, A., Grave, E., LeCun, Y., Scialom, T. “Augmented Language Models: a Survey” (2023), arXiv:2302.07842
