Prerequisites :-

This exam is not designed for beginners. It is not a formal prerequisite, but I strongly recommend obtaining the Associate Cloud Engineer certification first. You can find more information on how to prepare for this exam in the following article.

It also helps to have prior knowledge of, or hands-on experience with, migrations.

About the PCA exam :-

Length: 2 hours

Exam format: 50–60 multiple choice and multiple select questions

Case studies: Each exam includes 2 case studies that describe fictitious business and solution concepts. Case study questions make up 20–30% of the exam and assess your ability to apply your knowledge to a realistic business situation. You can view the case studies on a split screen during the exam. See the Exam Guide for the 4 available case studies.

Important Topics :-

These are some of the topics I faced during my exam; many of the questions were challenging.

Google Kubernetes Engine (Binary Authorization, node pool upgrades, Anthos)

IAM (multiple questions were based on the principle of least privilege at the organisation, folder, and project levels)

Firebase (Firebase Test Lab)

Compute Engine (OS patching, MIG updates in proactive and opportunistic modes)

Cloud Storage (retention policies and lifecycle management, Storage Transfer Service, gsutil multithreading (-m) and parallel composite uploads, data lake for batch data, Cloud Storage FUSE, signed URLs)

Cloud Pub/Sub (ingesting real-time data, configuring log export to Pub/Sub)

Cloud Dataflow and Dataproc

Cloud Bigtable (storage for real-time time-series data)

BigQuery (data warehouse used for data analysis)

Cloud SQL and Spanner

Transfer Appliance (for transferring huge volumes of data)

Dedicated and Partner Interconnect, Cloud VPN, VPC peering, Shared VPC (several questions asked how to optimize costs, improve connection speed, and create a private connection between on-premises networks and GCP VPCs)

Cloud DLP (use Data Loss Prevention before analyzing sensitive data)

Cloud Datastore (for session-based applications)

Load balancers (Layer 7 and Layer 4)

Cloud CDN

Operations Suite (Monitoring, Logging, Profiler, Debugger, Trace, Error Reporting)

Cloud Composer

API management (Apigee, Cloud Endpoints)

Vertex AI, AutoML, Video Intelligence API

Cloud KMS

Compliance questions (selecting the SLI for a particular application)

Google App Engine (versioning and traffic splitting)

Cloud Armor (protection against DDoS attacks)

NOTE: I had two case studies in the exam: EHR Healthcare and Mountkirk Games.

Resources for Preparation :-

These are the resources I used to prepare for the PCA exam; preparation took me about 6 months.

Official Google Cloud Certified Professional Cloud Architect Study Guide by Dan Sullivan.

GCP Professional Cloud Architect: Google Cloud Certification by in28Minutes Official.

PCA exam playlist by the AwesomeGCP YouTube channel.

Google Cloud Tech YouTube channel, for more info on services like Cloud Storage, BigQuery, Vertex AI, etc.

GCP Sketch Book by Priyanka Vergadia for quick revision.

GCP case study solutions by The Cloud Pilot YouTube channel.

Google Cloud Skills Boost (formerly Qwiklabs) for hands-on labs.

A collection of posts, videos, courses, Qwiklabs, and other exam details for all exams: GitHub repo by Sathish VJ.

For mock tests and practice questions, I used several websites. In my view, it is very important to get used to the type of questions you will face before the actual exam.

- Practice questions from examtopics.

- Practice questions from gcp-examquestions.

- I used Whizlabs’ paid mock tests, which were helpful. However, the actual exam questions were much more difficult than what I had practiced. So, don’t be overconfident even if you are doing well on the mock tests.

In 2023, Data Engineering is likely to continue to be an important field as the amount of data being generated by businesses and organizations is expected to continue to grow. Data engineers will be responsible for building and maintaining the pipelines that allow this data to be collected, stored, and processed, enabling organizations to make informed decisions based on data-driven insights.

Obtaining the Google Cloud Professional Data Engineer certification can be beneficial for a number of reasons:

- Demonstrate your skills and knowledge: The certification is a way to demonstrate to potential employers or clients that you have the skills and knowledge required to work with the Google Cloud Platform as a data engineer.

- Enhance your career prospects: Many employers value certifications and may be more likely to hire or promote individuals who have them. Obtaining the Google Cloud Professional Data Engineer certification can therefore improve your career prospects.

- Stay current with industry trends: The certification exam covers the latest trends and best practices in the field of data engineering, so obtaining the certification can help you stay up-to-date with developments in the industry.

- Increase your credibility: The certification is an objective third-party validation of your skills and knowledge, which can increase your credibility with employers and clients.

- Enhance your earning potential: Data engineers with certifications may be able to command higher salaries or consulting rates due to their demonstrated expertise.

Overall, the Google Cloud Professional Data Engineer certification can be a valuable asset for individuals working in the field of data engineering.

Contents

- What is required to be known before pursuing the certification?

- Designing data processing systems

- Building and operationalizing data processing systems

- Operationalizing machine learning models

- Ensuring solution quality

- Courses and Books

- Practice Tests

- Conclusion

- References

What is required to be known before pursuing the certification?

Google Cloud recommends at least 3 years of experience before taking the exam. You need to answer 50 multiple-choice and multiple-select questions in 120 minutes, and the exam costs $200.

The exam tests your knowledge of and experience with GCP services, tools, and applications through scenario-based questions. To pass, you need to understand these tools and have worked with them.

The official exam guide has divided the contents of the exam into the following four sections.

- Designing data processing systems

- Building and operationalizing data processing systems

- Operationalizing machine learning models

- Ensuring solution quality

Mastering the vast array of tools and applications offered by Google Cloud Platform (GCP) is no easy task. This article covers the strategies and resources that helped me pass the GCP Professional Data Engineer exam.

- Review the exam guide and become familiar with the objectives and skills tested.

- Gain hands-on experience with the Google Cloud Platform.

- Use study materials and practice exams to supplement your learning.

- Stay current with industry trends and developments.

- Create a study plan and stick to it to stay on track with your preparation.

- Consider joining a study group or finding a study partner to help you prepare for the exam.

- Be sure to allocate sufficient time and resources for studying and preparing for the exam.

- Take advantage of resources such as forums and online communities to get support and advice from other professionals preparing for the exam.

Designing data processing systems

This section covers the essential Google Cloud data processing services. In my experience, Pub/Sub, Dataflow, Apache Beam concepts, Dataproc, Apache Kafka vs. Pub/Sub, Storage Transfer Service, and Transfer Appliance come up often in the exam.

Dataproc

Dataproc is Google's fully managed, highly scalable service for running Apache Spark, Apache Hadoop, Apache Flink, Presto, and other open-source frameworks. You can use it to modernize data lakes, run ETL operations, and secure data science operations at scale.

Key Points:

- Use Cloud Storage for persistent storage instead of HDFS on the cluster, and keep the bucket and the cluster in the same region.

- When autoscaling, prefer scaling secondary worker nodes. Use preemptible VMs (PVMs). They are highly affordable, short-lived instances suitable for batch and fault-tolerant jobs.

- A high percentage of PVMs may lead to failed jobs or other related issues. Google recommends using no more than 30% PVMs for secondary worker instances.

- Rigorously test fault-tolerant jobs that use PVMs before moving them to production.

- Set the graceful decommission timeout longer than the longest-running job on the cluster. This way nodes are not removed while jobs are still running, which avoids failed jobs and the unnecessary cost of rerunning them.
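The last two rules of thumb (the 30% preemptible cap and the graceful decommission timeout) can be turned into a small planning helper. This is a minimal sketch, not a Dataproc API. The function names and the 300-second buffer are hypothetical, and the sketch assumes the 30% is measured against all workers in the cluster.

```python
def max_preemptible_workers(total_workers: int, max_percent: int = 30) -> int:
    """Largest preemptible-worker count whose share stays at or below max_percent.

    Assumption: the percentage is measured against all workers in the cluster.
    Integer arithmetic avoids floating-point rounding surprises.
    """
    if total_workers < 0 or not 0 <= max_percent <= 100:
        raise ValueError("total_workers must be >= 0 and max_percent in [0, 100]")
    return total_workers * max_percent // 100


def graceful_decommission_timeout_s(job_durations_s: list, buffer_s: int = 300) -> int:
    """Timeout longer than the longest-running job, plus a hypothetical safety buffer."""
    return max(job_durations_s, default=0) + buffer_s


print(max_preemptible_workers(20))                          # 6
print(graceful_decommission_timeout_s([1200, 3600, 900]))  # 3900
```

The durations would come from your own job history. The buffer simply keeps the timeout strictly above the longest observed run.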

Pubsub

Cloud Pub/Sub is a fully managed, asynchronous, and scalable messaging service. It decouples the services that produce messages from the services that process them, with latencies on the order of 100 milliseconds.

Key Points:

- Attach a schema to a topic to enforce a uniform message format.

- Use Cloud Monitoring to analyze Pub/Sub metrics, and set up alerting to monitor topics and subscriptions.

- Messages are persisted in a message store until they are acknowledged by subscribers.
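The persistence rule above, together with the ack deadline, is what gives Pub/Sub at-least-once delivery. The toy in-memory model below is a minimal sketch of that behavior. It is not the google-cloud-pubsub client library, and the class and method names are hypothetical. The 10-second ack deadline matches Pub/Sub's default.

```python
from dataclasses import dataclass, field


@dataclass
class ToySubscription:
    """In-memory model of a Pub/Sub subscription (not the real client library).

    A message stays in the store until it is acknowledged. Pulling leases a
    message for ack_deadline_s seconds; if it is not acked in time, it becomes
    eligible for redelivery (at-least-once delivery).
    """
    ack_deadline_s: float = 10.0
    _store: dict = field(default_factory=dict)   # message_id -> payload
    _leases: dict = field(default_factory=dict)  # message_id -> lease expiry time
    _next_id: int = 0

    def publish(self, payload: str) -> int:
        self._next_id += 1
        self._store[self._next_id] = payload
        return self._next_id

    def pull(self, now: float) -> list:
        """Deliver every stored message that is not currently leased."""
        delivered = []
        for msg_id, payload in self._store.items():
            if self._leases.get(msg_id, float("-inf")) <= now:
                self._leases[msg_id] = now + self.ack_deadline_s
                delivered.append((msg_id, payload))
        return delivered

    def ack(self, msg_id: int) -> None:
        """Acknowledging deletes the message, so it is never redelivered."""
        self._store.pop(msg_id, None)
        self._leases.pop(msg_id, None)


sub = ToySubscription()
sub.publish("a")
sub.publish("b")
print(sub.pull(now=0))   # [(1, 'a'), (2, 'b')]
sub.ack(1)               # only "a" is acknowledged
print(sub.pull(now=5))   # [] ("b" is still leased)
print(sub.pull(now=11))  # [(2, 'b')] (lease expired, so it is redelivered)
```

Because an unacknowledged message can arrive twice, subscribers should process messages idempotently.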

Dataflow

Dataflow is a serverless batch and stream processing service that is reliable, horizontally scalable, fault-tolerant, and consistent. It is often used for data preparation and ETL operations.

Key Points:

- A single pipeline can read from multiple sources and write to multiple sinks in parallel, in a distributed fashion.

- Use the Cloud Dataflow connector for Cloud Bigtable to use Bigtable in Dataflow pipelines.

- Study the windowing techniques: tumbling (fixed), hopping (sliding), session, and single global windows.

- By default, a single global window is applied regardless of the pipeline type (batch or streaming). Non-global windows are recommended for stream processing.

- Study the different types of triggers: time-based, data-driven, and composite triggers.
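To make the three non-global window types concrete, here is a pure-Python sketch of how an element's timestamp maps to windows. It models the semantics only. In a real pipeline you would use Beam's `FixedWindows`, `SlidingWindows`, and `Sessions`, and these helper functions are hypothetical. Windows are half-open `[start, end)` intervals over integer timestamps.

```python
def fixed_windows(ts: int, size: int) -> list:
    """Tumbling (fixed) windows: each timestamp falls in exactly one window."""
    start = ts - ts % size
    return [(start, start + size)]


def sliding_windows(ts: int, size: int, period: int) -> list:
    """Hopping (sliding) windows: a window starts every `period`, so windows overlap."""
    windows = []
    start = ts - ts % period           # latest window start at or before ts
    while start + size > ts:           # window still contains ts
        windows.append((start, start + size))
        start -= period
    return sorted(windows)


def session_windows(timestamps: list, gap: int) -> list:
    """Session windows: events closer than `gap` merge; a session ends `gap` after its last event."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts < sessions[-1][1]:
            sessions[-1] = (sessions[-1][0], ts + gap)  # extend the open session
        else:
            sessions.append((ts, ts + gap))             # start a new session
    return sessions


print(fixed_windows(7, size=5))                 # [(5, 10)]
print(sliding_windows(7, size=10, period=5))    # [(0, 10), (5, 15)]
print(session_windows([1, 3, 20, 22], gap=5))   # [(1, 8), (20, 27)]
```

An element belongs to one fixed window but to `size / period` sliding windows, which is why sliding windows cost more to compute.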

Here is one of the best resources that helped me learn and revise Apache Beam concepts.

Data fusion

Cloud Data Fusion is a fully managed, cloud-native data integration service. It is built on the open-source core CDAP for pipeline portability. Because it is code-free, it lets you build ELT and ETL solutions at scale, and it includes 150+ preconfigured connectors and transformations.

Key Points:

- Best suited for rapid, code-free development; it helps you avoid technical bottlenecks and code maintenance.

- It has built-in features such as end-to-end data lineage and data protection services.

Data Migration Services

Storage Transfer Service helps move data from on-premises storage to the cloud and from other cloud storage to Cloud Storage.

Transfer Appliance handles one-time transfers of petabytes of data using a highly secure storage device that is shipped to Google.
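A back-of-the-envelope calculation shows why an offline appliance makes sense at petabyte scale. This sketch assumes a dedicated link running at a constant average utilization and decimal units (1 PB = 10^15 bytes). Real throughput is usually lower.

```python
SECONDS_PER_DAY = 86_400
PETABYTE = 10**15   # decimal petabyte, in bytes
GBPS = 10**9        # 1 Gbit/s, in bits per second


def transfer_days(data_bytes: int, bandwidth_bps: float, utilization: float = 1.0) -> float:
    """Days needed to send data_bytes over a link at the given average utilization."""
    if bandwidth_bps <= 0 or not 0 < utilization <= 1:
        raise ValueError("bandwidth must be positive and utilization in (0, 1]")
    seconds = data_bytes * 8 / (bandwidth_bps * utilization)
    return seconds / SECONDS_PER_DAY


print(round(transfer_days(PETABYTE, GBPS), 1))       # 92.6
print(round(transfer_days(PETABYTE, 10 * GBPS), 1))  # 9.3
```

Even a fully used 1 Gbit/s link needs about three months for 1 PB. That gap is what the appliance closes.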

Building and operationalizing data processing systems

Studying the BigQuery, Cloud SQL, Cloud Spanner, Cloud Storage, and Cloud Datastore documentation helps a lot with the fine details that are often asked in the exam.

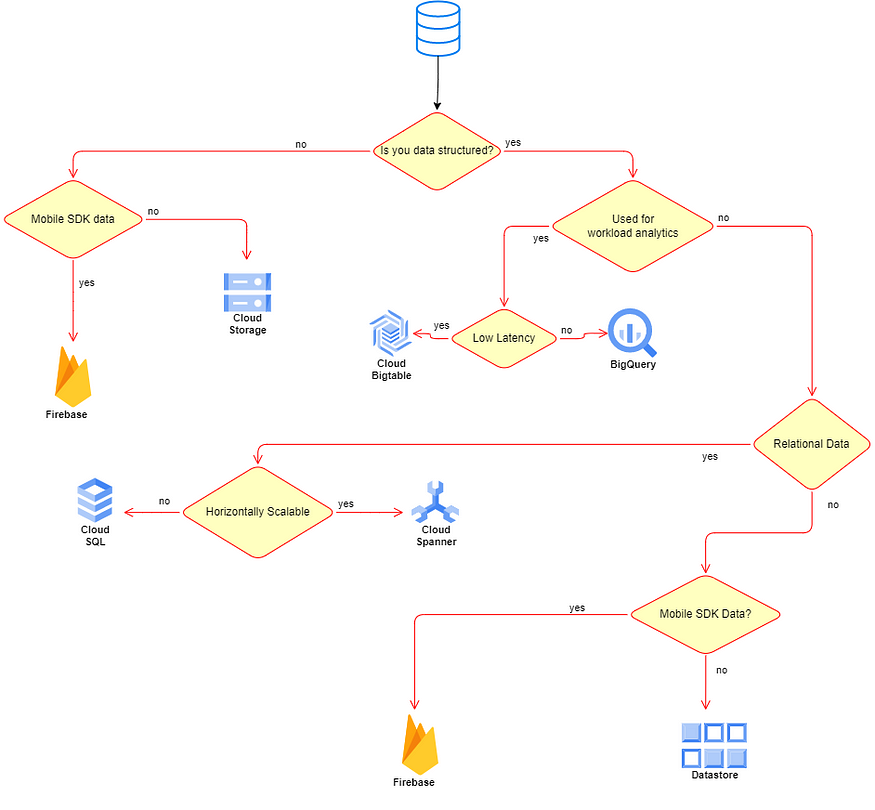

Google Cloud offers several data storage services; the diagram above gives a quick overview of them.

Cloud Storage

It’s a managed object storage system that stores data as blobs and provides virtually unlimited storage.

- Standard: no minimum storage duration and no retrieval fees.

- Nearline: minimum storage duration of 30 days; retrieval fees apply.

- Coldline: minimum storage duration of 90 days; retrieval fees apply.

- Archive: minimum storage duration of 365 days; retrieval fees apply.
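The minimum storage durations matter when objects are deleted early: Cloud Storage charges as if the object had been stored for the full minimum. The sketch below counts billed days only. Prices are deliberately left out, and the function names are hypothetical.

```python
# Minimum storage durations (days) per storage class, as listed above.
MIN_STORAGE_DAYS = {"STANDARD": 0, "NEARLINE": 30, "COLDLINE": 90, "ARCHIVE": 365}


def billed_storage_days(storage_class: str, days_stored: int) -> int:
    """Days of storage charged: deleting early still bills the class minimum."""
    minimum = MIN_STORAGE_DAYS[storage_class.upper()]
    return max(days_stored, minimum)


def early_deletion_days(storage_class: str, days_stored: int) -> int:
    """Extra days billed beyond the time the object actually existed."""
    return billed_storage_days(storage_class, days_stored) - days_stored


print(billed_storage_days("coldline", 10))  # 90
print(early_deletion_days("nearline", 10))  # 20
print(early_deletion_days("standard", 3))   # 0
```

This is why short-lived data belongs in Standard even though its per-GB price is the highest.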

Data is encrypted at rest by default. Google Cloud also offers other encryption options: customer-supplied encryption keys (CSEK), customer-managed encryption keys (CMEK), and client-side encryption.

Cloud BigTable

Cloud Bigtable is a NoSQL database service for large analytical and operational workloads. It provides high throughput, low latency, and no-ops management, and it runs on Google's internal Colossus storage system.

Key Points:

- It is not suitable for relational data.

- It is suitable for petabyte-scale data and is not efficient for data smaller than 1 terabyte (TB).

- Bigtable is sparse storage and has a limit of 1,000 tables per instance. Far fewer tables are recommended; having many small tables is an anti-pattern.

- Put related columns in a column family with a short, meaningful name. You can create up to 100 column families per table.

- Design row keys around how you intend to query the data. Keep them short (4 KB or less) and human-readable, starting with a common value and ending with a granular value.

- To increase read throughput, add replicated clusters; to increase both read and write throughput, add nodes to the cluster.

- Use Key Visualizer to detect hotspots, and use separate app profiles for each workload.

- For latency-sensitive applications, Google recommends keeping storage utilization per node below 60%.
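The row-key advice above ("common value first, granular value last") can be sketched as a small helper. Bigtable sorts rows lexicographically by key, so a key such as `metric#device#timestamp` keeps one device's series contiguous for prefix scans. Leading with a timestamp would instead send all new writes to one node (a hotspot). The helper name and the `#` separator are hypothetical choices for illustration.

```python
MAX_ROW_KEY_BYTES = 4 * 1024  # the 4 KB limit mentioned above


def build_row_key(*parts: str, sep: str = "#") -> bytes:
    """Join key parts from the most common value to the most granular one."""
    for part in parts:
        if sep in part:
            raise ValueError(f"key part {part!r} contains the separator {sep!r}")
    key = sep.join(parts).encode("utf-8")
    if len(key) > MAX_ROW_KEY_BYTES:
        raise ValueError("row key exceeds 4 KB")
    return key


key = build_row_key("cpu", "device-42", "20230201T1000")
print(key)                                 # b'cpu#device-42#20230201T1000'
print(key.startswith(b"cpu#device-42#"))   # True: one prefix scan returns this device's series
```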

BigQuery

BigQuery is a fully managed, serverless, scalable analytical data warehouse. It can connect to Google Cloud Storage (GCS), Bigtable, and Google Drive, and it can import data from CSV, JSON, Avro, and Datastore backups. The open-source alternative is Hive on Hadoop. By default, it expects all data to be UTF-8 encoded, and it also supports geospatial and ML functionality.

Key Points:

- When loading local files, you cannot upload multiple files at the same time or files larger than 10 MB, and you cannot load files in SQL format.

- Use partitioning and clustering to increase query performance and reduce costs.

- Don't rely on LIMIT to reduce cost on large tables. On a non-clustered table, LIMIT does not reduce the bytes scanned, so select only the columns you need.

- Batch loading is free, but streaming inserts add cost.

- Denormalize data as much as possible to optimize query performance and simplify queries.

- Use the pseudo-column _PARTITIONTIME to filter on partitions of ingestion-time partitioned tables.

- Use window functions to increase efficiency and reduce query complexity.

- Avoid self-joins and cross joins that generate more output rows than input rows.

- Batch DML changes so that one statement updates many rows, instead of issuing many single-row updates.

- Access is commonly granted at the dataset level. Use authorized views to share specific data without granting access to the underlying tables.

- If on-demand query costs are consistently high, consider flat-rate pricing to reduce them.

- BigQuery bills for storage, queries, and streaming inserts.
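Several of the cost tips above come down to one rule of on-demand billing: you pay for the columns you read in the partitions you touch. The toy estimator below illustrates that rule for a non-clustered, date-partitioned table. It is a simplified model, not BigQuery's billing engine. It ignores details such as per-query minimums, and all names and byte counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    date: str            # partition id in ISO format, e.g. "2023-02-01"
    column_bytes: dict   # column name -> bytes stored in this partition


def estimate_bytes_scanned(partitions, columns, date_from=None, date_to=None, limit=None) -> int:
    """Toy estimate of bytes billed for a non-clustered, date-partitioned table.

    Only the selected columns in partitions that survive the date filter are
    counted. `limit` is accepted on purpose and ignored, because LIMIT does
    not reduce the bytes read from such a table.
    """
    total = 0
    for p in partitions:
        if date_from is not None and p.date < date_from:
            continue  # pruned partition: not read at all
        if date_to is not None and p.date > date_to:
            continue
        total += sum(p.column_bytes[c] for c in columns)
    return total


table = [
    Partition("2023-02-01", {"user_id": 100, "payload": 1_000}),
    Partition("2023-02-02", {"user_id": 100, "payload": 1_000}),
]
print(estimate_bytes_scanned(table, ["user_id", "payload"]))               # 2200 (SELECT *)
print(estimate_bytes_scanned(table, ["user_id", "payload"], limit=10))     # 2200 (LIMIT changes nothing)
print(estimate_bytes_scanned(table, ["user_id"]))                          # 200 (fewer columns)
print(estimate_bytes_scanned(table, ["user_id"], date_from="2023-02-02"))  # 100 (partition pruning)
```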

Cloud SQL

Cloud SQL is a fully managed relational database service for MySQL, SQL Server, and PostgreSQL. It offers low latency and can store up to 64 TB. It supports importing and exporting databases with mysqldump, as well as importing and exporting CSV files.

- It supports high availability, failing over to a standby instance automatically.

- It supports read replicas, which reduce load on the primary instance by offloading read requests.
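Offloading reads usually happens in the application or a proxy: writes go to the primary, reads go to replicas. The router below is a minimal sketch of that idea. The class and host names are hypothetical, and classifying queries by a leading `SELECT` is deliberately naive (it would misroute, for example, a `WITH ... SELECT`). Replication to read replicas is asynchronous, so reads served by a replica may lag slightly behind the primary.

```python
import itertools


class ToyQueryRouter:
    """Send writes to the primary and spread reads round-robin across read replicas."""

    def __init__(self, primary: str, replicas: list):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary  # writes, and reads when no replica exists


router = ToyQueryRouter("primary-host", ["replica-1", "replica-2"])
print(router.route("SELECT * FROM orders"))           # replica-1
print(router.route("select count(*) FROM t"))         # replica-2
print(router.route("UPDATE orders SET paid = TRUE"))  # primary-host
```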

Cloud Spanner

Cloud Spanner is a fully managed, horizontally scalable, distributed relational database. It offers high availability with zero downtime for planned maintenance, and it supports ACID transactions globally.

- It provides strong consistency including strongly consistent secondary indices.

- It supports interleaved tables.

- It automatically shards data based on load and size to optimize performance.

- It provides low latency and automatic replication.

- It supports both regional and multi-regional instances.

Operationalizing machine learning models

There are fewer ML-related questions in the exam than questions on other services. I found them easier and more straightforward than the rest, and a basic understanding of the following services and concepts is enough to answer them.

The questions revolve around GCP's ML-related services, such as Spark ML, BigQuery ML, Dialogflow (chatbots), AutoML, Natural Language API, Vertex AI, Datalab, and Kubeflow.

This section covers the following machine learning concepts.

- Supervised learning

- Unsupervised learning

- Recommendation engines

- Neural Networks

- Optimizing ML models (overfitting, underfitting, etc.)

- Hardware acceleration

Here is the crash course that I found useful while preparing for the exam.

Ensuring solution quality

Google Cloud’s Identity and Access Management (IAM) enforces access restrictions based on the role and tasks of a user or group. There are three basic roles (Owner, Editor, and Viewer) and predefined roles for each service. You can also create custom roles when needed.

Use Cloud Monitoring (formerly Stackdriver Monitoring) and Cloud Logging to analyze, monitor, and alert on log data and events. Cloud Logging retains Admin Activity and System Event audit logs for 400 days; other logs are retained for 30 days by default.

I found the documentation helpful for understanding how policies and roles work throughout the GCP organization hierarchy.

Key Points:

- Follow the security principle of least privilege when granting IAM roles, i.e., grant only the minimum access necessary to your resources.

- Grant roles to Google groups instead of individual users whenever possible.

- Use service accounts, rather than individual user accounts, when applications access GCP services.

- Billing access can be granted for a project or group of projects without granting access to the underlying data/content.
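Least privilege matters most higher up the hierarchy. An allow policy set on an organization or folder is inherited by everything below it, so a member's effective access on a project is the union of the bindings on the project and all its ancestors. The sketch below models only that union. It ignores deny policies and IAM Conditions, and the resource and group names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Resource:
    """An organization, folder, or project with its own allow-policy bindings."""
    name: str
    parent: Optional["Resource"] = None
    bindings: dict = field(default_factory=dict)  # role -> set of members


def effective_roles(resource: Resource, member: str) -> set:
    """Union of roles granted to member on the resource and all of its ancestors."""
    roles = set()
    current = resource
    while current is not None:
        for role, members in current.bindings.items():
            if member in members:
                roles.add(role)
        current = current.parent
    return roles


org = Resource("organizations/example", bindings={"roles/viewer": {"group:analysts@example.com"}})
folder = Resource("folders/data", parent=org)
project = Resource("projects/analytics", parent=folder,
                   bindings={"roles/bigquery.dataViewer": {"group:analysts@example.com"}})

print(sorted(effective_roles(project, "group:analysts@example.com")))
# ['roles/bigquery.dataViewer', 'roles/viewer']
```

The organization-level `roles/viewer` grant reaches every project in the organization. Granting the narrower role on the project alone is the least-privilege choice.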

Data Protection

The Cloud Data Loss Prevention (DLP) API is a fully managed, scalable service that identifies sensitive data across storage services (Cloud Storage, BigQuery, etc.). It can also mask data, with or without preserving the data format.
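The difference between masking that keeps the format and masking that does not can be shown in plain Python. This sketch loosely mirrors DLP's character-masking and replace-with-infoType transformations, but it does not call the DLP API. The regex is a simplistic stand-in for DLP's detectors, and the function names are hypothetical.

```python
import re


def mask_digits(text: str, keep_last: int = 4, mask_char: str = "#") -> str:
    """Character masking: hide all but the last `keep_last` digits, keeping length and separators."""
    total_digits = sum(ch.isdigit() for ch in text)
    seen = 0
    out = []
    for ch in text:
        if ch.isdigit():
            seen += 1
            out.append(ch if seen > total_digits - keep_last else mask_char)
        else:
            out.append(ch)
    return "".join(out)


def replace_with_info_type(text: str, pattern: str, info_type: str) -> str:
    """Replacement: swap each match for its infoType name; the original format is lost."""
    return re.sub(pattern, f"[{info_type}]", text)


card = "4111-1111-1111-1234"
print(mask_digits(card))  # ####-####-####-1234
print(replace_with_info_type(f"card {card}", r"\d{4}(?:-\d{4}){3}", "CREDIT_CARD_NUMBER"))
# card [CREDIT_CARD_NUMBER]
```

Format-preserving output keeps downstream schemas and validations working. Full replacement removes more information.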

Data Catalog is a fully managed, scalable metadata management service. It helps you search for and understand data, make data-driven decisions, and govern your data.

Check out the legal compliance requirements for data security: Health Insurance Portability and Accountability Act (HIPAA), Children’s Online Privacy Protection Act (COPPA), FedRAMP, and General Data Protection Regulation (GDPR).

Courses and Books

Here are the courses and books that I found useful for mastering the GCP Data Engineering certification.

- If you have less hands-on experience with GCP, the Data Engineering Learning Path is quite effective for gaining both knowledge and hands-on experience.

- If you are experienced and want a quick overview of the data engineering learning path, consider going through “Google Cloud Professional Data Engineer: Get Certified 2022”.

The following books by Dan Sullivan and Priyanka Vergadia provide helpful insights into the various services and concepts covered on the GCP data engineering certification exam.

Practice Tests

Practice tests can be an important tool to familiarize yourself with the exam format, identify your area of weakness, increase your confidence and improve your test-taking strategies.

- The Official Google Cloud Certified Professional Data Engineer Study Guide book offers practice questions after each section.

- Sample questions from the Google Professional Data Engineer site.

- Dan Sullivan’s Google Cloud Professional Data Engineer Practice Tests include two simulated exams that follow the same format as the actual certification exam.

Conclusion

Preparing for the Google Cloud Professional Data Engineer exam requires a combination of knowledge, skills, and strategy. By reviewing the exam guide and becoming familiar with the objectives and skills tested, taking online courses or attending in-person training, gaining hands-on experience with the Google Cloud Platform, and using study materials and practice exams to supplement your learning, you can increase your chances of success on the exam.

With the right approach and the right resources, you can master the Google Cloud Professional Data Engineer exam in 2023.

=============================================

The Game Plan

Total duration (from 0 to certified): 1.5 months — assuming you are working full time

Total Cost: Exam Fee ($200) + Course Fee (~$30–40): about $240

1) Take A Cloud Guru’s GCP Data Engineer Course

The course contains all the high level knowledge you need to pass the exam.

Once you finish the content, go through the section reviews and quizzes at least twice. In my opinion, the course alone is NOT sufficient to be fully prepared for the exam, which is why you also need to...

2) Practice Several Mock Exams on Exam Topics

You can find about 5 or 6 mock exams on Exam Topics. Don't just use the questions to check whether you got something right or wrong. The goal is to expand on the course's knowledge: read the explanations thoroughly and search online for additional documentation on each topic.

Repeat this cycle at least twice for all of the mock exam questions. If you are short on time, focus only on the ones you get wrong.

Final tips

- Aim for an 80% score on your mocks. Don’t stress if you don’t reach it; the effort and work you put in will have you prepared by the time the exam comes.

- Self-Accountability is key. Getting this cert is worth it, so keep calm and keep going, you got this!

Reach out if you need further tips on passing this exam; I’m happy to help.

Thanks for reading!

=============================================

Hey everyone, I hope you all are doing well. Recently, I passed the very famous Google Cloud Professional Data Engineer certification exam. In this article, I will share the strategy and resources that helped me achieve this.

About the exam

The Professional Data Engineer exam assesses your ability to:

Design data processing systems

Operationalize machine learning models

Ensure solution quality

Build and operationalize data processing systems

The exam costs $200 and lasts 2 hours. You can expect about 50 questions.

My Background

I have been tinkering with Google Cloud services for the past 2 years and work as a Cloud Infrastructure and DevOps Engineer. My work experience contributed to my knowledge base and helped me understand Google Cloud at a detailed level. Since I hadn’t been working with data services, I had to make an extra effort to learn about data engineering.

Preparation Strategy

I prepared for all the data-related exams together over about 2 months, taking a parallel approach to learning for the certifications.

- Visit the Google Cloud Professional Data Engineer page on Google. It gives you an overview of the exam and what is required to earn the certification.

- Understand what the exam is about. Read the exam guide to see the contents in detail; it gives you a solid understanding of what you need to prepare.

- Read the official Google Cloud Platform documentation to clearly understand each GCP service. This builds your GCP knowledge, but you still have another essential factor to cover: “Hands-on experience”.

“No technical certification in the world will be useful to you if you don’t know how to implement it in real-world scenarios.”

Exam Experience

Since I don’t have much working experience with data, I only had a high-level understanding of the services and how they work. Maybe for that reason, the exam was very tough for me. I thought I was going to fail because I found it difficult to understand the questions and match them to the options to find the correct answer.

I had to re-read the questions and options before answering, and I flagged the questions I didn’t know. After the first pass, I came back to the flagged questions and spent time answering them. After answering all the questions, I reviewed them again and changed some of the answers I had chosen earlier out of confusion. My heart was racing the whole time as I neared submission. Only after I saw the PASS result was I able to breathe a sigh of relief.

Resources

- Sathish VJ’s AwesomeGCP Certification Repo

- Ivam Luz’s GCP Checklist

- Google Cloud documentation

- Cloud Skills Boost Learning Path

- Google Cloud Practice Questions

- GCP Data Engineer Coursera Course

- Google Cloud video on Machine Learning

- Official Study Guide by Dan Sullivan

- Dan Sullivan’s Udemy Course

Practice, practice, and practice

==================================================

What is the exam about?

In this section, we provide an overview of the exam and the topics you may encounter.

Duration: 2 hours

Cost: $200

Number of questions: 50

Sections :

- Designing Data Processing Systems

- Building and Operationalizing Data Processing Systems

- Operationalizing Machine Learning Models

- Ensuring Solution Quality

What should you know before going to the exam?

Please, KNOW your SQL. Here is the best FREE resource I could find online for people who want to learn SQL.

Know your Spark and Hadoop very well; it helps! Here are good resources for those who want to break into those technologies.

Otherwise, here is a list of the concepts you need to study for every section of the exam (I tried to make it comprehensive; let me know if I missed something):

- Section 1. Designing Data Processing Systems: General skills (data modeling, schema design, distributed systems, the latency/throughput/transaction tradeoff, data visualization, batch and stream processing, online and batch predictions, migration, high availability and fault tolerance, serverless, message queues/brokers, workflow orchestration, SQL); Google-specific skills (BigQuery, Dataflow, Dataproc, Dataprep, Data Studio, Cloud Composer, Pub/Sub, Data Transfer Service, Transfer Appliance); open-source skills (Apache Beam, Apache Kafka, Apache Spark, Hadoop ecosystem, SQL)

- Section 2. Building and Operationalizing Data Processing Systems: General skills (managed vs. unmanaged services, storage costs and performance, data lifecycle management, data acquisition, data source integration, monitoring, adjusting, and debugging pipelines); Google-specific skills (Cloud Bigtable, Cloud Spanner, Cloud SQL, Cloud Storage, Datastore, Memorystore); open-source skills (none listed)

- Section 3. Operationalizing Machine Learning Models: General skills (machine learning, e.g. features, labels, models, regression, classification, recommendation, supervised and unsupervised learning, evaluation metrics); Google-specific skills (Google ML APIs such as Vision, Speech, …; AutoML, Dialogflow, AI Platform, BigQuery ML, GPU vs. TPU); open-source skills (Spark ML, Kubeflow, Airflow, …)

- Section 4. Ensuring Solution Quality: IAM; security (encryption, key management); legal constraints (HIPAA, GDPR, …); troubleshooting; monitoring; autoscaling; data staging, cataloging, and discovery; ACID, idempotency, eventual consistency; data lakes; data warehouses; data marts

Time management and exam taking strategies

- Start with the questions that have the shortest statements/options

- Answer the ones you’re sure about

- Work through the remaining questions by elimination

- Flag the ones you’re unsure about for review

- Keep at least 20–30 minutes at the end to review your answers
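The review reserve translates into a concrete pace. This sketch uses the 120 minutes and 50 questions stated above and assumes a 25-minute reserve, the midpoint of the 20–30 minutes suggested. The function names are hypothetical.

```python
def minutes_per_question(total_min: int = 120, questions: int = 50, review_min: int = 25) -> float:
    """Average time available per question after reserving review time at the end."""
    return (total_min - review_min) / questions


def checkpoints(total_min: int = 120, questions: int = 50, review_min: int = 25, every: int = 10) -> list:
    """(question number, minute mark) targets to check your pace against."""
    pace = minutes_per_question(total_min, questions, review_min)
    return [(n, round(n * pace, 1)) for n in range(every, questions + 1, every)]


print(minutes_per_question())  # 1.9
print(checkpoints())           # [(10, 19.0), (20, 38.0), (30, 57.0), (40, 76.0), (50, 95.0)]
```

If you are behind a checkpoint, flag the current question and move on rather than spending the reserve early.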

BEST (opinionated …) Resources to crack the GCP Data Engineer certification exam

Here is a curated list of the best resources for GCP training AND practice

Training

What I’ve personally used:

- Coursera’s Google Data Engineering Professional Certification Program — There is no better way to learn about Google Cloud than hearing from Googlers themselves: Throughout this training, Google Engineers, Content Training Designers and Developer Advocates not only teach you through Google Data Services, they will

Other resources that are popular on the Internet

- A Cloud Guru’s Google Data Engineering Course by Tim Berry is a very good option to prepare for the exam.

- Udemy’s Dan Sullivan course is a very popular option as well

Practice

What I’ve personally used

- Qwiklabs: Qwiklabs is an online platform that provides end-to-end training in cloud services. It provides temporary credentials for Google Cloud Platform and Amazon Web Services, so you can get real-life experience working on different cloud platforms. Its offerings range from 30-minute individual labs to multi-day courses, from introductory to expert level, instructor-led or self-paced.

- Doing things in my own console: Google offers $300 in credit when you try its cloud services for the first time.

- WhizLabs GCP Data Engineer Practice exams

Other resources that may help you if you want to dig deeper

- Coursera Guided Projects: https://www.coursera.org/

Introduction

Hi! This is a short article where I share my thoughts and the steps I took to pass the Google Cloud Professional Data Engineer certification exam on my first attempt in 2023. I will address the following questions:

- What is the exam about?

- Steps I took before going to the exam

- On the exam date

- Official certification

- Conclusion

What is the exam about?

Duration: 2 hours

Cost: $200

Number of questions: 50

Sections :

- Designing Data Processing Systems

- Building and Operationalizing Data Processing Systems

- Operationalizing Machine Learning Models

- Ensuring Solution Quality

Steps I took before going to the exam

Training (1–1.5 months)

Udemy — GCP — Google Cloud Professional Data Engineer Certification by Ankit Mistry (Cost: $12.99) (Total: 23.5 hours)

Hands-on practice (1 month)

Cloud Skills Boost (a platform powered by Qwiklabs) for hands-on GCP practice (a quest is a series of labs)

Quest: Data, ML, AI

I use Cloud Skills Boost to practice GCP because my company is a Google partner.

Mock exams (1–2 weeks)

Skillcertpro: Google Cloud Professional Data Engineer Exam Questions 2023 (Cost: $19.99)

Examtopics: Google Professional Data Engineer Exam (my company bought the full version in PDF format)

Skillcertpro has more accurate answers than Examtopics, although Examtopics displays community voting results and comments. Sometimes the two platforms give contradictory answers. Most of the questions in the actual exam are very similar to the mock exams; I would say about 80% similar. Don't worry about your mock exam accuracy: I scored 60%+ every time. The passing score for the real test is reportedly around 70%; Google does not publish the exact cut-off.

Question:

You have some data, which is shown in the graphic below. The two dimensions are X and Y, and the shade of each dot represents what class it is. You want to classify this data accurately using a linear algorithm. To do this you need to add a synthetic feature. What should the value of that feature be?

Skillcertpro's answer: cos(x)

Examtopics' answer: cos(x)

Examtopics community's most-voted answer (77%): x²+y²
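The graphic is not reproduced here. Assume it shows one class clustered around the origin and the other class in a ring around it. Under that assumption, a short sketch shows why the community favors x²+y². That single feature lets one linear threshold separate the classes, whereas cos(x) alone does not. The ring data and helper names are hypothetical.

```python
import math


def make_ring_data(n: int = 12) -> list:
    """Class 0 on a circle of radius 1, class 1 on a circle of radius 3 (hypothetical data)."""
    points = []
    for k in range(n):
        angle = 2 * math.pi * k / n
        points.append((math.cos(angle), math.sin(angle), 0))
        points.append((3 * math.cos(angle), 3 * math.sin(angle), 1))
    return points


def separable_by_threshold(values_and_labels: list) -> bool:
    """True if a single threshold on one feature splits the two classes perfectly."""
    zeros = [v for v, label in values_and_labels if label == 0]
    ones = [v for v, label in values_and_labels if label == 1]
    return max(zeros) < min(ones) or max(ones) < min(zeros)


points = make_ring_data()
radial = [(x * x + y * y, label) for x, y, label in points]
cosine = [(math.cos(x), label) for x, y, label in points]
print(separable_by_threshold(radial))  # True: about 1 vs. about 9
print(separable_by_threshold(cosine))  # False: both classes reach cos(x) = 1 near x = 0
```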

Question in Examtopics

You are responsible for writing your company’s ETL pipelines to run on an Apache Hadoop cluster. The pipeline will require some checkpointing and splitting pipelines. Which method should you use to write the pipelines?

A. PigLatin using Pig

B. HiveQL using Hive

C. Java using MapReduce

D. Python using MapReduce

Examtopics' answer: D

Examtopics community's most-voted answer: A

Question with ambiguous answer:

You are deploying MariaDB SQL databases on GCE VM Instances and need to configure monitoring and alerting. You want to collect metrics including network connections, disk IO and replication status from MariaDB with minimal development effort and use StackDriver for dashboards and alerts.

What should you do?

A. Install the OpenCensus Agent and create a custom metric collection application with a StackDriver exporter.

B. Place the MariaDB instances in an Instance Group with a Health Check.

C. Install the StackDriver Logging Agent and configure fluentd in_tail plugin to read MariaDB logs.

D. Install the StackDriver Agent and configure the MySQL plugin.

Skillcertpro answer: A

Examtopics answer: C

Examtopics community vote most: D

On the exam day

My exam was scheduled for 10 AM. I woke up early and skimmed through the mock exams to get a better feel for what would appear in the real exam. At the test center, they ask for photo IDs (I showed my passport and a credit card), and then I provided a passcode sent by the test center. The testing room is much quieter than home, with one person per room. I would recommend taking the exam at a test center rather than the online at-home exam.

After you answer the 50 questions, a short survey shows up. After the survey, a pass or fail result appears on the screen, without score details. You will receive the official certification in your email inbox in 7 to 10 days.

Official certification

I passed my exam at the test center on Feb 2 and got the official email from Google on Feb 10. Once you’ve passed, you’ll be emailed a redemption code alongside your official Google Cloud Professional Data Engineer certification. Congratulations!

Conclusion

I spent some time searching for online courses, and these are the ones that worked for me. You may adjust accordingly based on your time and what you really want to get out of the certification. I sincerely hope you are able to pass the exam with these tips and apply this knowledge at work or in a side project!

Reference

Jason Tzu-Cheng Chuang [2023] pass Google Cloud Professional Data Engineer Exam

Chouaieb Nemri [2022] pass Google Cloud Professional Data Engineer Exam

Yong Chen Ming [2021] pass Google Cloud Professional Data Engineer

===============================================================

Here are four examples of important things you must know if you want to earn your Google Cloud Professional Data Engineer certificate:

1. Hotspotting in Cloud Spanner and BigTable

Hotspotting can occur on the database server when monotonically increasing row keys are in use. Because rows are stored in key order, new rows all end up on one or a few servers close together (unwanted). What we want instead is an even distribution across all servers.

How do we avoid hot spotting?

- Using UUID (Universally Unique Identifier)

- Using bit-reversed sequential values: if your primary key is monotonically increasing, you can reverse the bits of each value, which spreads consecutive values across the key space and gets rid of the problem.

- Swapping column order in keys so that higher-cardinality attributes come first: as a result, the beginnings of the keys are more likely to be non-sequential.
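To make the bit-reversal idea concrete, here is a plain-Python sketch that uses 8-bit keys for readability. The `split_of` helper is a hypothetical model of a database that divides the key space into equal ranges, one per server; it is not how Spanner or Bigtable actually assign splits, and it is not Spanner's built-in bit-reversed sequence feature.

```python
def bit_reverse(value: int, width: int = 64) -> int:
    """Reverse the lowest `width` bits of a non-negative integer."""
    if not 0 <= value < (1 << width):
        raise ValueError("value must fit in `width` bits")
    result = 0
    for _ in range(width):
        result = (result << 1) | (value & 1)  # append the lowest remaining bit
        value >>= 1
    return result


def split_of(key: int, width: int = 8, num_splits: int = 4) -> int:
    # Hypothetical model: the key space [0, 2**width) is cut into equal ranges,
    # and each range is served by a different server.
    return (key * num_splits) >> width


sequential_ids = list(range(1, 9))
print([split_of(k) for k in sequential_ids])                  # [0, 0, 0, 0, 0, 0, 0, 0]
print([split_of(bit_reverse(k, 8)) for k in sequential_ids])  # [2, 1, 3, 0, 2, 1, 3, 0]
```

In this model all eight sequential IDs hit the same server, while their bit-reversed versions are spread evenly over four. Because reversal is its own inverse (`bit_reverse(bit_reverse(v, 8), 8) == v`), the original sequence number can always be recovered.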

2. Kafka vs PubSub

- Kafka guarantees message ordering (within a partition), but Pub/Sub does not unless message ordering with ordering keys is enabled

- Kafka offers tunable message retention, whereas standard Pub/Sub subscriptions retain unacknowledged messages for at most 7 days

- Kafka supports only pull-based consumption, while Pub/Sub supports both push and pull subscriptions

- Kafka is unmanaged, while Pub/Sub is a managed service

In general, it is recommended to know the open-source equivalent of each Google Cloud product covered by the exam. Nowadays, there are a lot of migrations from open-source systems to the cloud, so as a Data Engineer you should be ready to map those technologies to the correct corresponding product.

Another example is HBase and BigTable.

3. Partition and Clustering in BigQuery

If the common query pattern is to extract data from the previous day, and your table already holds 5 years of data, isn’t it a waste of processing resources to query the whole table every time just to get a small subset of data?

Here’s where partitioning comes in handy.

You can divide your table based on a date column. As a result, you can access a specific partition (date) and will only be charged for querying that subset of data.
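The saving is easy to estimate with some arithmetic. The sketch below models a hypothetical table with one equally sized partition per day over 5 years (2019-01-01 to 2023-12-31) and counts the bytes a query would read with and without a filter on the partitioning column. The 2 GB per day is an assumed figure, and the model ignores that BigQuery is columnar and bills only the columns a query actually reads.

```python
from datetime import date, timedelta
from typing import Dict, Optional

GB = 10**9  # decimal gigabyte, for illustration only


def build_daily_partitions(start: date, end: date, bytes_per_day: int) -> Dict[date, int]:
    """Hypothetical table: one partition per day in [start, end), all of equal size."""
    return {start + timedelta(days=i): bytes_per_day for i in range((end - start).days)}


def bytes_scanned(partitions: Dict[date, int], day: Optional[date] = None) -> int:
    # No filter on the partitioning column: every partition is read.
    if day is None:
        return sum(partitions.values())
    # Equality filter on the date: only the matching partition is read (partition pruning).
    return partitions.get(day, 0)


table = build_daily_partitions(date(2019, 1, 1), date(2024, 1, 1), bytes_per_day=2 * GB)
full_scan = bytes_scanned(table)
previous_day = bytes_scanned(table, day=date(2023, 12, 31))
print(len(table), full_scan // GB, previous_day // GB)  # 1826 3652 2
```

In this model the daily query reads 2 GB instead of about 3.65 TB, i.e. 1/1826 of the table.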

BigQuery Partition types are:

- Ingestion time partitioned tables (especially useful if the table does not contain any date/timestamp column)

- Timestamp partitioned tables

- Integer range partitioned tables

Clustering is the ordering of data in its stored format in BigQuery. When you cluster a table, you define one to four clustering columns and their order. By picking an appropriate combination of columns, you can colocate data that is frequently accessed together in storage. Clustering was originally limited to partitioned tables, but BigQuery now supports it on non-partitioned tables too, and it is most valuable when queries regularly filter or aggregate on the clustered columns.

For example, your queries might regularly filter customers by country and age.

This could be your clustering definition!
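Here is a toy model of why that clustering definition helps. BigQuery stores clustered data in sorted blocks and can skip (prune) blocks whose contents cannot match a filter; the sketch imitates this with hypothetical customer rows, fixed blocks of 50 rows, and per-block min/max metadata. The row generator, block size, and pruning rule are all assumptions for illustration, not BigQuery's actual storage format.

```python
COUNTRIES = ["DE", "FR", "JP", "US"]
BLOCK_SIZE = 50


def make_rows(n: int = 400):
    # Hypothetical customer rows: (country, age), generated deterministically.
    return [(COUNTRIES[i % 4], 18 + (i * 7) % 48) for i in range(n)]


def to_blocks(rows):
    # Storage blocks of BLOCK_SIZE rows, each with min/max metadata per column.
    blocks = []
    for start in range(0, len(rows), BLOCK_SIZE):
        chunk = rows[start:start + BLOCK_SIZE]
        countries = [c for c, _ in chunk]
        ages = [a for _, a in chunk]
        blocks.append({"country": (min(countries), max(countries)),
                       "age": (min(ages), max(ages))})
    return blocks


def blocks_read(blocks, country: str, min_age: int) -> int:
    # A block is skipped when its metadata proves no row can match
    # WHERE country = <country> AND age >= <min_age>.
    return sum(
        1 for b in blocks
        if b["country"][0] <= country <= b["country"][1] and b["age"][1] >= min_age
    )


rows = make_rows()
unclustered = to_blocks(rows)
clustered = to_blocks(sorted(rows))  # sorted by (country, age), like clustering on country, age
print(blocks_read(unclustered, "DE", 40), blocks_read(clustered, "DE", 40))  # 8 1
```

Without clustering, every block mixes all countries and ages, so all 8 blocks must be read; after sorting by (country, age), only one block can contain German customers aged 40 or more.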

4. Windowing in Apache Beam

It’s best if you understand the concept of windowing and the different window types, especially sliding windows.

I highly recommend checking the Apache Beam website. The Beam authors did a great job on the documentation, and windowing is explained very well here: https://beam.apache.org/documentation/programming-guide/#windowing
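The following plain-Python sketch shows how each window type assigns elements by their event-time timestamp (in seconds). It follows the semantics described in the Beam programming guide (fixed windows without an offset, sliding windows, and sessions with a gap duration), but it is only an illustration: in a real pipeline you would apply `beam.WindowInto` with `window.FixedWindows`, `window.SlidingWindows`, or `window.Sessions` from the Beam SDK rather than code like this.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True, order=True)
class Window:
    start: int  # inclusive, in seconds
    end: int    # exclusive, in seconds


def fixed_windows(ts: int, size: int) -> List[Window]:
    # Fixed (tumbling) windows: every element falls into exactly one window.
    start = ts - ts % size
    return [Window(start, start + size)]


def sliding_windows(ts: int, size: int, period: int) -> List[Window]:
    # Sliding (hopping) windows start every `period` seconds and last `size` seconds,
    # so with size > period an element belongs to several overlapping windows.
    windows = []
    start = ts - ts % period  # latest window start at or before ts
    while start > ts - size:  # go back while the window still covers ts
        windows.append(Window(start, start + size))
        start -= period
    return sorted(windows)


def session_windows(timestamps: List[int], gap: int) -> List[Window]:
    # Session windows: each element proposes [ts, ts + gap); overlapping proposals merge,
    # so a session ends after `gap` seconds without new elements (a single key is assumed).
    sessions: List[Window] = []
    for ts in sorted(timestamps):
        if sessions and ts < sessions[-1].end:
            last = sessions[-1]
            sessions[-1] = Window(last.start, max(last.end, ts + gap))
        else:
            sessions.append(Window(ts, ts + gap))
    return sessions


print(fixed_windows(75, size=60))                  # [Window(start=60, end=120)]
print(sliding_windows(75, size=60, period=30))     # [Window(start=30, end=90), Window(start=60, end=120)]
print(session_windows([0, 10, 100, 105], gap=30))  # [Window(start=0, end=40), Window(start=100, end=135)]
```

Note that the element at t = 75 s lands in one fixed window but in two sliding windows, which is why sliding windows suit running averages.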

==========================================

About Professional Data Engineer:

The exam tests your ability to design, build, operationalize, secure, and monitor data processing systems, with a particular emphasis on security and compliance; scalability and efficiency; reliability and fidelity; and flexibility and portability. You should also be able to leverage, deploy, and continuously train pre-existing machine learning models.

Services you should focus on:

Integration

- Pub/Sub: global event ingestion, how it differs from Kafka, and when to use which.

- Data Fusion: real-time and batch replication from operational databases to the data warehouse, pipeline integration.

- Storage Transfer Service: when to use it, and its benefits over gsutil.

Data Processing

- Dataprep: how it can help business users who don't code.

- Dataproc: moving existing Hadoop/Spark jobs without much change, Hadoop modernization, cost-saving methods, when to use standard disks or SSDs, and Dataproc Serverless.

- Dataflow: understand Apache Beam, the different operators used in a pipeline, which window type to use when (fixed, also called tumbling; sliding; session), and pipeline monitoring.

- Video Intelligence API: different use cases.

- NLP API: different use cases.

Data Store

- Bigtable: different use cases, how to design row keys, how to share a cluster across different uses (e.g. production and an analytics team), instance monitoring, and key performance.

- Different managed database services: when to use which database, and how to design for high availability.

- Cloud Storage: benefits over HDFS, cost savings for longer-term storage, integration with other cloud services.
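For the row key design point, here is a sketch of one pattern from the Bigtable schema design guidance: promote a high-cardinality field such as a device ID to the front of the key, and append a reversed timestamp so that the newest rows for each device sort first. The `#` separator, the 13-digit padding, and the `MAX_TS` bound are assumptions; the code only builds strings and does not call the Bigtable client.

```python
MAX_TS = 10**13  # hypothetical upper bound on epoch-millisecond timestamps (13 digits)


def row_key(device_id: str, event_ms: int) -> str:
    # Field promotion: the device ID comes first, so writes from many devices spread
    # across the key space instead of piling up at the "latest timestamp" end.
    # Reversed timestamp, zero-padded so that string order matches numeric order:
    # newer events get smaller values and therefore sort first within a device.
    reversed_ts = MAX_TS - 1 - event_ms
    return f"{device_id}#{reversed_ts:013d}"


events = [("sensor-b", 1_000), ("sensor-a", 1_000), ("sensor-a", 2_000), ("sensor-b", 3_000)]
keys = sorted(row_key(device, ts) for device, ts in events)
for key in keys:
    print(key)
# sensor-a#9999999997999
# sensor-a#9999999998999
# sensor-b#9999999996999
# sensor-b#9999999998999
```

A scan with the prefix `sensor-a#` then returns that device's readings newest first, as one contiguous key range.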

Data analysis

- BigQuery: different use cases, pricing model, clustering, performance optimization best practices.

- BigQuery ML: the types of models that can be used, how to train them (feature preparation, cleaning), how to overcome overfitting and underfitting, and the benefits of hyperparameter tuning.

Mixed services:

- Cloud Composer: pipeline orchestration tool, pipeline automation, basic Airflow concepts.

- Analytics Hub: different use cases, like data syndication and data monetization.

- Cloud IAM: how to control granular access to data using GCP's recommended security practices.

- Networking: VPC service limit, private IP.

- Resource Governance: Logical hierarchy of GCP resources.

Resources to Prepare:

https://www.cloudskillsboost.google/: enroll in the Data Engineer learning path, and go through the videos and labs.

https://cloud.google.com/: go to the product pages for the services mentioned above and read about their use cases, features, and reference architectures.

Understand the basic architecture of competing open-source products.

Try 1–2 practice exams before the final attempt.

==================================

- Print and Study the Exam Guide — get to know the lay of the land. https://cloud.google.com/certification/guides/data-engineer

- Master Exam Sample Questions: I was skeptical of how simple the sample questions were compared to other Professional cloud exams, but they are right on target. They are also a good gauge of readiness. I would not take them until I was about 70% read up on the services, as they make a good practice exercise.

- Official Google Cloud Certified Professional Data Engineer Study Guide (https://www.wiley.com/en-us/Official+Google+Cloud+Certified+Professional+Data+Engineer+Study+Guide-p-9781119618454): this resource was my favorite and a must. I mean, it's from the source, certified by the source. Bonus: getting this book gives you access to a few online practice tests, with a good interface and representative of the real exam. I read this book in one day.

- QwikLabs (Cloud Skills Boost) for Data Engineer (https://www.cloudskillsboost.google/course_templates/3?utm_source=gcp_training&utm_medium=website&utm_campaign=cgc): I thought the content was good, and it's good to get your hands dirty with the key services.

- Whizlabs practice tests: Whizlabs has 5 tests you can buy for $40, and I decided that I needed more test practice. I found these tests overly specific and soul-crushing. I didn't feel I got much out of them other than self-doubt, but the good thing about these types of exams is that you typically get a question wrong only once. I took all 5 tests (one after the other) the night before the actual exam and scored 50% on the last one… not a good feeling going into the exam. I'm not sure I would recommend this.

- Read Google Docs on Key Services focused on Security, Cost optimization, Integrations, Availability, Replication, etc.

- ML Crash Course (https://developers.google.com/machine-learning/crash-course): this was a great resource for learning ML.

Master Key Services: these are like the Infinity Stones of this test. Here is my rough distribution; the point is that BigQuery is the Soul Stone!!

- BigQuery —30%

- Dataflow — 20%

- Big Table — 10%

- PubSub — 20%

- ML — 10%

Exam Reflection

A couple of focus items on exam questions. The exam is broad but knowing these will be very helpful.

- BQ Authorized Views vs Table Permissions: this is a notorious BQ exam question I've seen everywhere, where you are expected to select authorized views to segment access to tables within a dataset. Since this test was created, that has changed: you can now grant access to individual BQ tables more granularly. My advice is to answer with authorized views in mind until they update the exam, but that's a personal call.

- BQ Optimization: know how to optimize BQ for cost, performance, and security, including partitioning, clustering, materialized views, BQ streaming patterns, caching, and availability.

- BigTable — Key design is key (PUN!) including replication strategies.

- Cloud SQL/Spanner: when to use each, and know the limits of each service (e.g. database size).

- Dataflow: know the Beam API and when to use each part of it, e.g. side inputs vs ParDo. I felt Dataflow went pretty deep on the test. Know windowing too; you will get 2–3 questions on it.

- ML: I didn't get a lot of questions here, and the ones I got were very hard. I was shocked, since I was amped up for ML being featured. I know some services were rebranded (AutoML to Vertex AI), but exams take time to catch up. The ML Crash Course is really good for building a solid understanding, e.g. how to tweak neural networks, how to tune for overfitting/underfitting, and how to deploy TensorFlow models. Know algorithm types and metrics.

Overall, this is a fun, achievable exam, and I feel its focus is meaningful for understanding what makes GCP special: data services. It's a great way to show your organization you are serious on this front and to prove your mastery of these services. For me, I will take a break and celebrate the exam before getting to work on enabling these services.

=========================================================

Google Cloud Certified Professional Data Engineer exam tests your ability to design, deploy, monitor, and adapt services and infrastructure for data-driven decision-making.

The whole preparation took me nearly 2 months, but with more focused time I could have done it in 1 month. These are the steps I took to pass the exam:

- Basic concepts from a Udemy course

- Google official video courses (Theory + Practical)

- Books and resources

- Official documentation

- Quizzes/ExamTopics/Mock test

- Cheatsheet

- Notes

- Assessment

- Tips

1. Basic concepts from Udemy courses

I followed the course Google Cloud Professional Data Engineer: Get Certified 2022 by Dan Sullivan and took all of its classes. The public price is €79.99, and it covers the following topics:

- Build scalable, reliable data pipelines

- Choose appropriate storage systems, including relational, NoSQL and analytical databases

- Apply multiple types of machine learning techniques to different use cases

- Deploy machine learning models in production

- Monitor data pipelines and machine learning models

- Design scalable, resilient distributed data intensive applications

- Migrate data warehouse from on-premises to Google Cloud

- Evaluate and improve the quality of machine learning models

- Grasp fundamental concepts in machine learning, such as backpropagation, feature engineering, overfitting and underfitting.

At the end of the course, there are 50 sample questions to solve in 2 hours, just like in the real exam.

2. Google official video courses (Theory + Practical)

I recommend going through all the Professional Data Engineer Google course videos and practicals on the official site.

The official guide for the Data Engineer exam is here.

This course covers the following topics:

- Design data processing systems

- Ensure solution quality

- Operationalize machine learning models

- Build and operationalize data processing systems

It has the following 9 major classes, with which you can earn a badge from Google:

1. Google Cloud Big Data and Machine Learning Fundamentals

2. Data Engineering on Google Cloud

3. Serverless Data Processing with Dataflow Specialization

4. Create and Manage Cloud Resources

5. Perform Foundational Data, ML, and AI Tasks in Google Cloud

6. Engineer Data in Google Cloud

7. Preparing for the Google Cloud Professional Data Engineer Exam

8. Professional Data Engineer

9. Register for your certification exam

I followed the above courses using a partner account, so they were completely free, and free credits were available for the labs.

- Linux Academy course (Took 4 weeks + 2 weeks (revision)) — 75% success rate

- Coursera GCP Data Engineering Specialization (6 courses)(Took ~4 Weeks) — 20%

3. Books and resources

I strongly recommend going through the Official Google Cloud Certified Professional Data Engineer Study Guide [Book] by Dan Sullivan. This study guide offers 100% coverage of every objective for the Google Cloud Certified Professional Data Engineer exam.

This book covers most of the exam topics, and there are around 20 quizzes for each chapter.

4. Official documentation

It is also very important to go through the official documentation to get up-to-date information about Google products and services.

For example, an old mock question's answer will sometimes lead you in the wrong direction. Table-level access control is now possible in BigQuery, but it wasn’t before. So please don't blindly follow the quiz answers that are provided by default; if you have any doubt, do your own research in the official docs. I have used this scenario as an example, but there are other similar cases.

5. Quizzes/ExamTopics/Mock test

The most important part of the preparation is going through as many quizzes as possible and verifying each answer with sound reasoning. To pass the actual exam, you have to spend more time learning and re-learning through multiple practice tests.

I recommend the following sites for exam preparation:

- Examtopics: there is a robot check after every 10 questions; you have to pay to get rid of these checks, which lets you focus better on your preparation. Beware of wrong answers on Examtopics, and search for yourself to make sure an answer is correct. Cost: FREE

- PassExam: I also prepared with PassExam, which provides the right answers for most of the questions, with a reason in the description and related official links. Cost: FREE

- WhizLabs: there are around 25 multiple-choice questions with which you can get insights into the certification and feel a little more confident. Cost: FREE

- Sample Questions from Google: there are around 25 sample questions from Google at the end of its official course. You get the answers and explanations once you submit the form, but they are not guaranteed to help you pass the exam.

6. Cheatsheet

I recommend going through the cheatsheet once. It is currently a 9-page reference on data engineering on Google Cloud Platform, covering the data engineering lifecycle, machine learning, Google case studies, and GCP’s storage, compute, and big data products.

Going through only this cheatsheet is not guaranteed to help you pass, but it can help you revise and refresh the topics you have prepared over the preceding period.

7. Notes

Note-taking app: Notion, the best app for note-taking.

Notion helped us revise all the essential topics effectively before exams. I recommend taking notes on the following topics.

These are the core GCP products that the assessment covers.

However, there was some overlap with other Google tools too.

8. Tips

- Preparation is key to success. I spent many weekends and holidays preparing for this exam.

- You can eliminate all the answers that recommend non-GCP solutions.

- As with many multiple-choice exams, eliminate. If you can’t eliminate to one possible answer, make a guess.

9. Assessment

I took the assessment via Kryterion’s Webassessor. Exam duration: 2 hours; registration fee: $200 (plus tax where applicable); languages: English and Japanese; exam format: multiple choice and multiple select, taken remotely or in person at a test center.

On exam day, I had to show my surroundings via webcam, turn off my phone, and empty my desk. If your name is not visible, you have to take a photo with your phone and show it to the proctor on the other end. All this took about half an hour. I didn’t have to talk to the person behind the camera, but you can chat with them. The proctor also monitors you via webcam during the assessment.

I found the questions a bit hard and challenging. In the end, I got a provisional pass, which, for whatever reason, had to be confirmed by Google. That confirmation came 4–10 days later. So now I am officially a Google Certified Professional Data Engineer 🎉 and here is my official Google certification!

------------------------------------------------------------------------------

My Background:

First and foremost, I am a Data Engineer with approximately 3.5 years of GCP experience and 4+ years overall. I’ve always wanted to become a Google Cloud Data Engineer because it’s the greatest cloud service provider for analytics and offers the most cutting-edge technologies to solve real-world problems. I have hands-on experience with only a handful of GCP products, like BigQuery, Data Fusion, Composer, GCS, Stackdriver, and Dataproc. So, when I started studying for the certification, the majority of the products were still unfamiliar to me.

About the Exam: Google Cloud Certified Professional Data Engineer

- Num of questions: 50

- Exam format: Multiple choice with multiple select (no case study questions)

- Length of the exam: 2 hours (you can leave as soon as you’re finished)

- Registration fee: $200 (plus tax where applicable)

- Validity: 2 years

- Recommended experience: 3+ years of industry experience including 1+ years designing and managing solutions using Google Cloud (not mandatory)

- Languages: English, Japanese.

- Mode of the exam: Online-proctored, and test center proctored

- Results: you get the result (PASS/FAIL) immediately after clicking the submit button. The official certificate is sent to your registered email inbox in approximately 2–5 working days. (I got it just after the exam day.)

- Perks: this is the cool part. Once you pass the certification, Google Cloud sends a voucher code along with the certification as a token of achievement. You can redeem it once and choose any of the available products.

Learning Track

The Basics:

- Read the exam overview of the Professional Data Engineer

- Read the exam guide for the Professional Data Engineer

- Understand the products offered by Google Cloud that are relevant to the topics in the exam guide. If you’re new to Google Cloud, it is advisable to go through a GCP fundamentals course.

Generic Learning Path:

Once you’ve got the gist of all the products you use as a Data Engineer in GCP, it’s time to put that knowledge to work, i.e. practice labs. Many learning platforms offer practice labs (Qwiklabs) with in-depth explanations. Here are a few of the resources:

- Official Google Cloud Certified Professional Data Engineer Study Guide

- Online resources like Acloudguru, Pluralsight, and Coursera

- Official Google Cloud Documentation

- GCP PDE sample questions

- Practice tests (do them until you get 90% on every exam): Dan Sullivan (Udemy), Whizlabs

My Study Guide:

- GCP fundamentals course by Janakiram MSV: For understanding the GCP basics.

- Data Engineering, Big Data, and Machine Learning on GCP Specialization

- Official Google Cloud Documentation

- GCP Study Guide by Ivam Luz

- GCP Data Engineering articles on Medium

- Practice tests: I did 8 practice tests in total to compensate for my short study time of 3 weeks (high-intensity training, lol)

- Google Data Engineering Cheatsheet by Maverick Lin

- "In a minute" videos by Google Cloud Tech: they are your best friends on the day before the exam for quickly revising all the concepts.

Before starting my preparation for the certification, the first thing I did was write down my overall understanding of GCP: the concepts I was confident in, the products I had good working experience with, and the architectures I had worked on in GCP. This gave me an overall idea of my GCP expertise. The evaluation showed it was clearly not enough: when I attempted the sample exam, I got 60% of the questions right, and most of the wrong answers were on best practices. So I drew up a 3-week preparation plan.

Week-1:

Back in 2020, I finished the Data Engineering, Big Data, and Machine Learning on GCP Specialization, so I quickly rewatched most of the videos at 2x speed. After this, I took one practice test on Whizlabs and got 45%. As usual, most of my wrong answers were on best practices, pricing, and connecting multiple Google products with each other.

I decided to focus more on the best practices, performance optimization, and cost optimization of the popular GCP products used in data engineering, like BigQuery, Bigtable, Dataproc, Dataflow, and relational databases. In the first week of preparation, I managed to finish Storage, Databases, and Analytics (except ML), and my score immediately improved in most of the practice tests. It’s good to see progress, as it boosts your motivation to study even more.

Week-2:

In the second week, I mostly focused on the ML part of the certification, as 35% of the questions in the PDE exam are related to GCP ML services and their usage. I covered all the concepts and their use cases, with best practices, in 3 days and started taking practice tests on these concepts. The ML questions are mostly scenario-based, asking which GCP product to use, and most of them are about ML features and operations.

For ML, I mostly studied the official documentation (I had no prior knowledge of ML); it gets straight to the point, and I suggest you do the same. I also read a few blogs on ML terminology and features, as I was very new to ML.

Week-3:

The final week was crucial for understanding compute, networking, monitoring, security, migration, and data transfer services, along with revising weeks 1 and 2. Most of the practice questions on these topics mix several services, so a good understanding of architectural flows will surely come in handy for most of them.

I did two practice exams two days before the exam and scored 85 and 95, so I was confident about clearing the exam. The day before the exam, I went through the Google Data Engineering Cheatsheet by Maverick Lin, which covers almost everything at a general level, including most of the products and their best use cases. Next, I watched "In a minute" videos on the Google Cloud Tech channel on YouTube to quickly revise all the concepts.

Tips for the Exam

- Do as many practice tests as possible: they give you a practical approach to solving the questions and increase your answering speed.

- Google wants to test you on its major products and sometimes on a mix of data engineering recipes, so it’s good to read a few blog articles on data engineering best practices.

- If you’re confused, always choose the GCP product over other solutions; after all, the exam is about Google Cloud.

- The exam questions are similar to the sample questions but 3–4x more complex and harder.

- Get a clear understanding of monitoring; monitoring questions are often mixed with other GCP services like BQ and storage.

- Understand ML terminology and vocabulary. I had trouble with the ML questions during practice tests, and learning the vocabulary helped in the later stages.

I have created product-specific notes and also the important topics to cover from every GCP offering on PDE. You can find it here.

During the exam

- The invigilator sets up everything for you, and then you start the exam by agreeing to the terms and conditions. Keep the keyboard as far away as you can, as most of the computers used for proctored exams are very old.

- There will be panic, tension, and fear, but no worries, if you have done the practice tests, you’ll be okay answering even the unknown questions because of the practical approach you’ve been doing during those practice tests.

- If you’re stuck on any question, don’t waste too much time, just mark it for review and move on to the next one.

- Always go with your instincts when answering a confusing question (you’re prepared for this; the multiple practice tests you took will do the magic here).

After the exam

- When you finish the exam, you will only be given a pass or fail grade.

- On your screen, it shows that Google will take 7–10 days to send your official results, but for me, it took only 14 hrs.

- With the official results, you’ll also receive a redemption code along with the official Google Cloud Professional Data Engineer Certificate and a badge.

- You can redeem the code in the Google Cloud Certification Perks Webstore and choose any one of the available perks. I chose a grey hoodie and am still waiting for its delivery.

Learn from fellow certified Data Engineers:

- Sathish VJ — Github Repo for GCP PDE

- GCP PDE in 4 days — Sai Krishna

- How I Passed the Google Cloud Professional Data Engineer Certification Exam — Daniel Bourke

- How to Crack the Google Cloud Professional Data Engineer Exam in 1 Month — Revannth V

- How to pass the GCP Professional Data Engineer exam in 2 months — Ting Hsu

- How to pass the Cloud Architect and Data Engineer GCP certifications — Ivam Luz

- How to Prepare for GCP: Professional Data Engineer Exam — Hardik Rathod

Conclusion:

My main takeaway from this process was the knowledge I gained about GCP products and their analytics offerings; the certification also looks good on my resume. By the end of your preparation, you will have everything you need to act as an architect in designing data engineering pipelines. The journey of preparing for this exam takes you through multiple real-life use cases and tests your knowledge in some of the trickiest situations. This preparation changed my understanding of the data engineering services in GCP, and I hope it does the same for you.
