A set of useful pandas tools to successfully load and transform a JSON file

Loading and doing Transformations over a JSON (JavaScript Object Notation) file is something pretty common in the Data Engineering/Science world.

JSON is a widely used format for storing and exchanging data. For example, NoSQL database like MongoDB store the data in JSON format, and REST API’s responses are mostly available in JSON.

Although JSON works great for exchanging data over a network, if we intend to process the data, we would need to convert it into a tabular form, meaning something with columns and rows.

Now, in general terms, we can encounter two types of JSON structures in a file:

- a JSON object

- a list of JSON objects

In this article, we will focus on the second one (a list of JSON objects), as I understand it’s the most common scenario and more importantly, by learning to deal with the list scenario, we can then easily deal with the single JSON object one.

To deal with a list of JSON objects we can use pandas, and more specifically, we can use 2 pandas functions: explode() and json_normalize().

Let’s explain each one briefly and then move over to a full example.

This article goes as follows:

- Explain briefly the explode() function

- Explain briefly the json_normalize() function

- Use both of the in a full example to create a cured Data Frame.

- Do some transformations

- Dump the transformed data as CSV

Please check out the Notebook for the source code.

1. Explode

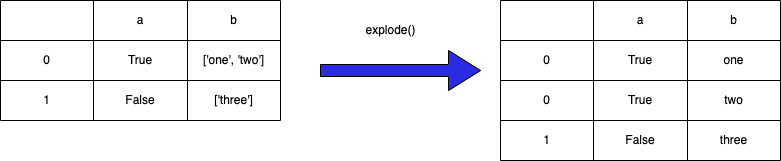

The explode() function is used to transform each element of a list like value to a row, maintaining the old index value for the new elements.

Like this:

The function uses the following syntax:

df.explode('column_to_explode', ignore_index=False)we can also send a list of columns to explode:

df.explode(['col_1', 'col_2'], ignore_index=False)It would look something like this

Now let’s explode by col_2

Notice how the index of the exploded row is kept.

2. JSON Normalize

The json_normalize() function does what we call flattening over a semi-structured column. Meaning, it creates new columns for each property of the structure.

This is the syntax:

data = [

{

"id": 1,

"name": {

"first": "Coleen",

"last": "Volk"

}

},

{

"name": {

"given": "Mark",

"family": "Regner"

}

},

{

"id": 2,

"name": "Faye Raker"

},

]pd.json_normalize(data)or within a column of your Data Framepd.json_normalize(df['a_column'])

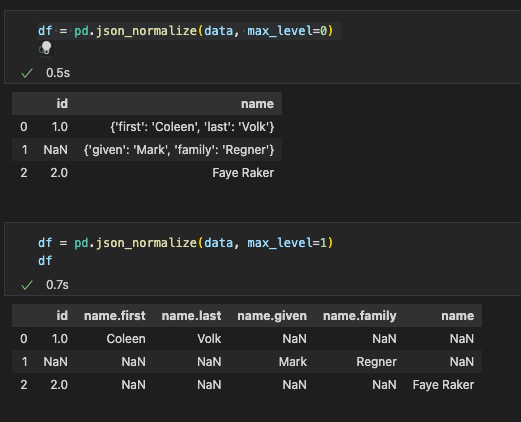

Let’s se it:

As we can see, it created a Data Frame from our raw JSON, taking each property from the root as a column.

Nested properties are the interesting part here. JSON normalize takes each one and creates a column maintaining its original path.

ie.

"name": {

"first": "Coleen",

"last": "Volk"

}becomesname.first, name.last

We should also notice that normalize takes all sub parameters found of “name” (first, last, given, family and a plain string) and for each one creates a column; filling with NaN for missing values.

We can choose the separator

pd.json_normalize(data, sep="<our_sep>")

We can also control the level of the JSON until which we want to normalize

pd.json_normalize(data, max_level=<my_int_level>)where…

Let’s see that in action

3. Let’s look at a full example

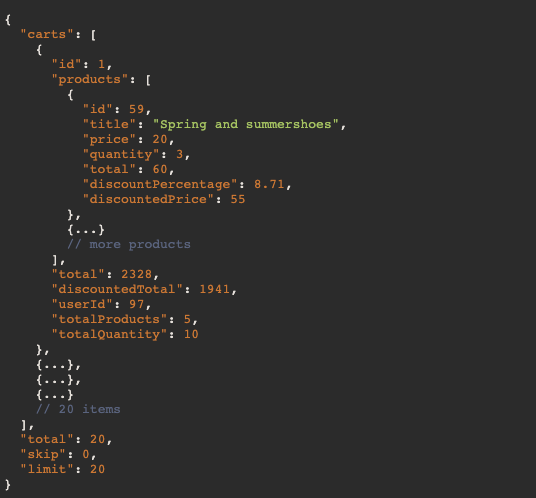

As an example, we are going to use a list of cart items from an e-commerce store from the dummyjson api.

Suppose we have a list of shopping carts we need to process in some way.

Let’s say we have this JSON in a raw format in some blob we need to extract and transform:

In this example, we have a main object with the carts property, which inside has the list we need.

Let’s say we need to extract the cart products or items and do the following transformations over it and output the result into AWS S3 for further processing or storage:

- Create a new boolean column called big_sale based on the total of a product and a threshold of what constitutes a big sale.

- Add a <processed_ts> timestamp to each row.

If we load the cart data into a pandas Data Frame and explode the products column, it would look like this:

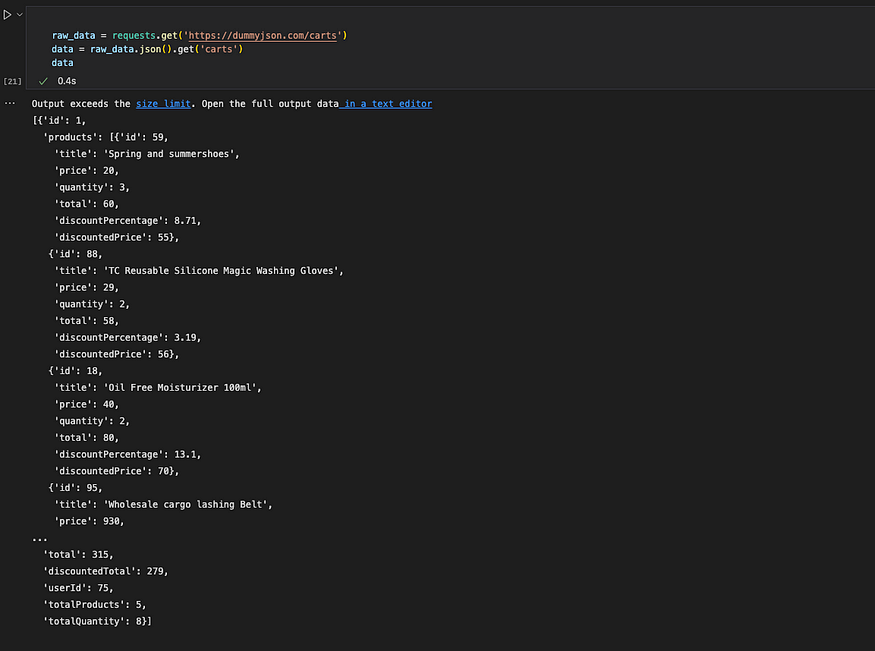

We loaded the data using the requests library. Then into pandas:

Looks good, now we have a row for each product in each cart. Notice the cart id repeats, thats what we want for now…

We now need to deal with the JSON format in which the products are in

For this, we are going to use the json_normalize from before to normalize the JSON data and join the result to our exploded Data Frame. Let’s take a look at that

Now we have a column for each JSON property of the products column

Next, we could join both exploded and normalized Data Frames to get a full representation of the JSON in one Data Frame. But there is a small issue we need to deal with first:

The index of the exploded_df is wrong, we need to reset it to join by index.

So, we do the following:

- remove the old products column from the exploded Data Frame

- reset the index on the exploded Data Frame

- join both exploded and normalized Data Frames

Let’s go

First we remove the old products column and then we reset the index.

exploded_df.drop(['products'], axis=1, inplace=True)exploded_df.reset_index(inplace=True)

Notice the indexes now match.

Before we can join, we need to deal with two columns that overlap.

The columns id and total exist in both exploded and normalized dfs. For this, when we join we will use the suffix options of panda’s join.

Let’s see that

joined_df = exploded_df.join(normalized_df, lsuffix='_cart', rsuffix='_product')

perfect, now we can do some transformations…

4. Transformations

We need to do two things:

- Create a new boolean column called big_sale based on the total of a product and a threshold of what constitutes a big sale.

- Add a <processed_ts> timestamp to each row.Let’s go…

We will set a threshold to 100 for big sale (≥ is big) and using the apply() function of pandas we will create a new Series for the big_sale column.

Note that the total column for the product is called total_product after the join.

threshold = 100big_sale_col = joined_df.apply(lambda row: row.total_product >= threshold, axis=1)

now we can add it to our joined Data Frame

joined_df['big_sale'] = big_sale_col

Great, now for the next transformation, let’s simply add a new column with the current date as timestamp.

from datetime import datetimenow = datetime.now()

timestamp = datetime.timestamp(now)joined_df['processed_ts'] = timestamp

Don’t forget to import datetime

Great! We have everything. Let’s export the data

5. Dump the transformed data as CSV

Obviously, this is an example, in a real-world scenario we would need to output the processed data into the cloud and maybe not CSV but parquet or any other columnar format.

Still, following the example, let’s output this as a processed.csv file locally

joined_df.to_csv('processed.csv', index=False)

Conclusion

Dealing with a raw JSON file is a pretty common real life scenario, and pandas is great for this.

By combining both explode and normalize, we can get a JSON file into a Data Frame for processing. This works great for processing data coming from, for example, an API which is usually in JSON format.

Granted, exploded is only needed when dealing with a list of objects, but this is a fairly common thing to have. Anyway, if your JSON doesn’t need it, the json_normalize() function would be enough for you to process your JSON.

Let’s summarize what we did:

- Loaded a JSON into a Data Frame

- Exploded the list like columns

- Normalized the JSON format columns

- made some simple transformations

- exported the data

Thanks for reading!

Please check out the notebook for the source.

No comments:

Post a Comment