The main goal driving Apache Spark is to let users build big data applications on a unified platform, in an accessible and familiar way. Spark is designed so that traditional data engineers and analytical developers can easily apply their current skill sets, whether those are programming languages or data structures.

But what does all that mean? You still haven’t answered the question!

Apache Spark is a computing engine that exposes multiple APIs (Application Programming Interfaces). These APIs let a user interact with the back-end Spark engine using familiar methods. One key aspect of Apache Spark is that it is not a long-term storage system. Data is notoriously expensive to move from one location to another, so Apache Spark brings its compute to the data, wherever it resides. Through its user-facing APIs, Spark tries to make each storage system look reasonably similar, so that applications do not need to worry about where their data is stored.

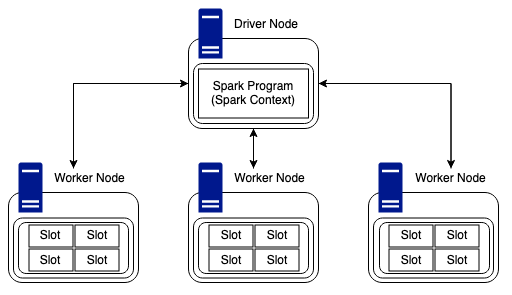

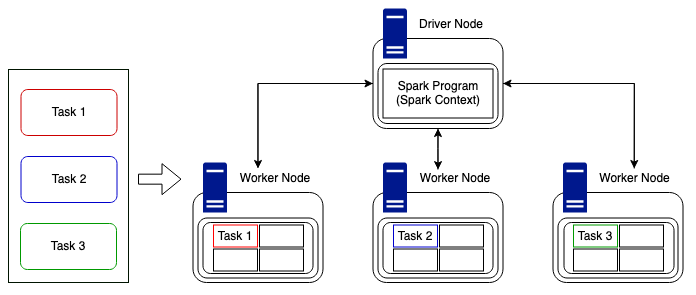

This compute engine allows a user to create an end-to-end solution that utilises the distributed capabilities of multiple machines, all working towards a common goal. This could be large-scale aggregations, machine learning on large datasets or even streaming data with massive throughput. Problems like these are commonly referred to as “Big Data” problems.

Why is Big Data a Problem?

Big data has many definitions, but one that stuck with me is:

If data is too big to fit onto your device, it’s Big Data!

The term “big data” has been around for many, many years, and analysing such data has been a challenge almost since computing began! Many engines and systems have been built to help manage big data, each using different methods to increase speed, efficiency and reliability; parallel compute was one of these developments. As technology advances, the rate at which data is collected is increasing exponentially, and collecting data is becoming progressively cheaper. Many institutions collect data simply for the sake of collection and logging, so answering business-critical questions from this data was, and still is, becoming more and more expensive with the ageing compute engines that were available before Apache Spark.

Spark was developed to tackle some of the world’s largest big data problems, from simple data manipulation all the way through to deep learning.

Who Created it?

Spark was initially started by Matei Zaharia at UC Berkeley’s AMPLab in 2009, and open sourced in 2010 under a BSD license.

In 2013, the project was donated to the Apache Software Foundation and switched its license to Apache 2.0. In February 2014, Spark became a Top-Level Apache Project.

In November 2014, Databricks, the company co-founded by Spark’s creator Matei Zaharia, set a new world record in large-scale sorting using Spark.

Spark had in excess of 1000 contributors in 2015, making it one of the most active projects in the Apache Software Foundation and one of the most active open source big data projects.

Spark Libraries:

There are a multitude of libraries available in Apache Spark, many of which are developed directly by the Spark community. These libraries include, but are not limited to:

- MLlib

- Spark Streaming

- GraphX

These libraries have been developed to be interoperable, meaning they enable end-to-end solutions to be written within the same engine environment.

Spark Language Capabilities:

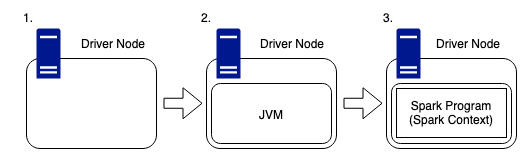

Spark allows the user to write code in Python, Java, Scala, R or SQL, although Python and R require the relevant interpreter to be installed. Spark itself runs inside a JVM (Java Virtual Machine): Scala and Java code compiles to JVM bytecode, while Python, R and SQL code written against Spark’s structured APIs is translated into execution plans that run on that same JVM-based engine (custom Python or R functions, such as UDFs, still execute in separate interpreter processes). So wherever you run your Spark instance, you need an installation of Java, plus the interpreter for any non-JVM language you use.

Apache Spark can be run in a multitude of ways. Running it locally is common for general development and for hobbyists; in production, however, most instances are deployed in a cloud environment. Examples include using AWS EMR to manage node (driver and worker) instances, Azure HDInsight, or an online notebook experience in the form of Databricks.

- https://aws.amazon.com/emr/

- https://azure.microsoft.com/en-gb/services/hdinsight/

- https://community.cloud.databricks.com/

Whether Spark is run locally or in a cloud environment, the user can access the different interactive shells via a console or terminal. These come in the form of:

- Python Console

- Scala Console

- SQL Console

The Databricks notebook environment differs, however: it offers an experience similar to the user interface provided by Jupyter. The Databricks environment has plenty of extra functionality built into the platform, which helps users maximise their productivity by having access to all these features in one place.

Latest Spark Release:

The latest Spark release can be found here:

Finally

This section is quite light on depth, but it lays out the scope for the sections to come. These further sections will dive deeper into each aspect, such as architecture, libraries and data structures.

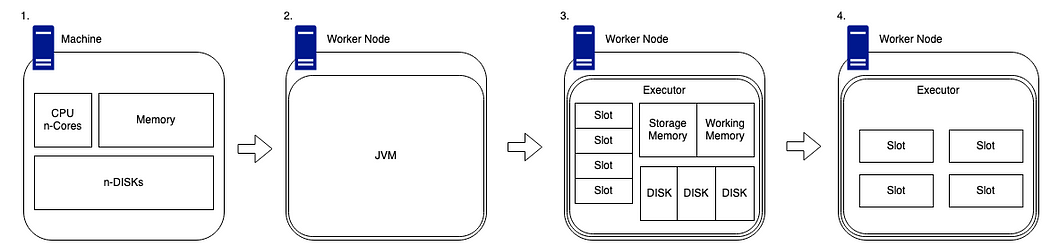

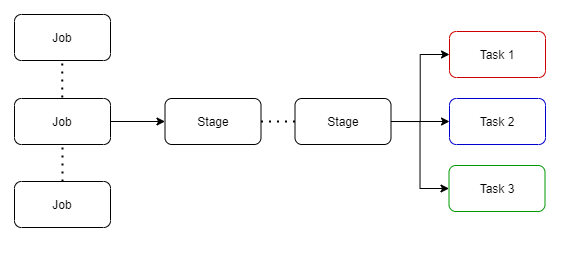

The next section will take an in-depth look at how Apache Spark runs on a cluster and how the physical architecture works alongside the runtime architecture.