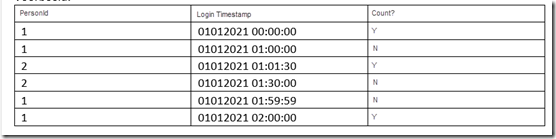

A nice SQL challenge was presented to me by a colleague. The challenges basically consisted of this table. A table contains records that describe login events. Each record has a login timestamp and the identifier of the person logging in. The challenge is to count “unique” login events. These have been defined as unique, non-overlapping two hour periods in which a person has logged in. Such as period starts with a login that is at least two hours after the start of a previous period for that same person.

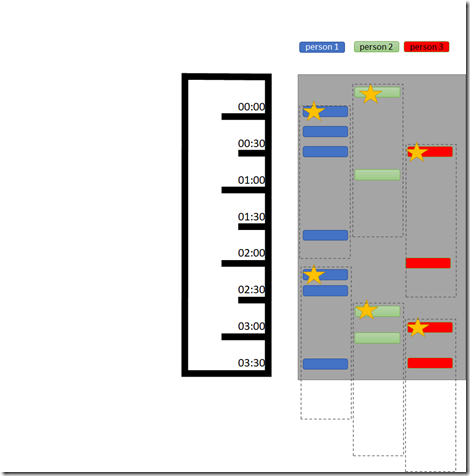

Visually this can be shown like this:

You can follow along with this challenge in the live database environment offered by LiveSQL at this link.

All the records marked with a star are counted. All other records are within 2 hours from a starred record and are therefore excluded because they are considered duplicate logins

I followed the following logic while constructing a solution for this challenge:

- For each login record, find the first login record of the same person in the period of two hours prior to the record; that can be the record itself, but could also be an earlier login record

- All records that are the first in a two-hour period are “block starters”, records that start a two-hour period in which we can ignore all subsequent logins for that same person (these block starters are definitely to be included in the final result)

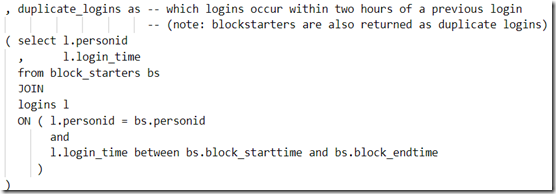

- Find all records (we call them duplicate_logins) that fall into a two hour login block for a person (a two-hour period from the login time of a block starter); these are logins that we should not count

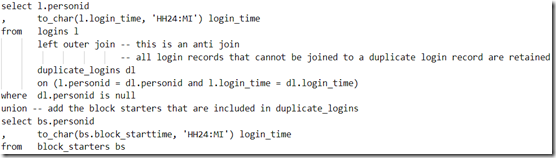

- Select all records that are not a duplicate_login – these are records that may have a preceding login in the two hours before their login timestamp but that login is within a two hour block and they are not. Note: since the block_starters are also counted as duplicate_login, they must be added separately to the result

Each bullet is implemented in the query with an inline view:

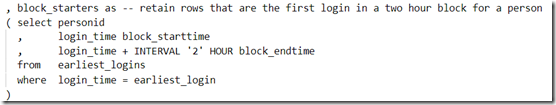

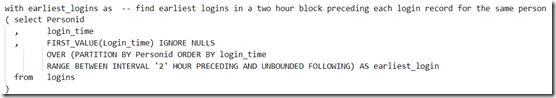

earliest_logins: For each login record, find the first login record of the same person in the period of two hours prior to the record; that can be the record itself, but could also be an earlier login record

block_starters: All records that are the first in a two-hour period are “block starters”, records that start a two-hour period in which we can ignore all subsequent logins for that same person (these block starters are definitely to be included in the final result)

duplicate_logins: Find all records (we call them duplicate_logins) that fall into a two hour login block for a person (a two-hour period from the login time of a block starter); these are logins that we should not count

and finally:

Select all records that are not a duplicate_login – these are records that may have a preceding login in the two hours before their login timestamp but that login is within a two hour block and they are not. Note: since the block_starters are also counted as duplicate_login, they must be added separately to the result

The last step that should be added is of course the count operation (select l.personid, count(login_time) from final group by personid).

Overall query and result

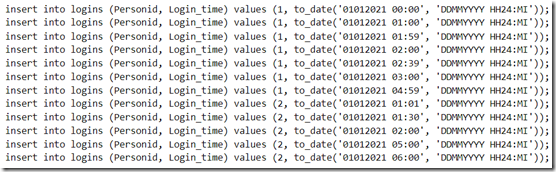

This result is for this set of records:

Resources

LiveSQL – demo at this link.

Anti Join in SQL – my earlier anti search pattern exploration https://technology.amis.nl/it/anti-search-patterns-sql-to-look-for-what-is-not-there-part-one/

As by my understanding of the original challenge description, I think that this solution does have a problem.

Specifically, for your sample data set, the rows to be counted should be as follows:

PERSONID LOGIN_TIME COUNT_YN

——————————————-

1 2021-01-01 00:00 Y

1 2021-01-01 01:00

1 2021-01-01 01:59

1 2021-01-01 02:00

1 2021-01-01 02:39 Y

1 2021-01-01 03:00

1 2021-01-01 04:59 Y

2 2021-01-01 01:01 Y

2 2021-01-01 01:30

2 2021-01-01 02:00

2 2021-01-01 05:00 Y

2 2021-01-01 06:00

12 rows selected.

In other words, for personid = 1 the solution should return 3 rows only, and not 4.

Depending on whether the 2 hours interval limit is inclusive or not, the solution should include either the row with time 02:00

( if the 2 hour limit is exclusive ) or the row with time 02:39 (if the 2 hour limit is inclusive),

but, anyway, it cannot contain the row with time 03:00, which is less than 2 hours after the previously included row.

The problem is that, for personid=1, the “block_starters” subquery only returns the first row (time 00:00),

while in fact there are 3 rows

It looks to me that the problem cannot be solved without using one form or another of a “procedural” approach.

Here are 3 solutions, using 3 different approaches ( considering the 2 hours interval as being inclusive ):

——————————————

a. Using a recursive WITH query

——————————————

with recurs (personid, lvl, login_time, last_time, rn) as (

select personid,

1 lvl,

min(login_time),

min(login_time) + interval ‘2’ hour,

1

from logins

group by personid

union all

select r.personid,

r.lvl + 1,

l.login_time,

l.login_time + interval ‘2’ hour,

row_number() over(partition by r.personid

order by l.login_time) rn

from recurs r,

logins l

where l.personid = r.personid

and l.login_time > r.last_time

and r.rn = 1

)

—

select personid, login_time

from recurs

where rn = 1

order by personid, login_time

/

PERSONID LOGIN_TIME

——————————–

1 2021-01-01 00:00

1 2021-01-01 02:39

1 2021-01-01 04:59

2 2021-01-01 01:01

2 2021-01-01 05:00

5 rows selected.

——————————————

b. Using MATCH_RECOGNIZE

——————————————

select personid, login_time

from logins

match_recognize (

partition by personid

order by login_time

measures

match_number() mn,

strt.login_time as login_time

one row per match

pattern (strt same*)

define

same as (login_time <= strt.login_time + interval '2' hour)

)

/

PERSONID LOGIN_TIME

——————————–

1 2021-01-01 00:00

1 2021-01-01 02:39

1 2021-01-01 04:59

2 2021-01-01 01:01

2 2021-01-01 05:00

5 rows selected.

——————————————

c. Using the MODEL clause

——————————————

select personid, login_time

from (

select *

from logins

model

partition by (personid)

dimension by (row_number() over (partition by personid

order by login_time) as rn)

measures (

login_time,

'N' count_yn

)

rules (

count_yn[any] order by rn =

case

when cv(rn) = 1 then 'Y'

when max(case when count_yn = 'Y'

then login_time

end) [rn < cv()] + interval '2' hour < login_time[cv()] then 'Y'

else 'N'

end

)

)

where count_yn = 'Y'

/

PERSONID LOGIN_TIME

——————————–

1 2021-01-01 00:00

1 2021-01-01 02:39

1 2021-01-01 04:59

2 2021-01-01 01:01

2 2021-01-01 05:00

5 rows selected.

Thanks a lot for your attention & Best Regards,

Iudith Mentzel

No comments:

Post a Comment