The rise of Large Language Models (LLMs) has generated significant excitement in the tech community. However, the real value lies in turning this innovation into practical, high-impact applications — especially in fast-paced, user-facing environments like quick commerce.

At Z, where a majority of our business is driven by in-app search, every query matters. A misspelled or poorly understood query can mean the difference between a successful purchase and a dropped session. In a landscape where users type in a mix of language & script variants — often phonetically or on the go — getting query understanding right is not just a nice-to-have; it’s mission-critical.

This blog dives into one such deceptively simple but deeply impactful problem: detecting and correcting misspelled multilingual queries, particularly vernacular queries typed in English lettering. We will walk through how we built a robust spell correction system using LLMs, tailored to the nuances of our user base and designed to meaningfully improve search relevance, user experience, and ultimately, conversion.

With query correction, relevance skyrocketed — from 1 in 4 results being eggs to 4 out of 4.

The Core Challenge

The task of detecting and correcting multilingual misspellings is complicated by the fact that many of our users input queries in vernacular languages using English lettering (e.g., typing “kothimbir” for “coriander”, or “paal”, which means “milk” in Tamil). Most existing language models are built around native scripts, making it difficult to accurately understand and correct such queries.

Our Approach: Building an MVP with Llama3

We began by prototyping a multilingual spell corrector using API-based LLM playgrounds. After testing multiple models, we selected Meta’s Llama3-8B model, which provided a good balance of performance and accuracy for our use case.

The next step was to scale this solution. Relying on external APIs posed two major challenges: cost and reliability. To mitigate this, we hosted the Llama3 model on our in-house Databricks model serving layer, which allowed us to integrate it with Spark jobs, ensuring high throughput and parallel access.

Fine-Tuning for Accuracy

After deploying the model, we turned to instruct fine-tuning — a lightweight yet powerful alternative to full model fine-tuning. Instead of modifying model weights, we focused on prompt engineering and system instruction design to teach the model specific behaviors like spelling correction, vernacular normalization, and disambiguation of mixed-language inputs.

We experimented with:

- Role-specific system messages: We explicitly instructed the LLM to behave as a multilingual spell corrector, helping it narrow its focus and reduce hallucinations. For instance, we defined its role as “You are a spell corrector that understands multilingual inputs.”

- Few-shot examples: We included a diverse set of input-output pairs across English and vernacular queries (e.g., “skool bag” → “school bag”, “kothimbir” → “coriander leaves”) to demonstrate expected behavior, enabling the model to generalize better to unseen inputs.

- Stepwise prompting: Instead of asking the model to directly return the final corrected query, we broke down the process into intermediate steps like: (1) detect incorrect words, (2) correct them, and (3) translate if needed. This decomposition improved the model’s accuracy and transparency.

- Structured JSON outputs: To make downstream integration seamless, we enforced a consistent output schema — e.g., { “original”: “kele chips”, “corrected”: “banana chips” }. This reduced parsing errors and ensured clean handoff to our ranking and retrieval systems.
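
The four techniques above can be combined in one prompt builder. The following is a minimal sketch, not our production prompt: the chat-message layout, the wording of `STEPS`, and the helper names `build_messages` and `parse_correction` are assumptions made for illustration. Only the system message and the example pairs come from this post.

```python
import json

# Role-specific system message quoted in the post.
SYSTEM_MESSAGE = "You are a spell corrector that understands multilingual inputs."

# Few-shot pairs taken from the examples in this post.
FEW_SHOT = [
    ("skool bag", "school bag"),
    ("kothimbir", "coriander leaves"),
    ("kele chips", "banana chips"),
]

# Stepwise instructions plus the JSON schema (wording is an assumption).
STEPS = (
    "Work in steps: (1) detect incorrect words, (2) correct them, "
    "(3) translate to English if needed. "
    'Reply with JSON only: {"original": "...", "corrected": "..."}'
)


def build_messages(query: str) -> list[dict]:
    """Assemble a chat-style prompt: role, steps, few-shot pairs, then the query."""
    messages = [{"role": "system", "content": f"{SYSTEM_MESSAGE} {STEPS}"}]
    for original, corrected in FEW_SHOT:
        messages.append({"role": "user", "content": original})
        messages.append({
            "role": "assistant",
            "content": json.dumps({"original": original, "corrected": corrected}),
        })
    messages.append({"role": "user", "content": query})
    return messages


def parse_correction(raw_reply: str, query: str) -> str:
    """Return the corrected query, falling back to the original on bad output."""
    try:
        payload = json.loads(raw_reply)
    except json.JSONDecodeError:
        return query
    if not isinstance(payload, dict):
        return query
    corrected = payload.get("corrected")
    if isinstance(corrected, str) and corrected.strip():
        return corrected
    return query
```

Falling back to the original query when the reply is not valid JSON is one possible design choice: a malformed model response then degrades to "no correction" rather than breaking the search request.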

This iterative instruct-tuning significantly improved task accuracy without increasing inference cost. It also allowed us to evolve the model quickly across use cases without retraining.

However, one issue that surfaced was the treatment of brand names. The model would often flag brand names as misspelled words. Initially, we included a list of brands in the prompt itself, but this increased the context length and inference time, reducing efficiency.

Enter RAG: Retrieval Augmented Generation

To overcome the limitations of static prompts and reduce dependency on long context windows, we implemented Retrieval Augmented Generation (RAG), a paradigm that enhances LLM performance by grounding it with relevant, real-time contextual information from an external knowledge base.

Our Architecture

We designed a two-stage architecture:

- Semantic Retrieval Layer

Each incoming user query is converted into an embedding using a multilingual embedding model. This embedding is matched against product embeddings stored in a Vector DB using Approximate Nearest Neighbor (ANN) search.

- Contextual Prompt Construction

The retrieved product results — including titles, brand names, and spelling variants (autosuggestion corpora) — are filtered, deduplicated, and compiled into a dynamically constructed prompt, which is passed to the LLM for correction.
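
The two stages can be sketched end to end in a few lines. This is a toy illustration under loud assumptions: `embed` uses character-trigram counts as a stand-in for a multilingual embedding model, `retrieve` does an exact brute-force search instead of ANN over a Vector DB, and `CATALOG`, `describe`, and `build_prompt` are hypothetical names. A trigram embedding only captures surface similarity, so unlike a real multilingual model it cannot map a vernacular word to its English product name.

```python
import math
from collections import Counter

# Hypothetical mini catalog; a real one would live in a vector database.
CATALOG = [
    {"title": "Banana Chips", "brand": "Haldiram"},
    {"title": "Corn Flakes", "brand": "Kellogg's"},
    {"title": "Coriander Leaves", "brand": ""},
    {"title": "Banana Chips", "brand": "Haldiram"},  # duplicate on purpose
]


def describe(product: dict) -> str:
    """Text used both for indexing and for the prompt context."""
    return " ".join(part for part in (product["brand"], product["title"]) if part)


def embed(text: str) -> Counter:
    """Toy embedding: character-trigram counts (stand-in for a real model)."""
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 2) -> list[dict]:
    """Stage 1: exact top-k by cosine similarity (a real system would use ANN)."""
    q = embed(query)
    ranked = sorted(CATALOG, key=lambda p: cosine(q, embed(describe(p))), reverse=True)
    unique, seen = [], set()
    for product in ranked:  # deduplicate while keeping rank order
        key = describe(product)
        if key not in seen:
            seen.add(key)
            unique.append(product)
    return unique[:k]


def build_prompt(query: str, k: int = 2) -> str:
    """Stage 2: inject only the retrieved entries into the correction prompt."""
    context = "\n".join(f"- {describe(p)}" for p in retrieve(query, k))
    return (
        "Known catalog entries (do not 'correct' these brand names):\n"
        f"{context}\n"
        f"Query to correct: {query}"
    )
```

Calling `build_prompt("kellogs cornflex", k=1)` yields a prompt whose context contains only the Kellogg's entry, which is the idea behind keeping the prompt short while still protecting brand names.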

Why This Matters

Each component of this setup was built to address real-world challenges specific to a multilingual, quick commerce setting:

1. Robustness to Noisy Inputs

The retrieval engine operates in semantic space, so even severely misspelled or phonetically written queries return meaningful results.

- Example:

Query: “balekayi cheeps”

→ Retrieved: { “title”: “Banana Chips”, “brand”: “Haldiram” }

→ Corrected: “banana chips”

- Example:

Query: “kottimbeer pudina”

→ Retrieved: [“kothimbir (coriander)”, “pudina (mint)”]

→ Corrected: “coriander and mint leaves”

This helps us bypass brittle rule-based or edit-distance-based approaches, which often fail on multilingual spelling variants.
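
To see why edit distance alone is brittle here, consider a minimal sketch of a Levenshtein-based matcher. The `max_edits` budget of 2 and the helper name `within_typo_budget` are assumptions for illustration, not values from our system.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute or match
            ))
        previous = current
    return previous[-1]


def within_typo_budget(query: str, target: str, max_edits: int = 2) -> bool:
    """True when the query is a plausible small typo of the target."""
    return levenshtein(query, target) <= max_edits


# Plain English typos stay inside the budget...
print(within_typo_budget("banan", "banana"))
print(within_typo_budget("skool", "school"))
# ...but vernacular words share almost no characters with the English name.
print(within_typo_budget("paal", "milk"))
print(within_typo_budget("kothimbir", "coriander"))
```

Raising the budget far enough to connect “kothimbir” with “coriander” would also match many unrelated words, which is why a semantic retrieval step is a better fit for these queries.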

2. Brand Awareness

Earlier, we tried stuffing the prompt with a hardcoded list of brands to prevent the model from “correcting” them. This bloated the prompt and degraded latency. With RAG, we inject only query-relevant brand terms.

- Example:

Query: “kellogs cornflex”

→ Retrieved: { “brand”: “Kellogg’s”, “product”: “Corn Flakes” }

→ Model output: “Kellogg’s Corn Flakes”

- Example:

Query: “valentaz”

→ Retrieved: { “brand”: “Valentas”, “category”: “cardiac medication” }

→ Model confidently corrects: “Valentas”

3. Prompt Efficiency & Latency Reduction

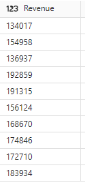

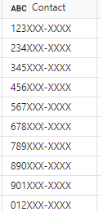

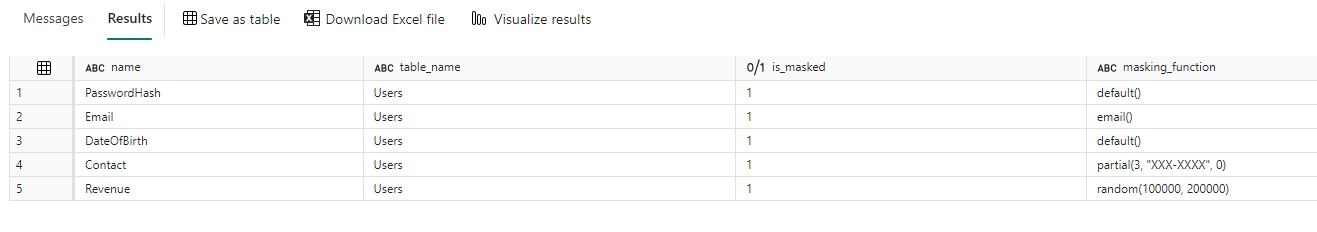

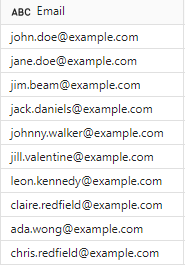

By including only the top-k retrieved entries (e.g., top 5 product variants), we keep the token count low while injecting high-precision signals. This reduced prompt size by 30–40% compared to static dictionary-based prompts and lowered average inference latency by ~18s for a batch of 1,000 queries in our benchmarks.

- Before RAG:

Prompt token count ~4,500+ tokens (with entire catalog dictionary & brand list)

- After RAG:

Prompt token count ~1,200–1,400 tokens (only contextually relevant)

4. Dynamic Learning Capability

RAG enables rapid adaptation as the catalog evolves. If a new product or brand enters the catalog, it’s automatically included in the recall without needing to retrain or reconfigure the prompt.

- Example:

When we introduced “Sundrop Superlite Advanced Oil”, users began typing “sundrap oil” or “sundorp advanced”. These were dynamically grounded using vector retrieval without any manual intervention.
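
The following sketch illustrates the idea with a tiny in-memory index: once a new product is added, misspelled queries can be grounded against it immediately, with no prompt change or retraining. Everything here is a simplified assumption: `CatalogIndex` is a hypothetical class, the character-trigram `embed` function stands in for a real embedding model, and the `min_score` threshold of 0.2 is arbitrary.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: character-trigram counts (stand-in for a real model)."""
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class CatalogIndex:
    """Hypothetical in-memory index; a real system would use a vector database."""

    def __init__(self) -> None:
        self._entries: list[tuple[str, Counter]] = []

    def add(self, title: str) -> None:
        """Index a new product; it is searchable as soon as this returns."""
        self._entries.append((title, embed(title)))

    def search(self, query: str, k: int = 1, min_score: float = 0.2) -> list[str]:
        """Return up to k titles whose similarity clears the threshold."""
        q = embed(query)
        scored = sorted(
            ((cosine(q, vector), title) for title, vector in self._entries),
            reverse=True,
        )
        return [title for score, title in scored[:k] if score >= min_score]


index = CatalogIndex()
index.add("Banana Chips")
index.add("Corn Flakes")
before = index.search("sundrap oil")   # nothing relevant is indexed yet

index.add("Sundrop Superlite Advanced Oil")
after = index.search("sundrap oil")    # the new product is now retrievable
print(before, after)
```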

Outcome

This RAG-enhanced setup transforms our LLM into a domain-aware, multilingual spell corrector that:

- Understands noisy, phonetically typed inputs

- Differentiates brands from regular words

- Adapts to evolving product catalogs

- Delivers corrections with low latency and high precision

This system is now a core enabler of our vernacular query understanding pipeline, and a blueprint we’re extending to adjacent use cases like voice-to-text query correction and personalized product explanations.

Leveraging User Reformulations as Implicit Feedback

In parallel to the LLM-driven correction pipeline, we also incorporate user behavior signals to identify likely spelling corrections. Specifically, we monitor query reformulations within a short time window — typically within a few seconds of the initial search.

If a user quickly reformulates a query (e.g., from “banan chips” to “banana chips”) and the reformulated query shows a higher conversion rate, we treat it as a strong implicit signal that the original query was misspelled. These reformulation pairs help us:

- Auto-learn new misspelling variants,

- Improve prompt coverage and examples,

- Enrich training datasets for future supervised fine-tuning,

- And reinforce the spell corrector’s decision-making in production.
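
The reformulation signal can be mined with a simple pass over the search log. This is a minimal sketch under stated assumptions: the `SearchEvent` fields, the 10-second window (the post only says "a few seconds"), and the function names are all hypothetical, and conversion is modeled as a single boolean per search.

```python
from dataclasses import dataclass


@dataclass
class SearchEvent:
    session_id: str
    timestamp: float  # seconds; any monotonic clock works for this sketch
    query: str
    converted: bool   # did this search lead to a purchase?


def reformulation_pairs(events, max_gap_seconds=10.0):
    """Return (first_query, next_query) for quick consecutive searches in a session."""
    by_session = {}
    for event in sorted(events, key=lambda e: (e.session_id, e.timestamp)):
        by_session.setdefault(event.session_id, []).append(event)
    pairs = []
    for session in by_session.values():
        for first, second in zip(session, session[1:]):
            gap = second.timestamp - first.timestamp
            if first.query != second.query and gap <= max_gap_seconds:
                pairs.append((first.query, second.query))
    return pairs


def conversion_rate(events, query):
    """Share of searches for this exact query that converted."""
    outcomes = [e.converted for e in events if e.query == query]
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def likely_misspellings(events, max_gap_seconds=10.0):
    """Keep pairs whose reformulated query converts better than the original."""
    return sorted({
        (original, fixed)
        for original, fixed in reformulation_pairs(events, max_gap_seconds)
        if conversion_rate(events, fixed) > conversion_rate(events, original)
    })
```

In practice such pairs would likely also need a minimum-volume filter before being trusted, since a single session proves little about a query's conversion rate.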

This behavior-driven feedback loop operates in the background and complements our LLM + RAG architecture with real-world user corrections — grounding the model in observed search behavior.

We have been building several other iterations to continuously improve the model, which we plan to cover in a future sequel to this blog.

Results: Speed, Accuracy, and Scalability

By fine-tuning our prompts and using RAG, we achieved a solution that was both fast and accurate. Hosting the model on Databricks ensured scalability, while instruct fine-tuning and RAG minimized costs and maintained high performance.

Impact

The implementation of this multilingual spell corrector had a significant positive impact on user experience and business metrics. By addressing incorrect, multilingual, and mixed grammar queries, we observed a 7.5% increase in conversion rates for the impacted queries. This improvement highlights the critical role that accurate search query understanding plays in driving user engagement and overall performance in a quick-commerce setting. The project not only enhanced the search experience but also contributed to the bottom line by helping users find the right products more efficiently, leading to increased sales and customer satisfaction.

Key Takeaways for Building with LLMs

- API Access vs. Self-Hosting: While API-based LLM services are great for prototyping, hosting models internally can provide better scalability and cost control in production.

- Instruct Fine-Tuning: Shaping behavior through instruction prompts, rather than retraining the entire model, can save resources and improve task specificity.

- RAG for Efficiency: Retrieval Augmented Generation helps reduce context size and improve efficiency by providing only the necessary information to the model.

- Multi-Task Learning: With careful prompt engineering, LLMs can handle multiple tasks by breaking complex tasks down into smaller, more manageable steps.